meljor wrote:I never was much of a quake fan, so i don't know. Maybe quake doesn't rely as strong on fpu then?

And i didn't say it can't run games nicely because it can and a whole bunch of them as well, p2 is simply quite a bit faster in most of them.

Quake and Quake II are VERY FPU-intensive, but more so in the software renderers, as they do weird things with pixel data buffering in the FP registers. (Quake I is also extremely P5-specific in its optimization, to the exclusion of P6 and everything else, though it also seems to have an affinity for Socket 5/7/SS7 motherboards, possibly due to the direct-mapped board-level cache arrangement. I haven't seen tests with board-level cache enabled/disabled to demonstrate this; using a K6-2+ or K6-III would be significant there, with the on-die L2 putting things more in line with Slot 1/370 or Socket 8.)

Quake II is P6-friendly, but doesn't take advantage of the quirks/efficiencies the K6 family and Cyrix CPUs offered.

MMX does most of what Quake's weird FPU pixel buffering/operations (and perspective correction calculations) do, only better and faster, albeit with integer precision limits vs FP, and it does so much more consistently across different CPUs. Unreal's software renderer should be a much fairer comparison of MMX-enabled CPUs of the era, though the non-MMX capable ones will look a bit bad. (Even the 6x86MX with its relatively modest MMX unit will smoke the 6x86 classic at the same clock speeds.)

As an aside: Unreal's software renderer has a pretty decent selection of detail options accessed through the console (using the 'preferences' command) that can disable that ugly dithered 'fast translucency' (which doesn't speed things up much anyway, at least with MMX enabled), and you can also enable/disable the pseudo bilinear filtering effect that's normally forced on at resolutions over 400x300 (and forced off at lower res, and at all resolutions on non-MMX CPUs).

I'm not sure if Unreal or Unreal Gold uses 3DNow!, but given the way the software renderer works, it probably wouldn't have been tough to include. Hell, I wouldn't be surprised if they used MMX for the geometry engine rather than going floating-point there (aside from the P55C, with its relatively slow integer multiply, all the Socket 7 and Slot 1/S370, and Socket 8, based CPUs can do integer matrix math faster in MMX than on the FPU). Given how 3DNow! is mostly just a floating-point extension of MMX's feature set, it wouldn't be tough to adapt the code to use that in place of some (or most or all) integer MMX ops. (The P5 family is the only one of the bunch that has a significantly faster FPU multiply than ALU multiply, let alone MMX. All the others, including the P6 Intel chips, have integer multiply faster than or approximately as fast as FPU multiply, usually with better parallelism too, given the greater superscalar optimization of the integer pipelines and the larger pool of registers, renamed registers included, available for ALU operations.)
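For anyone wondering what "integer matrix math in MMX" boils down to: the workhorse is the PMADDWD instruction, which multiplies four pairs of signed 16-bit words and adds adjacent products into two 32-bit sums, so a 4-element dot product takes one PMADDWD plus one add. Below is a rough Python emulation of that idea. It is only a sketch, not real MMX code and not anything taken from Unreal; the 8.8 fixed-point format and the names `pmaddwd`, `dot4` and `transform` are my own assumptions for illustration.

```python
def to_s16(x):
    """Wrap an integer to signed 16 bits, like one word of an MMX register."""
    x &= 0xFFFF
    return x - 0x10000 if x & 0x8000 else x


def to_s32(x):
    """Wrap an integer to signed 32 bits, like one doubleword of an MMX register."""
    x &= 0xFFFFFFFF
    return x - 0x100000000 if x & 0x80000000 else x


def pmaddwd(a, b):
    """Emulate PMADDWD: multiply four word pairs, add adjacent products."""
    assert len(a) == len(b) == 4
    p = [to_s16(x) * to_s16(y) for x, y in zip(a, b)]
    return [to_s32(p[0] + p[1]), to_s32(p[2] + p[3])]


def dot4(row, vec):
    """4-element dot product: one emulated PMADDWD plus one 32-bit add."""
    lo, hi = pmaddwd(row, vec)
    return to_s32(lo + hi)


def transform(matrix, vec, frac_bits=8):
    """Multiply a 4x4 fixed-point matrix (assumed 8.8 format) by an integer vector."""
    return [dot4(row, vec) >> frac_bits for row in matrix]


ONE = 1 << 8  # 1.0 in the assumed 8.8 format

# Identity rotation with a translation of +5 on X (hypothetical test data).
translate_x = [
    [ONE, 0, 0, 5 * ONE],
    [0, ONE, 0, 0],
    [0, 0, ONE, 0],
    [0, 0, 0, ONE],
]

print(transform(translate_x, [10, -20, 30, 1]))  # [15, -20, 30, 1]
```

The point is just that one vertex transform is four of these dot products, all on plain integer data, which is why a slow integer multiplier (as on the P55C) hurts and a fast one helps.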

On accelerated software, a lot of engines/drivers just use plain FPU grunt (not a special case like Quake) for the 3D math, and given the relatively light AI/logic portion of games of the period, CPU-side geometry was the main bottleneck. Software rendering favors non-FPU ops more and more as resolution goes up (except for Quake's special-case texture mapping engine, where FPU ops get heavier, especially divides, as you scale resolution), but this isn't the case for 3D cards. (As res scales up, CPU geometry stays the main limit until the GPU's fillrate limit is hit and starts slowing things down at further res increases.)
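To put rough numbers on the Quake special case: if a software rasterizer does one perspective divide per fixed-length run of pixels, the divide count scales with the pixel count, while the per-vertex geometry work stays the same at any resolution. The sketch below assumes one divide every 16 pixels per scanline (a commonly cited figure for Quake's span drawer, but treat it as an assumption here) and ignores overdraw and partial spans; `perspective_divides` is a made-up helper name.

```python
import math


def perspective_divides(width, height, step=16):
    """Divides needed to cover the screen once, one divide per `step` pixels per scanline."""
    return math.ceil(width / step) * height


for w, h in [(320, 200), (640, 480)]:
    print(f"{w}x{h}: {perspective_divides(w, h)} divides per full-screen pass")
```

Under those assumptions, 320x200 needs 4000 divides per pass and 640x480 needs 19200, so the FPU divide load grows 4.8x while the vertex transform load does not change at all.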

The K6-2's combination of very fast integer operations and strong MMX performance should make MMX-accelerated software renderers favor it more than most game types of the period.

Unreal's also a neat case as it supports 16 and 32-bit color when most other software renderers were 8-bit (256 color), and in 32-bit mode it actually has some nicer shading/lighting than the Voodoo2/3 is capable of, albeit without texture filtering. (Shading/lighting and fog lack the color banding and dithering artifacts of the Voodoo, though you're limited to no texture filtering or the fake dithered texture filtering. The latter looks OK at higher res, but it kind of depends on how much you like blurred textures over raw nearest-neighbor pixels, particularly with how high-res Unreal's textures are.)

Another plus is that texture resolution/detail has next to zero performance impact on the software renderer, as pixel fillrate and not texel fillrate is the main limiting factor there. 3D accelerators, by contrast, benefit a lot from dropping texture res (usually due to fitting better into texture caches, which is a bigger deal on some GPUs than others, like Rage Pro and Riva 128 vs Voodoo2/3/Banshee). (So you can just max out the detail settings and change resolution alone for performance gain. 32-bit rendering is slightly slower than 16-bit, but the difference isn't very noticeable and is well worth the visual quality gain.)

Plus, the Direct3D renderer has some bugs (aside from crashing ones, there are a few things like depth and Z errors or scene clipping/culling errors that show up on a number of cards), so visual quality might not be better than software rendering, depending on your preference. (Especially with in-era cards, and considering the AGP issues with some cards on SS7 boards.) The Voodoo 2/3 seems to avoid most/all Direct3D errors, but you might as well run the Glide renderer anyway. (I'm not sure if the Glide renderer's geometry pipeline makes use of MMX or 3DNow!, or whether it's super P5 or P6 schedule-biased like some other games, but I somewhat doubt it's K6-schedule optimized, as that, as nice as it would be, would take the most going out of their way to do. 3DNow! optimization would be much, much more worthwhile ... or, for that matter, an integer MMX geometry engine would benefit everything but P5.)

And a note on integer vs FP: there are some added rounding headaches to deal with (or you risk visual errors like seams between polygons), but on the whole, especially for stuff of this era, fixed-point geometry works perfectly fine, and it was only the raw speed of the P5's FPU (and its sluggish ALU multiply) that favored floating-point geometry engines over integer or MMX-based ones. (All the consoles prior to the Dreamcast used 16-bit or 32-bit integer math for their geometry, mostly with DSP coprocessors or fast CPU math, but the N64's GPU included a fixed-point (integer) vector processor more analogous to MMX. The Dreamcast's SH4 CPU had floating-point vector extensions more like 3DNow! or SSE, I think more akin to SSE as they featured 128-bit registers rather than 64-bit.)
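The rounding headache is easy to show with a toy fixed-point multiply. The sketch below assumes a 16.16 format (my choice for illustration, not tied to any particular engine) and compares a multiply that just drops the low bits with one that rounds to nearest; all the helper names are made up.

```python
FRAC_BITS = 16
ONE = 1 << FRAC_BITS  # 1.0 in the assumed 16.16 format


def to_fixed(x):
    """Convert a float to 16.16 fixed point, rounding to nearest."""
    return int(round(x * ONE))


def to_float(f):
    """Convert 16.16 fixed point back to a float."""
    return f / ONE


def mul_trunc(a, b):
    """Fixed-point multiply that simply drops the low bits (rounds toward -infinity)."""
    return (a * b) >> FRAC_BITS


def mul_round(a, b):
    """Fixed-point multiply that adds half an LSB first, so it rounds to nearest."""
    return (a * b + (ONE >> 1)) >> FRAC_BITS


a = 1                # smallest positive 16.16 value, 1/65536
b = to_fixed(0.75)
print(mul_trunc(a, b), mul_round(a, b))  # 0 1

c, d = to_fixed(1.5), to_fixed(2.25)
print(to_float(mul_round(c, d)))  # 3.375
```

One LSB of difference is invisible on its own. The seams show up when two polygons sharing an edge end up with that edge computed along different code paths (or with different rounding), so the usual cure is to transform each shared vertex once and round it the same way everywhere.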

I'm not familiar with how many drivers or engines actually opted for integer based 3D geometry engines, but on paper there was plenty of reason to do so at the time. (an MMX geometry engine would be very fast on PII/Celeron processors as well as K6-2/III -and decently fast on K6 classic and Cyrix MX/MII or Pentium MMX) And prior to SSE becoming common, MMX was the most universal feature set offering the fastest 3D vertex performance potential, so plenty of reason to support it.

I know DirectX 6.x included 3DNow! (and eventually SSE) support, but I'm not sure if it falls back on raw FPU or MMX operation if both of those are absent.

DirectX 5.x based games would fall back on FPU-driven geometry engines almost for sure, though. (DirectX 5 included some sort of MMX support ... or detected its presence at least, but I don't think there was much optimization for it, let alone full geometry/T&L engines. A raw ALU-based geometry engine would work well for everything except the P5 family, P6 included, but that sort of programming seemed just plain unpopular for whatever reason. That's even though a lot of common 3D accelerators from D3D5.x's heyday relied on integer-based pipelines with precision errors such that FPU-based geometry was rather pointless ... and even sloppy 16-bit integer geometry math with rounding errors wouldn't be very noticeable.)

BTW since both 3dnow! and SSE are heavily used in 3dmark99 it is normal for your p2 to score much lower there.

Given 3DMark99 is a DirectX 6.x based benchmark, this should rely on the Direct3D drivers installed, and those optimizations should similarly benefit any games that use them. (That should be almost anything released from 1999 onward that wasn't OpenGL-specific or proprietary, and probably a number of 1998 releases too, like Unreal.)

I've seen anecdotes about DirectX 7 dropping 3DNow! support, but I'm not sure this is correct. (It might depend on the drivers, but it's also notable that 3DNow! and SSE will matter much less if you have hardware T&L enabled: a K6 classic, PII, and Celeron should all do around the same with a DirectX 7 engine at comparable clock speeds. The Cyrix 6x86 too for that matter, though probably not close to its PR rating; maybe slightly better than equal clock, but not by a big margin.)

3DMark 2000 with a Radeon or Geforce would likely even the playing field and (with sound disabled) render multimedia extensions moot as well, aside from possible physics tests. (3DNow! and SSE would be significant for physics handling)

melbar wrote:kanecvr wrote:

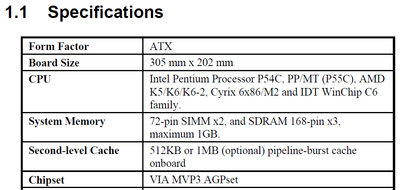

Do you really have 2MB onboard on your Aopen? Well, when I download the original manual of the Aopen AX59PRO, this is what's written in the specifications:

512KB or 1MB. Do you have a Deluxe version of this board? Anyway, it's used as L3 cache with your K6-III(+).

Sandra can tell you the board-level cache size pretty fast. (It's one of the easiest ways I've found ... confirming on the motherboard itself can be troublesome, as some cache SRAMs don't have easily accessible datasheets, and counting the number of cache chips isn't a good route, as boards of that era used both 512 kB and 1 MB 64-bit pipeline burst SRAMs. From most I've seen, though, all MVP3 boards that use 2 SRAM chips are 2 MB, while 1 MB boards use a single 1 MB SRAM and 512 kB boards use a single 512 kB SRAM. That's confusing given the PCB often has '512kB' printed on the unfilled SRAM spot, making one assume the single SRAM is only 512 kB. FIC's 503+ and 503A confused me with this.)