Boy, are you opening a can of worms there.

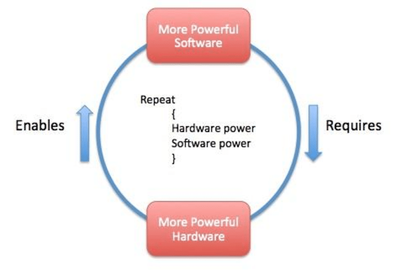

It's called the hardware-software cycle.

Stole that from here, where it's called a "virtuous cycle" that brings forth innovation and progress.

In a conventional way, it is.

But while capitalism has its merits, this is one of its systemic failures: commercial, closed-source software is an obvious insult to human understanding.

Why would we subject a product with absolutely no marginal cost to the same production environment as a toothbrush? It's bound to deliver an inferior product, clearly rewarding unfavorable aspects of commerce like fraud and monopoly. Which is what we've sadly witnessed time and again during two decades of Microsoft dominance.

Why does it happen nonetheless? Because systemic failure is, well, systemic. Manufacturers shipping their hardware with a free copy of Windows may not have planned for it, but doing so ensured their customers would soon buy a more potent system, giving the company an evolutionary edge over its competition. And so the cycle begins. It's the IT equivalent of (planned) obsolescence - the few parts of a system that actually do age physically won't come near that momentum.

While professional IT is mainly driven by productivity, innovation in private machines is a way of turning a mere tool into a conventional consumer product with a defined life cycle.

Why does open source dominate the server market? Because setting the servers up is the service rendered. And there's no point in artificially maxing out the hardware. It's scaled to purpose as it is, with energy efficiency becoming the main criterion today.

For Apple and Microsoft, Dell and Intel, it remains good business. And apart from huge losses to consoles, tablets, smartphones, TVs and speakers, the desktop and the conventional laptop remain in technological deadlock.

On a macroeconomic level, abolishing all legal protection for closed-source software would yield a huge benefit, turning software development from its head onto its feet. So far, coercing customers into buying a copy gets rewarded while the actual service of development remains opaque.

In practice: while Luddism is fun, there are certain hard limits, no user being an island. Beyond the artificial need for an up-to-date Windows OS with an inherently unsafe architecture, the web and HD video do have some merit. I'd estimate those limits today at somewhere between 1 GHz with 2 GB RAM and double that. 4 GB would mean Intel chipsets from the mid-2000s. Sure, it's nice to do real-time HD video editing at home, but how many really do? For 99.9 % of actual, real-world screen time out there, anything that does not run on such a machine is cheap programming.

Personally, anecdotal:

As an Ubuntu user since, IIRC, 8.04, I tend to get rather annoyed by the preachiness of Windows users. I can't even buy a Playboy magazine without dozens of headlines screaming things at me like "the new Windows xx and why it's awesome", "how to optimize Windows beyond a steaming shitpile" or "how do you make your shrink understand your nightmares about that insufficient swap partition".

Can't these people just use their strange bloatware in peace, without constant proselytizing?

I don't think I could reproduce a single Unix shell command without a mistake right away.

(Maybe 'll', but I'm not sure what it does.)

But since roughly 2007, or since XP SP3 ultimately annoyed the shit out of me, I download a boot CD or thumbdrive, hook up the DSL cable and install a free OS with a modern GUI and that's it.

Browser, E-Mail, Office and multimedia tools, right out of the box. Rock stable, secure, fast and not a single EULA box to check.

I have yet to find a random printer that won't work right away or a screen with the wrong resolution.

I guess Windows is for nerds who need certain videogames on a machine made for work and communication or who can't do without some strange one-platform tools of their particular circle jerk.

Last time, mommy's Windows refused to acknowledge the duplex option of an HP LaserJet 2xxx (read: THE most common printer on earth) despite a 200 MB dedicated driver package from some convoluted clusterfuck of a website - well, fixing that was a near-death experience. I'm getting too old for that shit.

And I seriously doubt the overall sanity of anyone hating on Bill Gates because of some weird conspiracy tale about vaccines or GMO seeds.

OK, his daydreams about nuclear power show that he's detached from reality and in love with bullshit, yes. And he's rich, so there's room for a lot of Bond villain fantasy.

But why? That vile f*cker has quite openly visited endless suffering upon this world in his day job! And no amount of money and philanthropy for the rest of his life is going to atone for that.

Why would you make up some more?

Why does any of that matter?

Isn't that all just fun and games in a free society?

Because we live on a finite planet and there's no planet B in sight. IT in particular is made with 60 % coal power.

Also, because no average, non-nerd user I've ever met was happy about moving to a newer system. People are ever so alienated.