First post, by 386SX

Hello,

I'm opening this thread to post something I found and tested a while ago but didn't pay much attention to at first. It concerns the last PCI video cards and their known limitations: I always thought (as I suppose many others did) that the PCI bus, and the less discussed choice of bridge IC, were the usual bottleneck of these uncommon products.

Take a card I own as an example: the GeForce 210 (or the later GeForce GT 610) in its old PCI version, which I have with two different bridge ICs. When tested in a supposedly well-supported OS like Win 8.x, I always found similar (though not identical) limitations in old games and benchmarks that weren't written for modern unified-shader architectures.

Above all, the triangle rate was always capped at about 15 MT/s on average (lower with a different bridge IC). I thought the problem was the translation through the PCIe-to-PCI bridge to the PCIe x1 GPU, together with the limits of the PCI bus itself. But then I tested the cards in Linux, using Wine to launch the x86 Windows apps through its (I suppose) D3D-to-OpenGL wrapper. I was expecting lower scores; instead the benchmark numbers are incredibly different, and so are the actual visible frame rates. That is impressive, because everything goes through API translation on a slow CPU like the old Atoms.

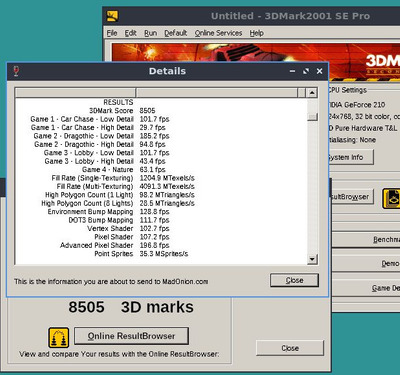

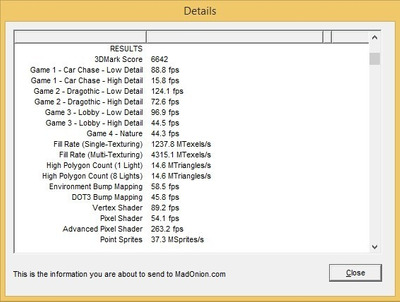

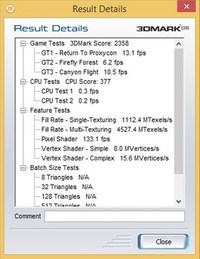

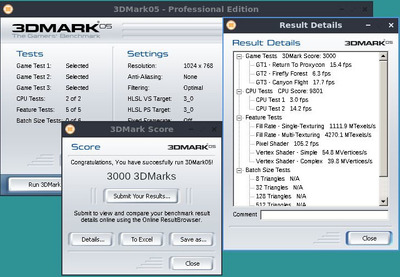

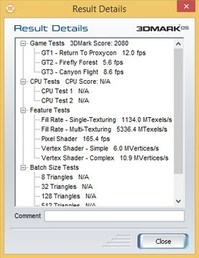

As an example, 3DMark2001SE averages a score of about 6600 in Win 8.1, while under "Linux-Wine-D3D>OGL" it scores 8505. The synthetic numbers are even more impressive: the triangle rate, which usually sat at a fixed 15 MT/s or so, now varies from 98 MT/s down to 28 MT/s in the 8-lights test. And these are far from bogus numbers; the tests are clearly MUCH faster on screen. I'll test 3DMark05 soon, but I'd expect similar results apart from the GPU's pixel shading performance.

Random in-game slowdowns are still there, which shows the PCI bridge is still at work, but even with the variable frame rate the difference is impressive. So the PCI bus isn't (at least not entirely) the problem here: the much higher triangle rate (about 6.5x in the best case, going by the figures above) suggests that the 133 MB/s PCI bus of the NM10 chipset should be enough for old games and benchmarks, compared with the usual results seen in the modern Windows tests these cards were originally aimed at and usually reviewed with.
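For anyone who wants to play with the numbers, here is a quick Python sketch of the arithmetic. The scores, triangle rates and the 133 MB/s figure are the ones quoted above; the vertex size and the "one new vertex per triangle" reuse factor are purely my assumptions for illustration, and the real benchmark may use a different vertex layout. It only gives a rough upper bound on how many triangles could be streamed across the PCI bus per second, not a model of what the driver actually does.

```python
# Figures quoted in the post (3DMark2001SE on the GeForce 210 PCI).
SCORE_WIN81 = 6600.0         # overall score, Win 8.1
SCORE_WINE = 8505.0          # overall score, Linux + Wine
TRI_WIN81_MT = 15.0          # MT/s, roughly fixed under Win 8.1
TRI_WINE_BEST_MT = 98.0      # MT/s, best case under Wine
TRI_WINE_8_LIGHTS_MT = 28.0  # MT/s, 8-lights test under Wine
PCI_PEAK_MB_S = 133.0        # theoretical peak of 32-bit / 33 MHz PCI

# ASSUMPTIONS for illustration only (not from the post):
BYTES_PER_VERTEX = 32.0      # position + normal + one UV set, all 32-bit floats
VERTICES_PER_TRIANGLE = 1.0  # ideal vertex reuse (long strips / indexed meshes)


def speedup(new, old):
    """How many times larger `new` is than `old`."""
    return new / old


def max_streamed_mtris(bus_mb_s, bytes_per_vertex, verts_per_tri):
    """Upper bound on MT/s if every vertex had to cross the bus each frame."""
    bytes_per_triangle = bytes_per_vertex * verts_per_tri
    return bus_mb_s / bytes_per_triangle  # (MB/s) / (B/triangle) = Mtriangles/s


if __name__ == "__main__":
    print(f"Overall score, Wine vs Win 8.1: {speedup(SCORE_WINE, SCORE_WIN81):.2f}x")
    print(f"Triangle rate, best case:       {speedup(TRI_WINE_BEST_MT, TRI_WIN81_MT):.1f}x")
    print(f"Triangle rate, 8 lights:        {speedup(TRI_WINE_8_LIGHTS_MT, TRI_WIN81_MT):.1f}x")
    limit = max_streamed_mtris(PCI_PEAK_MB_S, BYTES_PER_VERTEX, VERTICES_PER_TRIANGLE)
    print(f"Bus-streaming upper bound:      {limit:.1f} MT/s")
```

Under these assumptions the streaming bound comes out at roughly 4 MT/s, which is below even the 15 MT/s seen in Windows. If the assumptions are anywhere near right, that would mean the geometry is not being pushed across the PCI bus every frame in either OS (it presumably sits in video memory), which fits the idea that the bus alone does not explain the difference.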

The only explanation I can think of is that most of these old benchmarks and games were never a target for modern GPUs and their Windows drivers, and the GPUs themselves were not designed with old games in mind; in fact such games usually run quite "slow" on modern Windows (relative to the hardware's specs). In Linux, even with the added D3D>OGL translation, Wine probably uses lower-level OpenGL calls to drive these GPUs for old-game rendering. Modern Windows also seems to have the DWM (Desktop Window Manager) compositor with "3D" GPU acceleration always on, plus far more background processes. I suppose DX7/8/9 rendering also goes through some "wrapper" logic so that the 3D-accelerated GUI keeps running in the background, or something like that, which might cost speed. That would make sense given how fast modern GPUs are, but it is probably not ideal for old Direct3D games, which were written to have the whole GPU to themselves, I imagine.

Maybe things could get better with the Win Compatibility Tool, but it's not easy to find a good balance of tweaks in it. In any case I would not expect much difference between the two OSes in modern, pixel-shader-heavy benchmarks like Unigine Valley, where everything about the card is the problem, GPU included. But at least for older games, I wonder whether they could run faster.

Any opinions?

Thanks, bye.

Config: 1.9 GHz Atom, 8 GB DDR3, GeForce 210 PCI 512 MB

(1) 3DMark2001SE results with Linux x64, Wine 5.0 and latest NV linux drivers

(2) 3DMark2001SE results with Win 8.1 and latest NV Win drivers