leileilol wrote: V2 never had box filtering. That starts on Banshee/V3

You're probably describing "dither subtraction".

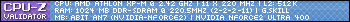

After further investigation I'm no longer sure whether it is dither subtraction, based on this info from my driver:

"Alphablending dither subtraction is disabled by default. Set SSTH3_ALPHADITHERMODE = 3 to enable dither subtraction;

4x4 dither matrics is used when alphablending dither subtraction is enabled. Other wise it's always 2x2"

source: http://www.3dfxzone.it/dir/news/3dfx/koolsmok … kit_27_12_2009/

I tried this and it did not make the screen nice and smooth in either Unreal or MiniGL Quake. I still get the 'dotty' dithering in dark areas, not the 'smooth paste' effect I remember from Voodoos.
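To get a feel for why the matrix size in that driver note might matter in dark areas, here is a rough sketch of plain ordered dithering from 8 bits down to 5 bits per channel. Everything in it is an assumption for illustration: the textbook Bayer matrices, the 5-bit target, and the helper names `dither_to_5bit` and `tile_average` are mine, not the actual tables or logic of the Voodoo hardware or driver, and it does not model dither subtraction itself.

```python
# Textbook Bayer threshold matrices (assumed for illustration only;
# not the real tables used by 3dfx hardware or drivers).
BAYER_2X2 = [[0, 2],
             [3, 1]]
BAYER_4X4 = [[0, 8, 2, 10],
             [12, 4, 14, 6],
             [3, 11, 1, 9],
             [15, 7, 13, 5]]


def dither_to_5bit(value, x, y, matrix):
    """Quantize an 8-bit channel value to 5 bits using ordered dithering."""
    n = len(matrix)
    # Per-pixel threshold in (0, 1), picked by screen position.
    threshold = (matrix[y % n][x % n] + 0.5) / (n * n)
    scaled = value * 31 / 255      # ideal 5-bit level, with a fractional part
    level = int(scaled)            # round down to the nearest 5-bit level
    if scaled - level > threshold:
        level += 1                 # bump up where the fraction beats the threshold
    return min(level, 31)


def tile_average(value, matrix):
    """Average 5-bit level over one matrix-sized tile of a flat colour."""
    n = len(matrix)
    total = sum(dither_to_5bit(value, x, y, matrix)
                for y in range(n) for x in range(n))
    return total / (n * n)


if __name__ == "__main__":
    # Compare how the two matrices render the darkest few shades.
    for v in range(0, 9):
        print(v, tile_average(v, BAYER_2X2), tile_average(v, BAYER_4X4))
```

With these assumed matrices, a 2x2 tile can only represent 4 in-between shades per 5-bit step, while a 4x4 tile can represent 16, so the darkest 8-bit values collapse to black sooner with 2x2. That would only affect how fine the dot pattern is; it would not by itself produce the smooth look, which depends on filtering the pattern back out.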

Check the screenshots in this thread:

http://www.3dfxzone.it/enboard/topic.asp?TOPI … 839&whichpage=2

He shows dither subtraction on and off. Neither screenshot has the 'pastey, smooth Voodoo style'. But he does mention that 24bpp mode is not supported on V2 SLI: "you should try SSTV2_VIDEO_24BPP=1,it improves the IQ quite a bit,but its not compatible with SLI."

So perhaps the smoothness I am missing comes from the 24bpp mode I can't get on SLI... maybe. What do you think?