SPBHM wrote on 2022-05-26, 19:00:

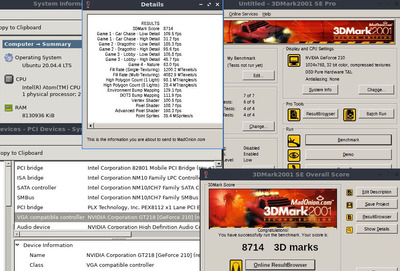

That's a result I got with an 8400GS PCI (only 8 shaders...)

This was with a SiS chipset. I think I remember seeing some decent variation from one motherboard/chipset to another in terms of bandwidth.

I actually used the AIDA64 OpenCL copy test as a reference for the bandwidth. It was normally around 100MB/s on memory copy, but as I said, with some decent variation between platforms.

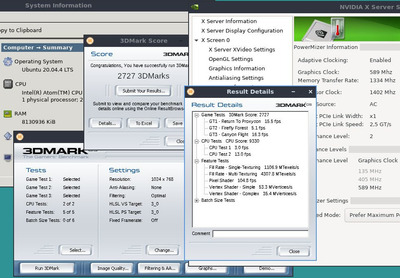

Interesting, even though each different CPU will give different 3DMark2001SE scores here. On the feature tests side, though, I still see the 10MT/s average geometry values. It might be down to the way unified shader architectures, with modern-oriented OS drivers (backward compatible with XP), work with older game engines, and even using XP doesn't change that. If this were only a 3DMark2000/2001 problem it would be understandable, those being so old, but on more modern benchmarks like 3DMark05/06, well, I suppose numbers that low have a different impact.

At this point it'd be interesting to test in XP a PCI bridged card that doesn't have a unified shader design but still fully supports the DirectX 9 API. There I'd expect to see higher geometry and feature test values, considering that the Linux test I did with the equally slow GeForce 210 PCI seems to score high anyway with Wine through OpenGL. In the end the frames/s are real and the same numbers should show up in XP; oh well, I'd expect even higher values.

That might not mean a lot in a real game scenario, where the PCI bus most probably gets very busy very soon, but it's still interesting. As has already been said, it would make sense if some/many old features in modern drivers are kept for compatibility on unified shader GPUs but aren't intended to run fast or to be retrogaming oriented. Probably even the 3DMark06 scenario might be considered old nowadays and be running through a "compatibility" path, maybe not at the OS level, but looking at the XP numbers I wonder if it happens at the driver level.

For example, I've tested Far Cry in D3D9 and OpenGL using Wine, and here the situation seems less different from Win 8.1, with a similar experience. At 1024x768, high details, no AA, frame rates are around 10 to 20 fps with the GeForce 210 PCI, more or less similar to what I remember in Win 8.1. Faster than the onboard GMA GPU, but nothing very surprising, even in Linux. And strangely, even the game's native OpenGL path doesn't seem to improve the frame rate here, which suggests that this is probably a real game scenario where the PCI bus easily becomes busy.
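
To put a rough number on "busy": here is a back-of-the-envelope sketch of how much data the bus could move per frame. It assumes the roughly 100MB/s memory copy figure quoted above is representative (it varies by platform) and, for comparison, the 133MB/s theoretical peak of a conventional 32-bit/33MHz PCI slot, which is my assumption about the slot in use. The function name is just made up for illustration, and this ignores latency and protocol overhead, so treat it as an upper bound only.

```python
def per_frame_budget_mb(bandwidth_mb_s: float, fps: float) -> float:
    """Upper bound on the data (in MB) the bus can move during one frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return bandwidth_mb_s / fps


# Assumption: measured copy rate taken from the AIDA64 figure quoted above.
MEASURED_COPY_MB_S = 100.0
# Assumption: conventional PCI, 4 bytes per transfer at 33.33 MHz.
THEORETICAL_PCI_MB_S = 4 * 33.33

for fps in (10, 20, 30, 60):
    measured = per_frame_budget_mb(MEASURED_COPY_MB_S, fps)
    peak = per_frame_budget_mb(THEORETICAL_PCI_MB_S, fps)
    print(f"{fps:>2} fps: {measured:5.1f} MB/frame measured, {peak:5.1f} MB/frame peak")
```

So at the 10 to 20 fps I see in Far Cry, the bus could carry at most about 5 to 10 MB per frame at the measured rate, and that budget shrinks in proportion as the frame rate goes up. Whether Far Cry really moves that much per frame I can't say without measuring it.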