Reply 80 of 173, by GloriousCow

VileR wrote on 2023-11-19, 20:16: Ah, nice! Yeah, that looks pretty good for such a simple setup. I honestly think some of the features in that script of mine turned out to be overkill (rendering shadow masks at realistic sizes still sucks, for one - typical target resolutions make it look extremely artifacted). Would be nice to have some extra eye-candy, but 'nice to have' is a pretty low priority after all.

Eventually I'd like to implement librashader https://snowflakepowe.red/blog/introducing-li … ader-2023-01-14 which is a nice Rust-based solution that would give MartyPC access to all those tasty Libretro shaders. Those will likely run in a full window. The nice thing about MartyPC's built-in shader is that it's applied by the scaler, so we can have those shader effects at configurable zoom levels and things.

VileR wrote on 2023-11-19, 20:16: I thought I saw a bit of vertical blurring which didn't seem to coincide with the darkening between scanlines. But on second thought, it may be enough to simply make that darkening stronger... 200-line CRTs typically show fully-separated scanlines, so that should increase the 'realism' factor as well.

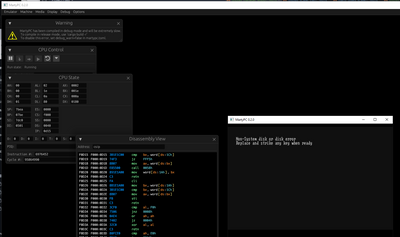

I think I see it now. I've moved aspect correction to the shader and it looks a bit better. Resizing with a shader is still a bit tricky; ideally we would resize in two passes: first to the nearest integer scale with nearest-neighbor filtering, and then a final resize with linear filtering on. This would reduce the amount of blur. I am still scan-doubling 200-line modes in software, though, which does help reduce the blur a bit.
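To make the two-pass idea concrete, here is a minimal CPU-side sketch of the first pass only. It is not MartyPC's scaler: the function names, the one-`u32`-per-pixel buffer layout and the example sizes are all assumptions for illustration, and the second (linear-filtered) pass is left to whatever the GPU sampler does.

```rust
/// Largest whole-number factor at which a `src`-sized image still fits in a
/// `dst`-sized area (never less than 1). Hypothetical helper; assumes the
/// source dimensions are non-zero.
fn integer_scale(src: (u32, u32), dst: (u32, u32)) -> u32 {
    let sx = dst.0 / src.0;
    let sy = dst.1 / src.1;
    sx.min(sy).max(1)
}

/// Pass 1: nearest-neighbor upscale by a whole-number factor.
/// `pixels` is a row-major buffer holding one value per pixel.
fn upscale_nearest(pixels: &[u32], width: usize, height: usize, factor: usize) -> Vec<u32> {
    let mut out = Vec::with_capacity(width * factor * height * factor);
    for y in 0..height * factor {
        // Every output row maps back to exactly one source row.
        let src_y = y / factor;
        let src_row = &pixels[src_y * width..(src_y + 1) * width];
        for x in 0..width * factor {
            out.push(src_row[x / factor]);
        }
    }
    out
}

fn main() {
    // Example sizes only: a scan-doubled 640x400 frame in a 1920x1080 area.
    let factor = integer_scale((640, 400), (1920, 1080));
    println!("integer pass: {}x", factor);

    // Tiny 2x2 image so the result is easy to inspect by eye.
    let doubled = upscale_nearest(&[1, 2, 3, 4], 2, 2, 2);
    println!("{:?}", doubled);
}
```

Because the first pass only duplicates pixels, edges stay sharp; the linear pass then only has to cover the leftover fractional scale, which is where the reduction in blur would come from.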

VileR wrote on 2023-11-19, 20:16: My bad, bungled choice of words there... yeah, dumping works just fine; I meant looking at VRAM in the memory viewer.

Currently in 0.2 you will see VRAM in the memory viewer, but just the first plane. I need to figure out a better interface for choosing what you are going to see.

VileR wrote on 2023-11-19, 20:16: Perhaps there's such a thing as a generic debugging front-end which could somehow be integrated? Admittedly such things often make for bigger headaches than rolling your own from scratch, but who knows... it's also true that Lua scripting engines are everywhere, so maybe that would be more straightforward than I think it is.

One thing that is commonly done is to implement a 'GDB stub', which allows any GDB client to connect to your emulator. VirtualXT does this. That's handy, but unfortunately GDB doesn't support the 8088's segmented memory model, so setting breakpoints is not a lot of fun when you're breaking out the hex calculator every time to calculate a flat address. Despite that, it might still be worth doing.
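For reference, the hex-calculator step is just segment * 16 + offset, wrapped to the 8088's 20 address lines. A small sketch of that conversion follows; the `SegOff` type and `parse_seg_off` helper are made up for this example and are not part of MartyPC, VirtualXT or GDB.

```rust
/// A real-mode segment:offset pair, as a debugger user would type it.
/// Hypothetical type for illustration.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct SegOff {
    segment: u16,
    offset: u16,
}

impl SegOff {
    /// Flat physical address: segment * 16 + offset, wrapped to 20 bits
    /// because the 8088 only has 20 address lines.
    fn to_flat(self) -> u32 {
        (((self.segment as u32) << 4) + self.offset as u32) & 0xF_FFFF
    }
}

/// Parse hex text such as "B800:0010". Returns None on malformed input.
fn parse_seg_off(s: &str) -> Option<SegOff> {
    let (seg, off) = s.split_once(':')?;
    Some(SegOff {
        segment: u16::from_str_radix(seg, 16).ok()?,
        offset: u16::from_str_radix(off, 16).ok()?,
    })
}

fn main() {
    for text in ["B800:0010", "F000:FFF0", "FFFF:0010"] {
        match parse_seg_off(text) {
            Some(addr) => println!("{} -> {:05X}", text, addr.to_flat()),
            None => println!("{} -> not a valid segment:offset", text),
        }
    }
}
```

The mapping only goes one way cleanly: many segment:offset pairs alias the same flat address, so a flat-address client cannot tell which pair you meant.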

VileR wrote on 2023-11-19, 20:16: Oh yeah, you mentioned that 'mysterious console message' in the other thread about the half-height vertical blanking period. 😀 (So was the fix related to the scanline-counting thing, after all?)

Yeah, there were several fixes, to both CGA and CPU emulation, and that was one of them. I wasn't letting a counter overflow that needed to. On the CPU side I needed better HALT and resume-from-halt timings, and the bus sniffer traces helped me find a stupid bug where I wasn't ticking devices during interrupts. I've decided to eventually treat a hardware interrupt like a pseudo-instruction. From a microcode perspective, it pretty much is. Rather than tack the cycles from interrupts onto an existing instruction, eventually you'll see an "intr" mnemonic appear in the instruction history with those cycles. Then there's an interesting period where PIT timer channel #1 is set to '1'. I believe reenigne intended to quickly refresh all of DRAM before setting the counter to 19, but it seems that a value of 1 is too fast and actually disables DRAM refresh entirely. Luckily it's not off long enough to cause problems, but it throws your timing off if you don't emulate that too. I think that's actually what kills Area5150 on the Book8088.
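As background for the counter values mentioned above, here is a rough arithmetic sketch. It assumes the standard IBM PC clocking (CPU at 14.31818 MHz / 3, PIT at 14.31818 MHz / 12, so 4 CPU cycles per PIT tick) and a 304-cycle CGA scanline; the reload of 18 is the usual BIOS default and is included only for comparison. The function name is made up, and this simple model deliberately does not capture the behaviour described above where a reload of 1 appears to stop refresh altogether.

```rust
/// CPU cycles per PIT input tick on the IBM PC (CPU = osc / 3, PIT = osc / 12).
const CPU_CYCLES_PER_PIT_TICK: u32 = 4;

/// CPU cycles per CGA scanline (912 hdots at 3 hdots per CPU cycle).
const CPU_CYCLES_PER_CGA_SCANLINE: u32 = 304;

/// Nominal CPU cycles between DRAM refresh requests for a given PIT
/// channel 1 reload value. Idealized: ignores how the real chip behaves
/// with degenerate reload values such as 1.
fn refresh_period_cpu_cycles(reload: u32) -> u32 {
    reload * CPU_CYCLES_PER_PIT_TICK
}

fn main() {
    for reload in [18, 19, 1] {
        let period = refresh_period_cpu_cycles(reload);
        let aligned = CPU_CYCLES_PER_CGA_SCANLINE % period == 0;
        println!(
            "reload {:>2}: one refresh every {:>2} CPU cycles, whole number per scanline: {}",
            reload, period, aligned
        );
    }
}
```

With a reload of 19 the refresh period is 76 cycles, which divides the 304-cycle scanline exactly; that is presumably why 19 is attractive for cycle-counted video effects, while the default of 18 (72 cycles) drifts relative to the scanline.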

I was writing up a blog article on the process of debugging all that, but it got a little long-winded and cluttered with images. I don't know if I can make it an easier read or if I'll just scrap it...

MartyPC: A cycle-accurate IBM PC/XT emulator | https://github.com/dbalsom/martypc