First post, by mdrejhon

(I'm the founder of Blur Busters and TestUFO. I was writing a reply to the author of the MartyPC emulator, but realized this was worth its own thread.)

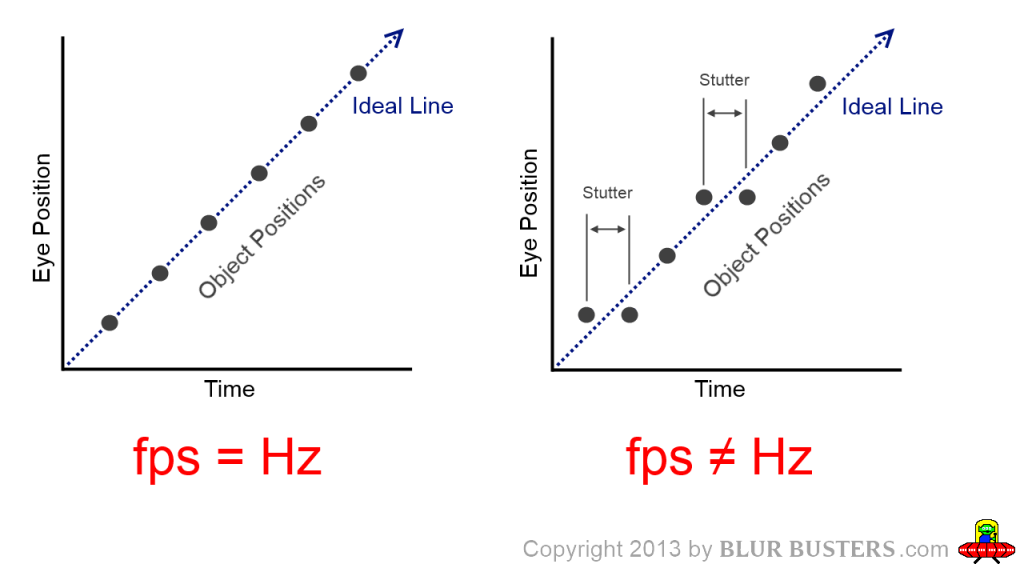

PURPOSE OF POST: Since there isn't an ultra-low-lag DOS emulator yet, this HOWTO is for PC/DOS emulator developers who are implementing beamraced graphics-adaptor engines (e.g. MartyPC) and are considering synchronizing the emulator raster to the real display's raster. Such algorithms can reduce an emulator's input lag to nearly that of the original machine.

Reply to MartyPC emulator developer:

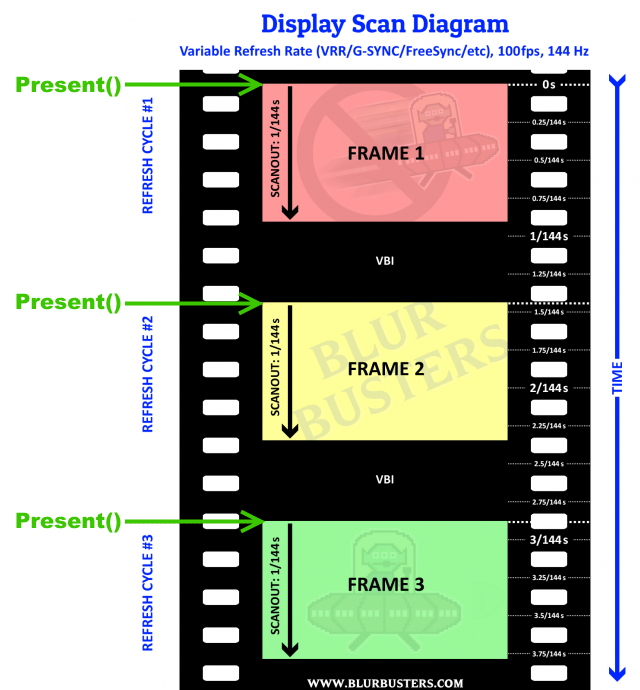

> GloriousCow wrote on 2023-07-01, 18:14:
>
> > mdrejhon wrote on 2023-07-01, 10:24:
> >
> > Some emulators (Toni's WinUAE and Tom Harte's CLK) now use my findings to create a new lagless VSYNC mode, with sub-refresh latencies, by Present()ing frameslices (later blocks of scanlines) to the GPU during VSYNC OFF mode, even while the GPU is already video-outputting earlier scanlines, from an invention of mine called "frameslice beam racing".
>
> This would be extremely cool to try to implement. Right now I'm double-buffering so I know there's a bit of latency, although at 60fps with most old PC titles it's probably not much of a concern(?) But since I'm already drawing the CGA in a clock by clock fashion there's nothing technically stopping me from sending as many screen updates as I want whenever, but I have to see if it would be possible with wgpu/Vulkan. I'm sort of at the mercy of that library, unless I port to another front-end. Can it be done through SDL?

If you can access at least these:

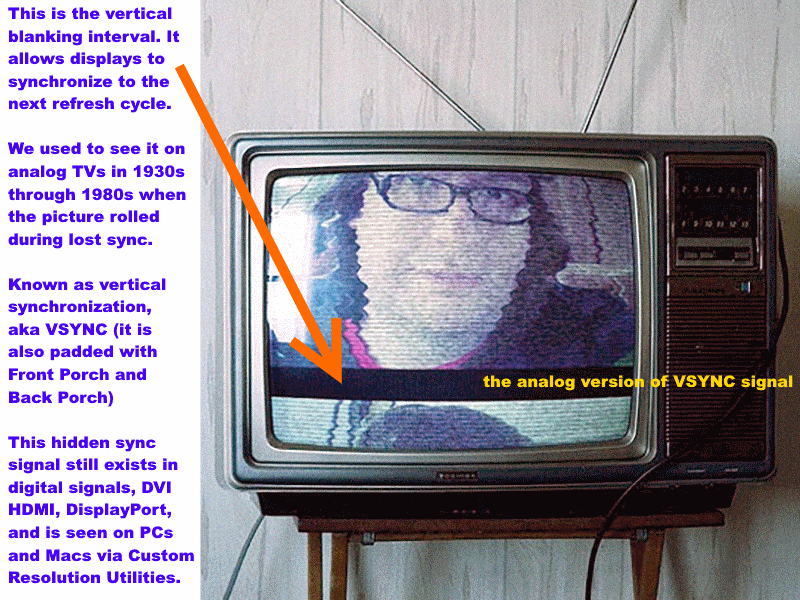

- VSYNC OFF tearing (tearlines = raster)

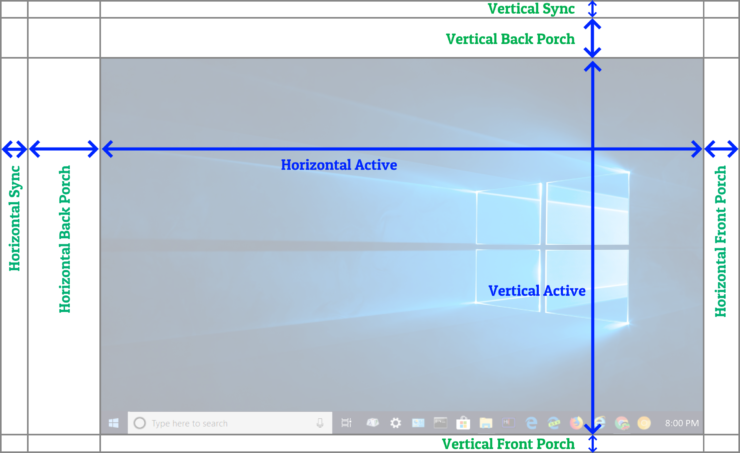

- Vertical Blanking timings OR raster poll

- Microsecond counter (RDTSC, getMicros(), curTime.tv_usec)

- Ability to time reasonably accurately (e.g. CPU busywait loop before frame present+flush).

Then yes. Any API -- Vulkan, DirectX, OpenGL, SDL, etc. Yes, you can "Atari TIA" those APIs!

There's a bit of fun, unsolvable raster jitter (due to the timing imperfections of modern computing), but you can still pull off recognizable beam-racing feats like Kefrens Bars.

A raster poll (e.g. D3DKMTGetScanLine() on Windows systems) is useful, but you can skip it by using time offsets from the vertical blanking intervals. Your raceahead safety margin can absorb the error of not knowing the exact scanline number. VSYNC listeners are available on most platforms (Linux, Android, PC, Mac), and they can provide the timestamps needed to compute an "estimated raster", which lets you place a VSYNC OFF tearline (YouTube video example) close to the real-world raster.
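A minimal sketch of the "estimated raster" idea, written in Python for readability (a real emulator would do this in its native language). It assumes the VSYNC timestamp marks the moment scanline 0 starts scanning out, and that the total line count (visible plus blanking) of the output signal is known; `estimate_scanline` is a hypothetical helper, not a platform API.

```python
def estimate_scanline(now_s: float, last_vsync_s: float,
                      refresh_period_s: float, total_lines: int) -> int:
    """Dead-reckoned scanline index at time now_s.

    Assumes last_vsync_s is the moment scanline 0 began scanning out and
    that all total_lines (visible + blanking) take equal time. A result
    >= the visible height means the beam is in the vertical blanking interval.
    """
    # Modulo keeps the estimate usable even if one or more VSYNC timestamps were missed.
    elapsed = (now_s - last_vsync_s) % refresh_period_s
    return int(elapsed / refresh_period_s * total_lines)


if __name__ == "__main__":
    period = 1 / 60   # 60 Hz
    total = 1125      # e.g. a 1080p60 signal: 1080 visible + 45 blanking lines
    print(estimate_scanline(1 / 120, 0.0, period, total))  # halfway through: prints 562
```

The estimate is only as good as the timestamps and the refresh-period estimate, which is why the raceahead margin has to cover its error.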

It doesn't matter which GPU graphics API you use, as long as you have VSYNC OFF, because you can quickly flush your simple frame out at a precise CPU-busywaited moment, in high/realtime priority mode. You MUST flush, to avoid the asynchronous behaviors of a GPU. The RDTSC timestamp of the return from a flush corresponds to a tearline almost perfectly time-aligned (within a mere 4 pixel rows) to the real-world raster! My GeForce RTX 3080 is more heavily pipelined, and Windows 11 is a little less realtime than Windows 7 was (when Tearline Jedi first ran), so I'm weirdly getting fewer frameslices per second on my RTX 3080 than on my GTX 1080 Ti. There is a high overhead cost to trying to do 5000-8000fps of 1-pixel rows (each vertically stretched between two tearlines).
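The busywait-present-flush step might look like the sketch below. `present` and `flush` are hypothetical callbacks standing in for whatever your graphics API provides, and `time.perf_counter_ns()` stands in for RDTSC or another microsecond counter. Python itself is far too jittery for real beam racing, so treat this as runnable pseudocode; the `flush_jitter_us` helper (also hypothetical) shows one way to turn flush-return timestamps into a jitter figure.

```python
import time
from typing import Callable, Sequence


def busywait_until(target_ns: int) -> int:
    """Spin on the high-resolution clock (no sleep/timer) until target_ns; return the time reached."""
    while True:
        now = time.perf_counter_ns()
        if now >= target_ns:
            return now


def present_frameslice_at(target_ns: int,
                          present: Callable[[], None],
                          flush: Callable[[], None]) -> int:
    """Busywait to target_ns, then present and flush; return the flush-return timestamp.

    `present` and `flush` are hypothetical stand-ins for your graphics API's
    present call and its flush/finish call. The returned timestamp approximately
    marks when (and therefore where on screen) the tearline landed.
    """
    busywait_until(target_ns)
    present()
    flush()
    return time.perf_counter_ns()


def flush_jitter_us(targets_ns: Sequence[int], returns_ns: Sequence[int]) -> float:
    """Spread (max - min) of how late each flush returned vs. its target, in microseconds."""
    lateness = [r - t for t, r in zip(targets_ns, returns_ns)]
    return (max(lateness) - min(lateness)) / 1000
```

A large or growing jitter figure is a reasonable trigger for widening the raceahead margin or warning the user.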

In treating a GeForce like an Atari TIA, I successfully got Kefrens Bars working on both OpenGL and DirectX, in the MonoGame engine, programmed in C#. Here's a video:

Every single "pixel row" of the Kefrens bars is a VSYNC OFF tearline! This screen is impossible to screenshot: if you hit PrintScreen, you only get one pixel row of the Kefrens bars, vertically stretched to full screen height.

(Nomenclature note: "VSYNC OFF" doesn't remove VSYNC from the signal. It simply tells the graphics API to ignore VSYNC when timing output to the video port.)

Watch the crazy fun raster jitter when I move the window.

For Kefrens, I had to intentionally show every single tearline (8000 tearlines per second) to do it.

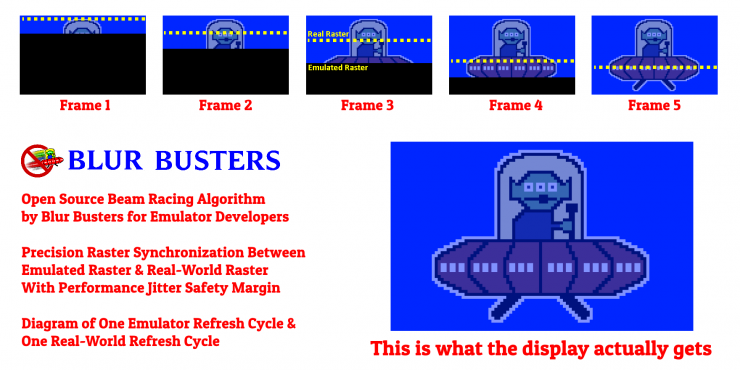

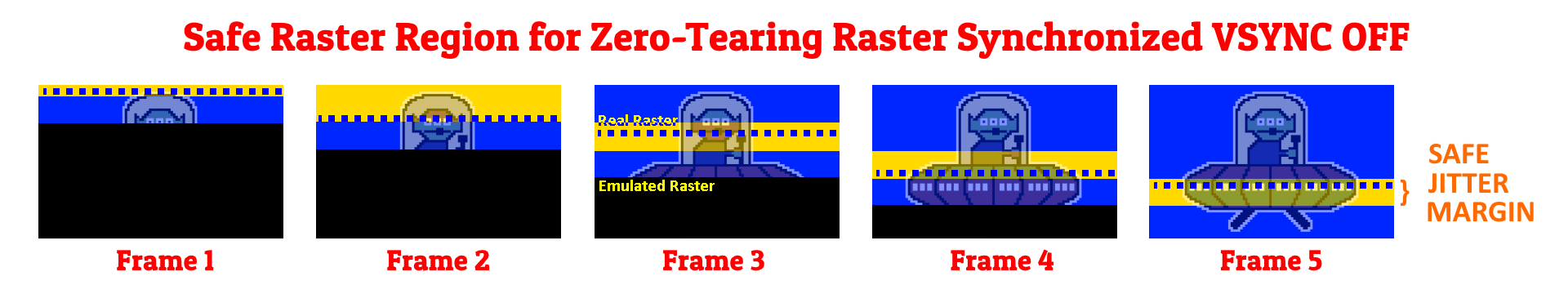

But for emulators, you intentionally hide the tearing. You simply keep the tearing AHEAD of the real-world raster, so you get a perfect tearingless VSYNC OFF mode that looks like VSYNC ON.
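One way to express "keep the tearing ahead of the raster" is as two checks, sketched below. The sketch assumes the emulated and real screens have the same number of visible lines, and that each present contains every emulated scanline so far (the overwrite-a-copy approach from the best practices). Both function names are illustrative only.

```python
def emulate_ahead_target(raster_line: int, slice_height: int,
                         margin_lines: int, visible_lines: int) -> int:
    """Emulated scanline the emulator must have finished (and presented) by now.

    The frameslice containing raster_line + margin_lines must already be on the
    GPU, so the emulator stays at least margin_lines ahead, rounded up to the
    end of that frameslice and clamped to the visible height.
    """
    needed = raster_line + margin_lines
    end_of_slice = (needed // slice_height + 1) * slice_height
    return min(end_of_slice, visible_lines)


def tearline_hidden(raster_at_present: int, prev_slice_end: int, margin_lines: int) -> bool:
    """True if the tearline lands where the old and new buffers already match.

    Every line above prev_slice_end was also in the previously presented buffer,
    so a tearline there joins identical content and is invisible.
    """
    return raster_at_present + margin_lines <= prev_slice_end
```

For example, with 4 frameslices of 270 lines on a 1080-line screen and a 20-line margin, a real raster at line 250 already requires the emulator to have presented through line 540. The price of hiding the tearline is roughly one frameslice plus the margin of extra lag.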

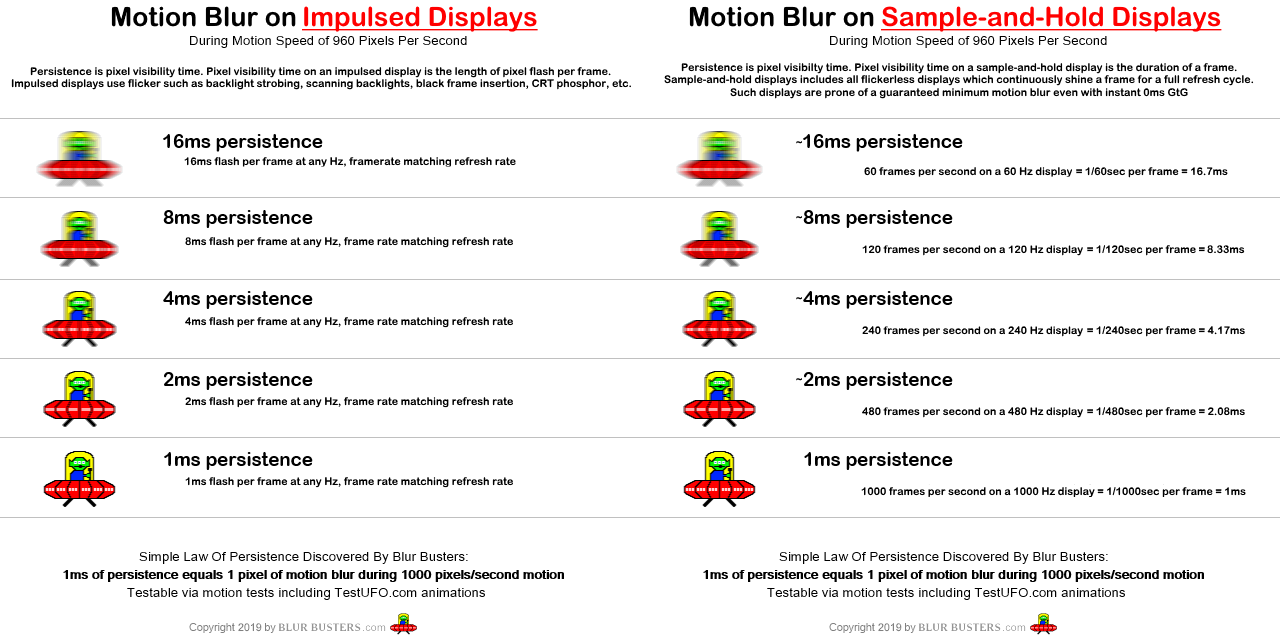

Slow systems (e.g. the GPU on a Raspberry Pi) may only manage low frameslice counts (e.g. 240fps at 60Hz: 4 frameslices per refresh cycle, for about 1/4 refresh cycle of lag). But even that much subrefresh latency is amazing to achieve on a Raspberry Pi.

Fast systems (e.g. NVIDIA or Radeon GPUs) can easily do dozens of frameslices per refresh cycle, sometimes hundreds! My GTX 1080 Ti could do about 100-125 frameslices per refresh cycle, enough for a true-raster Kefrens Bars demo. It's crazy to treat a 2023 GPU like an Atari TIA in C#!
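The arithmetic behind these numbers is simple: each frameslice lasts one refresh period divided by the frameslice count, and the added lag is roughly one frameslice plus your raceahead margin. At 60Hz, 4 frameslices last about 4.17 ms each, while 100 frameslices last about 0.17 ms each. A tiny helper (hypothetical name) makes the trade-off explicit:

```python
def frameslice_budget(refresh_hz: float, slices_per_refresh: int) -> tuple[float, float]:
    """Return (presents per second, milliseconds per frameslice)."""
    refresh_ms = 1000.0 / refresh_hz
    return refresh_hz * slices_per_refresh, refresh_ms / slices_per_refresh


if __name__ == "__main__":
    for slices in (4, 100, 125):
        fps, slice_ms = frameslice_budget(60, slices)
        print(f"{slices:3d} slices/refresh: {fps:6.0f} presents/s, {slice_ms:.3f} ms per frameslice")
```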

This algorithm is already implemented in the WinUAE Amiga emulator, as the "lagless vsync" option.

The algorithm is highly scalable, depending on how performance-jittery your platform is.

Slow systems, or systems running lots of background software, will need larger raceahead margins / taller frameslices.

Fast systems with precise behaviors (clean Windows 10 installs with certain GPUs) can achieve amazing sub-millisecond Present()-to-GPU-signal latency!

It'd be cool to someday add this to RetroPie for low-spec machines, even if it can only do 2-4 frameslices per refresh cycle; that's still subrefresh latency without RunAhead (which is very CPU-hungry).

I have a ~$500 BountySource to add this to RetroArch: https://github.com/libretro/RetroArch/issues/6984

Emulator developers don't usually know how to beam-race modern GPUs, given the massive variance between end-user systems. But you can make it a toggleable ON/OFF option, so people who get too many glitches can simply keep it turned off.

Best Practices for Emulator Developers (new findings as of 2023)

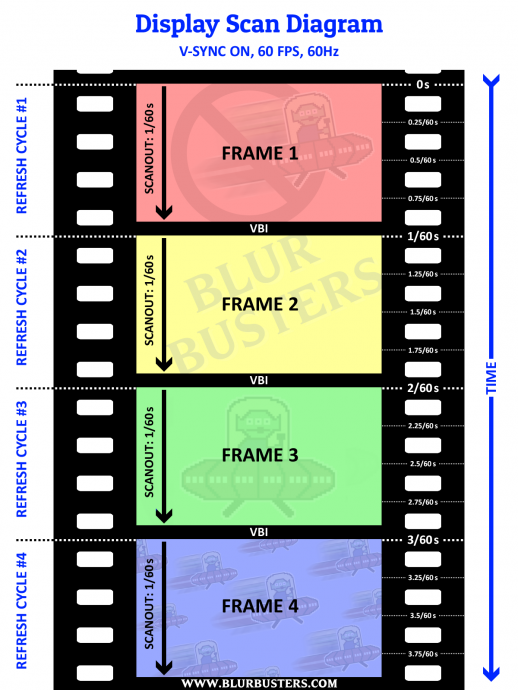

- It only works if you can get (A) intentional tearing, (B) a raster poll, or an estimated raster computed as time offsets from VSYNC, and (C) precise timing via a microsecond counter.

  - For Windows, you typically need full screen exclusive + VSYNC OFF in order to get intentional tearing.
  - For estimating a raster poll as offsets from VSYNC, you need a reliable way to get fairly accurate VSYNC timestamps, whether via a CPU thread, a listener, a VSYNC estimator that averages over several refresh cycles (and ignores missed VSYNCs), a startup VSYNC ON listener that switches to dead-reckoning mode when you switch to VSYNC OFF, etc. There are many ways to estimate a raster poll in a cross-platform manner (with platform-specific wrappers).

- Make it configurable (on/off, frameslice count, raceahead margin, etc.), perhaps conservatively autoconfigured by an initial self-benchmark.

- Use CPU busywaits, not timers. Timers aren't always precise enough.

- Always flush after frame presentation, or make flushing configurable. GPUs are pipelined, so you must flush to get behavior deterministic enough for beam racing. The return from the flush will be approximately time-aligned to the raster position of the tearline, and jitter in the return timestamps can be used to guesstimate raster jitter (and perhaps automatically warn about, or disable, beam racing if it always jitters too much).

- Detect your screen refresh rate and compensate. If your VSYNC dejitterer has a refresh rate estimator, use that! (Examples of existing VSYNC-dejittering estimators include https://github.com/blurbusters/RefreshRateCalculator and https://github.com/ad8e/vsync_blurbusters/blo … /main/vsync.cpp ) It's usually best if the real refresh rate is the same as the refresh rate you want to emulate. If there's a refresh rate mismatch, you can fast-beamrace specific refresh cycles (by running your beamraced emulator engine faster, in sync with the faster refresh cycles). WinUAE does this at 120Hz, 180Hz and 240Hz (NTSC) and at 100Hz, 150Hz and 200Hz (PAL). At 120Hz, it emulator-beamraces every other refresh cycle (so the emulator idles during the alternate refresh cycles).

- (OPTIONAL) Detect your current screen rotation and make sure the real-world scanout direction is the same as the emulator's scanout direction. The signal is only top-to-bottom in default rotation. So if you are an arcade emulator and you want to frameslice-beamrace Galaga, you will have to rotate your LCD computer monitor to get subrefresh latency. There are display rotation APIs on all platforms that you can call to check. For legacy PC emulator developers, this will be largely unnecessary, as emulated PCs always scanned out top-to-bottom, long before any version of Windows supported screen rotation. So simply disable "lagless vsync" whenever the host display isn't in default rotation.
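The VSYNC-estimation and refresh-rate-compensation bullets above might be sketched as follows. This is not the algorithm used by RefreshRateCalculator or vsync.cpp; it is a simple median-based estimator chosen for illustration, plus a check for whether the real refresh rate is an integer multiple of the emulated one (as in WinUAE's 120Hz/240Hz modes). All function names and the 1% tolerance are assumptions.

```python
import statistics
from typing import Optional, Sequence


def estimate_refresh_period(vsync_times_s: Sequence[float]) -> float:
    """Estimate the refresh period from noisy VSYNC timestamps, tolerating missed VSYNCs.

    Assumes most consecutive timestamps are one refresh apart, so the median
    interval is a good first guess; each interval is then credited with the
    whole number of refresh cycles it spans (2 = one VSYNC was missed).
    """
    deltas = [b - a for a, b in zip(vsync_times_s, vsync_times_s[1:])]
    rough = statistics.median(deltas)
    total_time = 0.0
    total_cycles = 0
    for d in deltas:
        cycles = round(d / rough)
        if cycles >= 1:  # ignore spurious near-duplicate timestamps
            total_time += d
            total_cycles += cycles
    return total_time / total_cycles


def beamrace_multiple(real_hz: float, emu_hz: float, tolerance: float = 0.01) -> Optional[int]:
    """Return N if real_hz is within a relative tolerance of N * emu_hz, else None."""
    ratio = real_hz / emu_hz
    n = round(ratio)
    if n >= 1 and abs(ratio - n) <= tolerance * n:
        return n
    return None


def beamrace_this_refresh(refresh_index: int, multiple: int) -> bool:
    """Beam-race one real refresh cycle out of every `multiple`; idle during the rest."""
    return refresh_index % multiple == 0
```

When `beamrace_multiple` returns 2 (e.g. 120Hz real, 60Hz emulated), the emulator would run twice as fast during each beamraced refresh cycle, so its raster keeps pace with the faster real scanout, and then idle during the next one. A `None` result means falling back to ordinary (non-beamraced) presentation.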

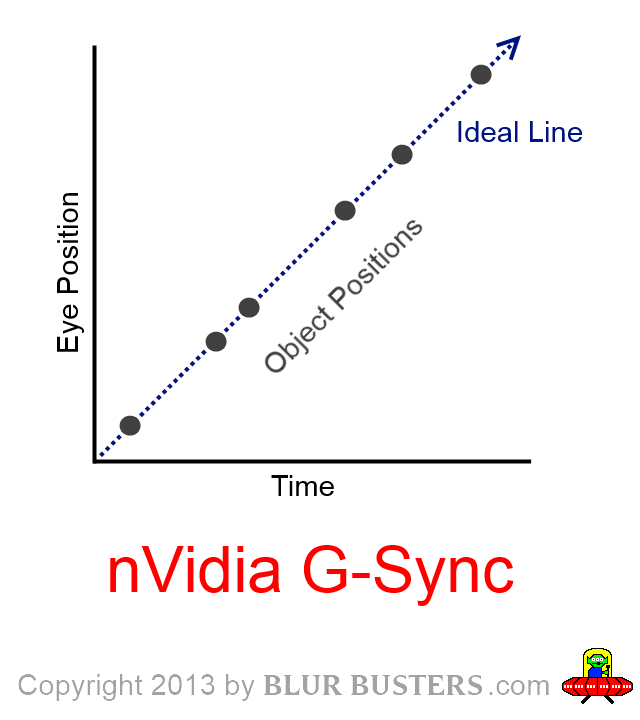

- (OPTIONAL) Detect whether VRR (FreeSync/G-SYNC) is enabled, and warn, turn off, or compensate beamracing if it is. If VRR is enabled, you can disable beamracing, or simply interrupt your VRR refresh cycle via VSYNC OFF. You can still flawlessly emulate multiple refresh rates (e.g. 60Hz and 70Hz) via VRR and still beamrace-sync VRR refresh cycles (with some caveats). WinUAE is currently the only emulator successfully beamracing G-SYNC/FreeSync. How a VRR display refreshes is not initially intuitive to developers who aren't yet familiar with VRR. If you choose to beam-race-sync (lagless vsync) your VRR refresh cycles, you need "GSYNC + VSYNC OFF" in NVIDIA Control Panel. What happens is that the first Present() (while the display is idling, waiting for a new refresh cycle) triggers the display to start the refresh cycle, and subsequent sub-refresh Present() calls will tearline-in new frameslices while the current VRR refresh cycle is scanning out. The OS raster polls (e.g. D3DKMTGetScanLine) also still work on VRR refresh cycles. I do, however, suggest not trying to make your "lagless vsync" algorithm VRR-compatible initially: start with the easy stuff (emuHz=realHz) and iterate in the new capabilities later.

- Turn off power management (Performance Mode), or warn the user if battery-saving power modes are enabled.

- GPU drivers will automatically go into power management if the GPU idles for a millisecond. This adds godawful timing jitter that kills raster effects. Don't use too-low frameslice counts on high-performance GPUs; you only want hundreds of microseconds (or less) to elapse between a return from Flush() and the next Present(). If you must idle the GPU a lot, then prod/thrash the GPU with changed frames (change a few dummy pixels in an invisible area, e.g. ahead of the beam) about 1-2 milliseconds before your timing-accurate frameslice. As a result, raster effects with only a few splits per screen (e.g. splitscreens) will have worse timing than continuous raster effects (e.g. Kefrens), due to the mid-refresh-cycle power management the GPU is doing.

- CPU priority, application priority, and thread affinity help A LOT. "REALTIME" priority is ideal, but be careful not to starve the rest of the system (e.g. mouse driver = sluggish cursor). "HIGH PRIORITY" for both the process and the beamrace present-flush thread is key.

- It's best to overwrite a copy of the previous refresh cycle's emulator framebuffer with new scanlines (and frameslice-beamrace a fragment of that framebuffer), rather than starting from a blank/new framebuffer. That way, raster glitches from momentary performance issues (like a virus scanner suddenly running, and beam racing too late) will show as simple intermittent tearing artifacts instead of black flickers.

- The first few scanlines of a refresh cycle are pretty tricky because of background processing done by desktop compositors like Windows dwm.exe. I can't ever get a tearline to appear in the first ~30 scanlines on my GeForce RTX on Windows 11. Factor this in, possibly by using a larger raceahead margin, taller frameslices, or variable-height frameslices (taller at the top of the screen).
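Variable-height frameslices (taller at the top of the screen) could be planned like this minimal sketch. The heights are illustrative, and `frameslice_boundaries` is a hypothetical helper that assumes emulated and real scanlines map one-to-one.

```python
def frameslice_boundaries(visible_lines: int, slice_height: int,
                          top_slice_height: int) -> list[tuple[int, int]]:
    """Split the visible area into [start, end) frameslices, with a taller first slice.

    The taller top slice is meant to cover the region near the top of the
    refresh cycle where compositor activity makes tearlines hard to place.
    """
    bounds = [(0, min(top_slice_height, visible_lines))]
    start = bounds[0][1]
    while start < visible_lines:
        end = min(start + slice_height, visible_lines)
        bounds.append((start, end))
        start = end
    return bounds


if __name__ == "__main__":
    # 1080 visible lines: one 200-line top slice, then 100-line slices, last one shorter.
    for start, end in frameslice_boundaries(1080, 100, 200):
        print(start, end)
```

Keeping the slices contiguous matters: each boundary is where the next Present() picks up, so a gap would leave scanlines that never get beamraced.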

(P.S. On a related "beam racing topic" - if anyone wants to create the world's first crossplatform rasterdemo for a future democomp (superior to my incomplete WIP Tearline Jedi), I'm happy to help out, as long as I'm in the credits. Though, it's tough if you can't predict the demo platform, and you don't have much time to do impressive graphics when turning a GPU into a TIA, especially at only 25-50-100-200 "pixel rows" in Kefrens, depending on how performant the platform is. But this is crossplatform Kefrens!)

Hope my tips help your emulator development!

I must admit, though, that input lag on an IBM 5150, especially in a demoplayer emulator, is probably not a high priority. However, if your emulator veers into a wide variety of emulation (e.g. Keen, Wolfenstein 3D and DOOM), then you should consider adding this lagless vsync mode. A lot of fast-twitch games (FPS, fighting games, pinball, etc.) really benefit from these latency reductions.

Then you get to toot your horn as the "world's first subrefresh-latency DOS emulator!" and "Emulation-to-photons within 1ms of original machine latency!"

Founder of www.blurbusters.com and www.testufo.com

- Research Portal

- Beam Racing Modern GPUs

- Lagless VSYNC for Emulators