First post, by feipoa


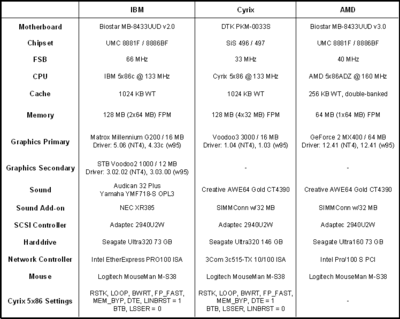

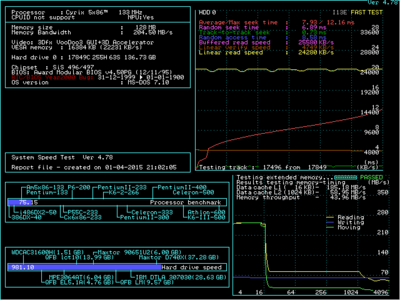

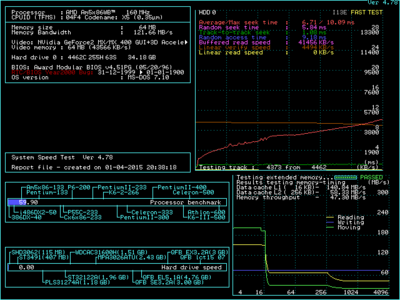

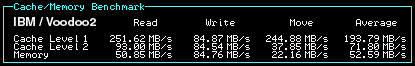

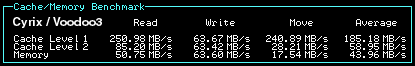

The term 486 is used loosely here to mean non-Pentium Socket 3 systems. I have three cased Socket 3 systems with the hardware noted in the following table.

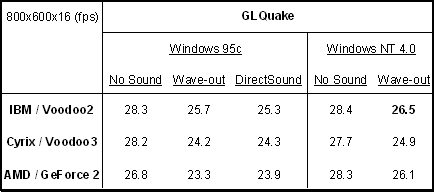

I first looked at GLQuake's timedemo 1 results (without sound) at 800x600 in NT4 and Win95c. Each system performed at around 28 fps. When sound was enabled, wave-out sound showed a slight improvement over DirectSound, and results in NT4 were slightly better than in Win95c. With the GeForce 2, however, DirectSound had a slight benefit over wave-out.

For the overall best GLQuake score with sound, the IBM 5x86c-133 system with a 66 MHz FSB and the Voodoo2 took the lead at 26.5 fps. The AMD 5x86-160 with the GF2 was a close second at 26.1 fps, while the Cyrix 5x86-133 with the Voodoo3 brought up the rear at 24.9 fps. Note that the GF2 will not function properly with Cyrix/IBM 5x86 chips, and the Voodoo3 will not function in UMC8881-based motherboards. I had hoped to reach the 30 fps threshold on the IBM/Voodoo2 system, but that goal seems unattainable.

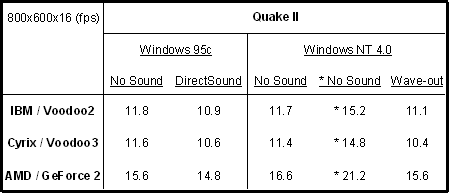

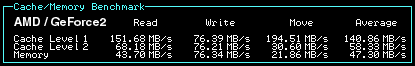

Looking at the Quake II results, what stands out most is the incredible jump in performance of the AMD/GF2 system compared to the Voodoo2 and Voodoo3 systems. What technology does the GF2 have that enables such a performance hike in Quake II but not in GLQuake?

In an attempt to make Quake II run faster on a 486, I ran a benchmark with these settings: gl_flashblend 1, cl_gun 0, cl_particles 0, gl_dynamic 0. This resulted in 21.2 fps without sound.
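For anyone wanting to try the same tweaks, the four settings could be kept together in a small config file, roughly as sketched here. The file name `486bench.cfg` is only a placeholder, and the comments are my reading of what each cvar does rather than anything measured in this thread.

```
// 486bench.cfg (hypothetical file name)
// Reduced-detail settings as listed in the post.

gl_flashblend 1   // presumably draws dynamic lights as simple blended flashes
cl_gun 0          // presumably hides the first-person weapon model
cl_particles 0    // cvar name as given in the post; intended to turn off particles
gl_dynamic 0      // presumably disables dynamic lighting updates
```

Placed in the baseq2 folder, a file like this would be loaded from the console with `exec 486bench.cfg` before starting the benchmark run.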

* gl_flashblend 1, cl_gun 0, cl_particles 0, gl_dynamic 0

Resolution at 800x600x16. Results in frames per second. Default Quake settings used. GLQuake MiniGL v1.40. Quake II MiniGL v1.46.
