Reply 60 of 264, by robertmo


Riva 128 (1997) 4 MB $100

Riva TNT (1998) 16 MB

GeForce (1999) 32 MB $100


GPUs are getting stronger while games are getting worse. I don't care about 2000 FPS and visual quality if the content is unoriginal, the outcome is buggy and the microtransactions "force" gamers to pump more money for a better experience.

A few decent titles aren't worth $700 right now. Most people will wait a few years anyway until the price drops to a sane level, and they'll use midrange and older high-end cards until then. Overclockers and hardcore gamers have already overwhelmed the system with preorders, so interest is high as usual.

If only triple-A developers were that enthusiastic about quality...

I'll be fine with my $150 GTX 1080 for a while.

Am I excited for this new tech?

Not really.

I've always been lagging behind when it comes to computer hardware.

Actually, I'm very happy with the RX 570 and RX 580 in my PCs. I think Just Cause 2 looks amazing, and that game is 10 years old.

I have to give credit to Omnividia Consumer Products for working the hype machine, though.

Does everyone remember the RTX 2080, the card that brought us ray tracing and DLSS?

Well, I hope anyone who bought one got their money's worth. I'm not saying that ray tracing or DLSS is a bad thing, but the support it got from game developers was (understandably) abysmal. These things take a lot of time to get adopted, and what you got for spending a lot on an RTX 2080 was a minor upgrade in performance (and sometimes not even that) over the GTX 1080.

https://www.youtube.com/watch?v=tu7pxJXBBn8

I advise never to listen to those graphics whores at Digital Foundry, by the way.

Procyon wrote on 2020-09-21, 09:23: I advise never to listen to those graphics whores at Digital Foundry, by the way.

When buying GPUs, graphics whores are who you should listen to.

You shouldn't listen to Digital Foundry because they agreed to showcase the Nvidia RTX 3080 entirely on Nvidia's terms.

Quadrachewski wrote on 2020-09-21, 08:08: GPUs are getting stronger while games are getting worse. I don't care about 2000 FPS and visual quality if the content is unoriginal, the outcome is buggy and the microtransactions "force" gamers to pump more money for a better experience.

If you're looking at games with microtransactions, you're looking at the wrong place if you want quality games.

ZellSF wrote on 2020-09-21, 10:17: If you're looking at games with microtransactions, you're looking at the wrong place if you want quality games.

They simply ran out of good ideas, so they started reusing old ones. Of course, gamers have grown used to interesting, high-quality games, so it's natural that some of them have higher expectations. Short deadlines lead to poor ideas, an endless stream of titles, less time for bug fixing, and more focus on quantity than quality.

It's a subjective matter, but I'm still not investing in expensive hardware for the sake of 2-3 good titles a year, and I'm not talking about the 32nd (!) COD or the 16th GTA episode. Developers are not even trying anymore; no wonder so many people return to old classics that require a GeForce 2 or a Radeon 3850 at most.

To each their own.

I'm going to upgrade my 1050 Ti to a 3080 (along with my i5-7500 to a Ryzen, of course a motherboard + PSU upgrade, and replacing my modern gaming case with a "modern" sleeper case) just to play a couple of newer games that I'm interested in. Most stuff put out nowadays is complete garbage, so it's a bottom priority for me. I'd rather just focus on collecting old computer stuff and big-box PC games.

Can we rename this thread to "Who's excited for Big Navi?"

Until AMD bellies up to the bar, prices are going to be stupid.

Once men turned their thinking over to machines in the hope that this would set them free. But that only permitted other men with machines to enslave them. - Reverend Mother Gaius Helen Mohiam

Quadrachewski wrote on 2020-09-21, 11:23: They simply ran out of good ideas, so they started reusing old ones. Of course, gamers have grown used to interesting, high-quality games, so it's natural that some of them have higher expectations. Short deadlines lead to poor ideas, an endless stream of titles, less time for bug fixing, and more focus on quantity than quality.

It's a subjective matter, but I'm still not investing in expensive hardware for the sake of 2-3 good titles a year, and I'm not talking about the 32nd (!) COD or the 16th GTA episode. Developers are not even trying anymore; no wonder so many people return to old classics that require a GeForce 2 or a Radeon 3850 at most.

To each their own.

Everything you're complaining about in this post was prevalent back then. Personal bias just blinds you to this.

Which is fine, but I still want to reiterate that you're missing out on some great games because you've already decided not to look for them.

luckybob wrote on 2020-09-23, 07:15: Can we rename this thread to "Who's excited for Big Navi?"

Until AMD bellies up to the bar, prices are going to be stupid.

According to Gamers Nexus, this isn't a paper launch and more cards will be available pretty quickly.

Count me out of being excited for Big Navi anyway; dgVoodoo compatibility problems mean I won't be getting an AMD card.

Nvidia sent Linus THAT TV and only one card 😉

https://www.youtube.com/watch?v=JDUnSsx62j8

ZellSF wrote on 2020-09-23, 07:52: ...Count me out of being excited for Big Navi anyway; dgVoodoo compatibility problems mean I won't be getting an AMD card.

That seems like quite a specific reason to rule out the entire AMD range. Considering you can emulate Glide on a basic GeForce 6200 (I think it was in one of Phil's many videos) and surely pretty much everyone on this site has cards of that ilk, it seems weird to rule out 50% of graphics cards for this one very specific feature when it may save you hundreds of pounds over an Nvidia offering. I also find it hard to believe that even a low-end Big Navi will struggle to emulate a 20-year-old API!

Almoststew1990 wrote on 2020-09-23, 21:36: ZellSF wrote on 2020-09-23, 07:52: ...Count me out of being excited for Big Navi anyway; dgVoodoo compatibility problems mean I won't be getting an AMD card.

That seems like quite a specific reason to rule out the entire AMD range. Considering you can emulate Glide on a basic GeForce 6200 (I think it was in one of Phil's many videos) and surely pretty much everyone on this site has cards of that ilk, it seems weird to rule out 50% of graphics cards for this one very specific feature when it may save you hundreds of pounds over an Nvidia offering. I also find it hard to believe that even a low-end Big Navi will struggle to emulate a 20-year-old API!

dgVoodoo isn't just a Glide wrapper, it's a Direct3D wrapper. It's the only way to play a lot of old games at higher resolutions, or with better scaling (for 2D games). Using multiple GPUs is a pain.

The word "struggle" is also weird. It's a compatibility issue, not a power issue.

1997 Riva 128 4 MB

1998 Riva TNT 16 MB

1999 GeForce DDR 64 MB

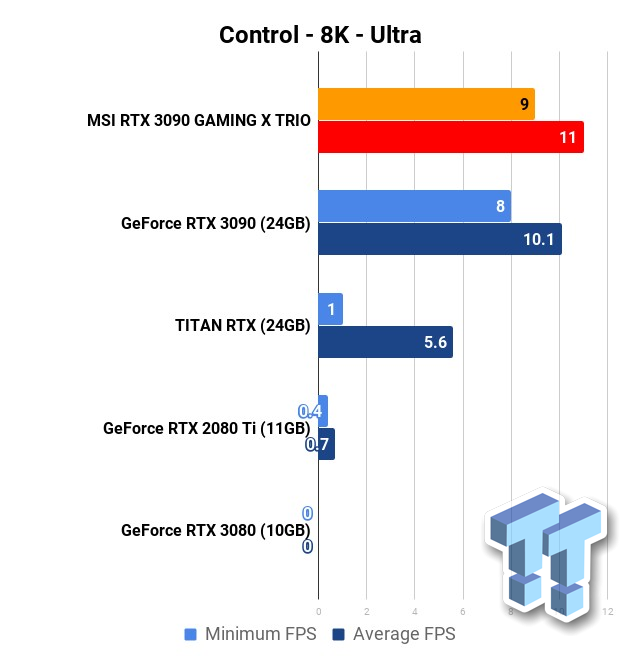

3090 reviews

https://wccftech.com/review/nvidia-geforce-rt … -true-bfgpu/11/

https://www.tweaktown.com/reviews/9603/msi-ge … trio/index.html

Looks like Vulkan games get the biggest jump.

240 fps at 1440p in Doom Eternal

It's also pretty neat to see that even at 1080p you can still get a benefit.

😀

The funny thing is, even if you could use four 3080 cards in SLI, it would give 0 fps too 😀

I don't see why I should buy such a thing.

We paid €200 or so for a GeForce 3 Ti back then, and it really was the fastest thing you could buy.

But now prices are rising to insane levels.

Even a shitty old 1050 Ti still sells at a price close to a 1660's.

I get that 2K on, say, a 32" or larger monitor may not be sharp enough for some people and that 4K might be preferable, even though I am happy with 2K. 8K, however, I don't get at all, unless one wants to use a really big monitor or TV and sit so close to it that the difference is actually distinguishable. I have a 4K 55" TV, and I don't think I would be able to see a difference between it and an 8K TV unless I was less than 2 feet away.

Do I need new eyeballs and possibly a new brain, or is 8K getting close to pointless for most use cases?
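For a rough sense of darry's 55" numbers, here is a small Python sketch. It assumes a 16:9 panel and the common rule of thumb that 20/20 vision resolves about 1 arcminute, i.e. roughly 60 pixels per degree; that threshold and the helper names are assumptions for illustration, not something anyone in the thread measured, and real perception also depends on content, contrast and eyesight.

```python
import math

# Assumption: 16:9 panel and a visual-acuity limit of ~60 pixels per degree
# (1 arcminute per pixel, a common rule of thumb for 20/20 vision).
ACUITY_PPD = 60.0


def pixel_pitch_in(diagonal_in, h_pixels, aspect=(16, 9)):
    """Width of one pixel in inches for a panel of the given diagonal."""
    aw, ah = aspect
    width_in = diagonal_in * aw / math.hypot(aw, ah)
    return width_in / h_pixels


def pixels_per_degree(diagonal_in, h_pixels, distance_in):
    """Pixels covered by one degree of visual angle at the screen centre."""
    span_in = 2 * distance_in * math.tan(math.radians(0.5))
    return span_in / pixel_pitch_in(diagonal_in, h_pixels)


def acuity_distance_in(diagonal_in, h_pixels, ppd_limit=ACUITY_PPD):
    """Distance beyond which the panel exceeds ppd_limit pixels per degree."""
    pitch = pixel_pitch_in(diagonal_in, h_pixels)
    return ppd_limit * pitch / (2 * math.tan(math.radians(0.5)))


if __name__ == "__main__":
    for name, px in (("4K", 3840), ("8K", 7680)):
        for feet in (2, 4, 8):
            ppd = pixels_per_degree(55, px, feet * 12)
            print(f'55" {name} at {feet} ft: {ppd:.0f} pixels/degree')
        print(f"  {name} passes {ACUITY_PPD:.0f} px/deg beyond "
              f"{acuity_distance_in(55, px) / 12:.1f} ft")
```

Under those assumptions the 55" 4K panel passes 60 pixels per degree at about 43 inches (a bit over 3.5 ft), and an 8K panel of the same size at about half that. So the 1-arcminute rule puts the crossover somewhat farther out than darry's 2 ft guess, though still well inside typical TV viewing distances.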

DosFreak wrote on 2020-09-26, 18:24:

Factory overclocked cards are such a bad idea in the first place.

darry wrote on 2020-09-26, 17:25: I get that 2K on, say, a 32" or larger monitor may not be sharp enough for some people and that 4K might be preferable

4K is an improvement over "2K" even with a 27" monitor.

darry wrote on 2020-09-26, 17:25: Do I need new eyeballs and possibly a new brain, or is 8K getting close to pointless for most use cases?

You might not need an 8K display, but rendering at 8K can have lots of advantages that will be very noticeable at larger distances/smaller display sizes.

robertmo wrote on 2020-09-25, 05:19:

I'm more interested in whether the 3080 fails to render something the 3090 can render at playable framerates. That will say something about whether 10 GB of VRAM is a problem.

ZellSF wrote on 2020-09-26, 18:59: 4K is an improvement over "2K" even with a 27" monitor. […]


I am not saying a higher resolution isn't noticeable. Distinguishing 4K from 2K on a 27" display is definitely possible. I used 32" as an example because that is what I happen to use, and I would not be against upgrading it to 4K someday, though I see no need to hurry, which is, of course, based on my personal, subjective opinion/taste.

The point I am trying to make, or more accurately the question I have, is just how noticeable the jump to 8K will be. I have my doubts about the "very noticeable" aspect, especially if rendering is done at 8K only to have the result downsampled for display on a lower-resolution screen (effectively anti-aliasing, which is great, but also less and less useful as resolution increases).

In other words, considering that there are diminishing returns at each jump in resolution (320x200 or less --> SD --> 2K --> 4K --> 8K), I am seriously questioning the value that 8K rendering and/or display will bring to me and others at normal monitor or TV viewing distances, versus the computational/financial/environmental cost.
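The "render at 8K, then downsample" idea discussed above is essentially supersampling anti-aliasing. A minimal Python sketch, assuming a single-channel image and a plain box filter (drivers and games may use other sample patterns and filters; the function names are hypothetical), also shows the cost side darry raises: every doubling of resolution per axis quadruples the number of shaded samples.

```python
# Minimal sketch of ordered-grid supersampling: render at a multiple of the
# display resolution, then average each block of samples with a box filter.
# Assumption: a plain box filter on a single-channel image.


def box_downsample(image, factor):
    """Average each factor x factor block of `image` (a list of rows) into one pixel."""
    h, w = len(image), len(image[0])
    if h % factor or w % factor:
        raise ValueError("image dimensions must be multiples of factor")
    out = []
    for y in range(0, h, factor):
        row = []
        for x in range(0, w, factor):
            block = [image[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def shading_cost_ratio(render_res, display_res):
    """How many samples are shaded per displayed pixel."""
    (rw, rh), (dw, dh) = render_res, display_res
    return (rw * rh) / (dw * dh)


if __name__ == "__main__":
    # A hard diagonal edge rendered at 4x4 (1.0 = lit, 0.0 = dark).
    edge = [[0.0, 1.0, 1.0, 1.0],
            [0.0, 0.0, 1.0, 1.0],
            [0.0, 0.0, 0.0, 1.0],
            [0.0, 0.0, 0.0, 0.0]]
    print(box_downsample(edge, 2))  # [[0.25, 1.0], [0.0, 0.25]]
    print(shading_cost_ratio((7680, 4320), (3840, 2160)))  # 8K -> 4K: 4.0
    print(shading_cost_ratio((7680, 4320), (2560, 1440)))  # 8K -> 1440p: 9.0
```

The edge pixels come out with intermediate values, which is the smoothing effect. The 8K-to-4K case shades 4 samples per displayed pixel and 8K-to-1440p shades 9; the "2K" in the thread is assumed here to mean 2560x1440.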

I am not against progress, but I feel this is starting to look like the (IMHO largely pointless) megapixel race in the digital camera world. There is more to digital camera image quality than megapixels (dynamic range, noise performance, autofocus performance and accuracy, etc.), and there is more to video game quality than rendering/display resolution (display and processing pipeline color depth, light/shadow rendering algorithms, texture resolution, frame rate, 3D model detail, etc.).

EDIT: Is increasing resolution beyond 4K the most "bang for the buck" path towards a better visual quality gaming experience?