ZellSF wrote on 2021-06-30, 22:59:

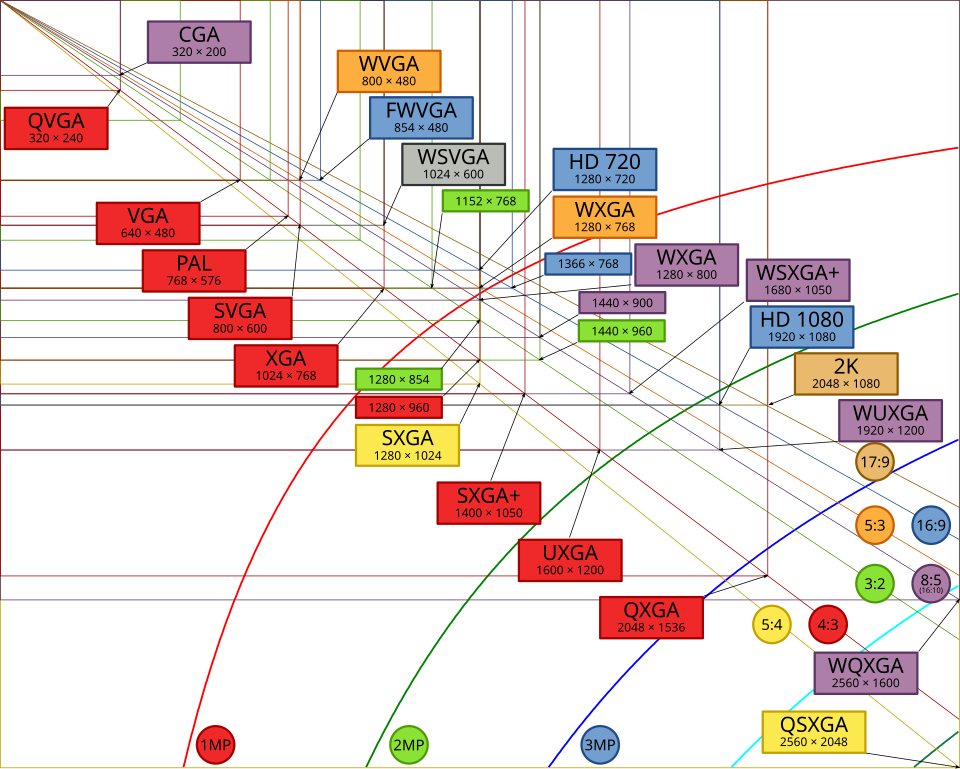

Not that I understand why 1280x1024 was a thing in the first place.

Historically, the only reason for the variety of resolutions was memory use or timing limits.

640x200 (at 1 bit per pixel) fits perfectly in 16 KB.

The same goes for Hercules and the Japanese 640x400.

Even the 8 bit oddities matched memory layout.

Even Sun's high-end 1024x1024 display was sized 100% to fit in memory.
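The "fits in memory" claims above are easy to sanity check. Here is a rough sketch in Python, assuming 1 bit per pixel for every mode, 720x348 for Hercules, and 32 KB / 128 KB buffers for the bigger modes (none of these are stated in the post, so treat them as assumptions). `framebuffer_bytes` is just a made-up helper name.

```python
def framebuffer_bytes(width, height, bits_per_pixel=1):
    """Bytes needed for one packed frame (hypothetical helper)."""
    return width * height * bits_per_pixel // 8

KIB = 1024

# (label, width, height, assumed buffer size in KiB), all at an assumed 1 bpp
modes = [
    ("640x200", 640, 200, 16),
    ("Hercules 720x348 (assumed)", 720, 348, 32),
    ("Japanese 640x400", 640, 400, 32),
    ("Sun 1024x1024", 1024, 1024, 128),
]

for label, w, h, mem_kib in modes:
    used = framebuffer_bytes(w, h)
    total = mem_kib * KIB
    # Show how much of the assumed buffer each mode fills
    print(f"{label}: {used} of {total} bytes ({used / total:.1%})")
```

Under those assumptions every mode lands just under its buffer size, and 1024x1024 comes out at exactly 128 KiB.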

640 wide only exists because 80 columns was a thing (80 characters x 8 pixels each), for some reason matching old 7-pin printers.

Once IBM released 640x480 (150 KB), it broke any relationship between efficiency and the frame buffer. It makes absolutely no technical sense, as it wastes almost half of the frame buffer. One could argue it was designed so that 320x200 could have multiple pages, but that’s an afterthought, since most people spent their time in 640x480.
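To put a number on "almost half", here is a small sketch. It assumes the 16-color (4 bits per pixel) 640x480 mode against the 256 KB of a standard VGA, and 8 bits per pixel for the 320x200 page count. The bit depths are assumptions, since the post only gives the 150k figure.

```python
VGA_MEMORY = 256 * 1024      # bytes of video memory on a standard VGA
BPP_640x480 = 4              # assumption: 16-color mode
BPP_320x200 = 8              # assumption: 256-color mode

used = 640 * 480 * BPP_640x480 // 8
unused_fraction = 1 - used / VGA_MEMORY

# How many whole 320x200 pages would fit in the same memory
page_bytes = 320 * 200 * BPP_320x200 // 8
pages_320x200 = VGA_MEMORY // page_bytes

print(f"640x480 uses {used // 1024} KiB, leaving {unused_fraction:.0%} unused")
print(f"320x200 pages that fit: {pages_320x200}")
```

So roughly 41% of the buffer sits idle in 640x480, while the same memory holds several 320x200 pages.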

IBM never mentioned a 4:3 aspect ratio until 5 years after VGA was out, so it was likely just to match newer screens' capabilities and retain the 640/80-column relationship.

The “SVGA” resolutions actually followed what was being done years earlier with fixed-frequency displays. 1024x??? was popular since it fits common memory sizes.

I.e. 1024x768 fits into 384 KB, 768 KB or even 2.25 MB (yes, I have a 2.25 MB video card).
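Those three sizes fall straight out of the arithmetic if you assume 4, 8 and 24 bits per pixel. The depths are an assumption (the post only lists the memory sizes), and 1 bpp is thrown in for comparison:

```python
WIDTH, HEIGHT = 1024, 768

# Frame size in KiB for each assumed color depth
sizes_kib = {
    bpp: WIDTH * HEIGHT * bpp // 8 // 1024
    for bpp in (1, 4, 8, 24)
}

for bpp, kib in sizes_kib.items():
    print(f"{WIDTH}x{HEIGHT} @ {bpp:>2} bpp: {kib} KiB ({kib / 1024:.2f} MiB)")
```

At 24 bpp that is 2304 KiB, which is exactly the odd-looking 2.25 MB.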

800x560 in fixed-frequency land became 800x600, another non-compliant res, but that actually came after 1024x768.

1280x1024 fits in 640 KB in fixed-frequency land, and that then carried over to the PC.

1280x960 existed as well but was not popular since it wastes more memory for no gain. Many in the industry didn’t care about aspect ratio, especially on dedicated applications where you needed a certain amount of screen real estate.

1600x1280 was a popular fixed-frequency monochrome screen resolution because it fit in the buffer better than 1600x1200. I say this because I owned a Cornerstone mono screen from a bank that ran 1280 lines instead of 1200.

In other words, back in the day you either needed color at potato resolution or you needed raw resolution in strict B&W.

1600x1280 in 1-bit “color” fits almost perfectly in 256 KB of memory (it needs 250 KB).
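For the two "taller vs. 4:3" pairs above, a quick comparison sketch. It assumes 4 bits per pixel for the 1280-wide modes (which is what makes 1280x1024 come out at 640 KB) and 1 bit per pixel for the 1600-wide mono modes. `kib_needed` is a made-up helper name.

```python
def kib_needed(width, height, bits_per_pixel):
    """Frame size in KiB for a packed frame buffer (hypothetical helper)."""
    return width * height * bits_per_pixel / 8 / 1024

# (width, height, assumed bpp, buffer size in KiB)
comparisons = [
    (1280, 1024, 4, 640),
    (1280, 960, 4, 640),
    (1600, 1280, 1, 256),
    (1600, 1200, 1, 256),
]

for w, h, bpp, buf in comparisons:
    need = kib_needed(w, h, bpp)
    # Idle memory is whatever the mode leaves unused in the buffer
    print(f"{w}x{h} @ {bpp} bpp: {need:g} KiB of {buf} KiB, {buf - need:g} KiB idle")
```

In both pairs the taller mode leaves less of the buffer idle, which is the whole argument for 1280x1024 and 1600x1280.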