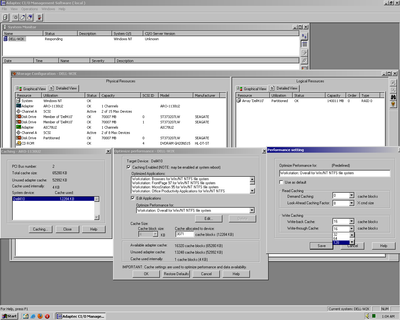

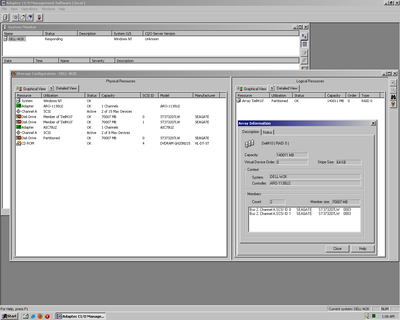

I have made some progress on this effort. It seems the bootable RAID setup diskette doesn't expose all the array configuration features, even if you choose the custom build. The Express RAID building option does apply some settings, but they remain hidden from the user. You get basic optimisation choices like "Database application", "technical/graphics application", and "other", but it won't tell you what cache settings it is using. As for the block size, it seems that "Database application" uses 64K, while "technical" uses 32K. "Custom" is fixed at 64K.

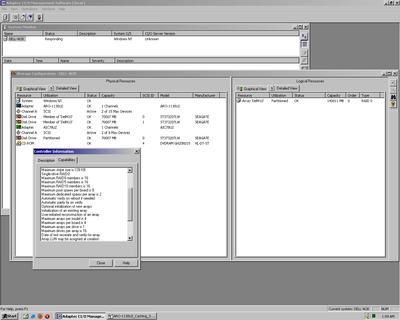

To view the cache settings, you have to install the Adaptec CI/O Management Software. v4.01 is available on the Adaptec website: https://storage.microsemi.com/en-us/support/_ … _raid/cio_4.01/

v4.02 can be found from Dell: https://delldriverdownload.info/precision-410 … nt-4-0-drivers/

The Adaptec website also mentions a version 4.03, but trying to download it gives a "file not found" error.

https://storage.microsemi.com/en-us/support/_ … _raid/cio_4.03/

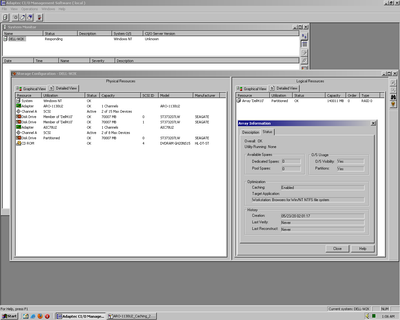

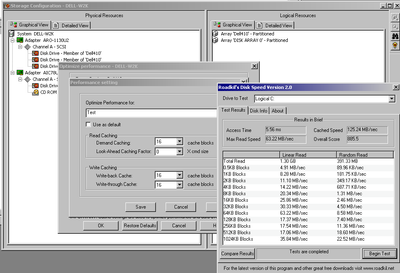

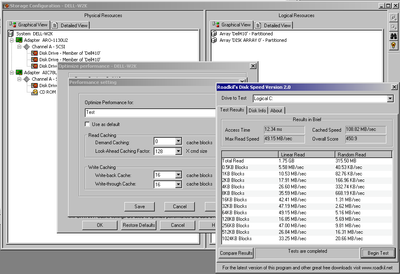

Here are some of the visible options in the software. It works in NT4, W2K, and XP. It supposedly works in Win9x too, but there aren't any ARO-1130U2 RAID drivers for Win9x.

The default cache size per array is 12284 KB (12 MB minus 4 KB). This can be increased to 65532 KB (64 MB minus 4 KB).

There are various pre-set cache optimisations, for example, "Browsers for Win/NT NTFS file system", etc. All these do is change the number of cache blocks for:

Read Caching: Demand Caching

Read Caching: Look-Ahead Caching Factor

Write Caching: Write-back cache

Write Caching: Write-through cache

Each cache block is 4 KB.
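To put the cache sizes in terms of 4 KB blocks, here is a quick sanity check in Python. The function name and constants are made up for illustration, and it assumes the whole per-array cache is divided into 4 KB blocks with nothing reserved, which the software doesn't actually confirm.

```python
# Rough arithmetic only: assumes the entire per-array cache is split
# into 4 KB blocks (an assumption, not something CI/O documents).
BLOCK_KB = 4                      # cache block size in KB
DEFAULT_CACHE_KB = 12284          # default cache per array
MAX_CACHE_KB = 64 * 1024 - 4      # 64 MB minus 4 KB


def cache_blocks(cache_kb: int, block_kb: int = BLOCK_KB) -> int:
    """Return how many whole cache blocks fit in cache_kb."""
    if cache_kb % block_kb != 0:
        raise ValueError("cache size is not a multiple of the block size")
    return cache_kb // block_kb


print(MAX_CACHE_KB)                    # 65532
print(cache_blocks(DEFAULT_CACHE_KB))  # 3071
print(cache_blocks(MAX_CACHE_KB))      # 16383
```

So under that assumption, the four caching options would be sharing roughly 3071 blocks by default, and up to 16383 with the cache size maxed out.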

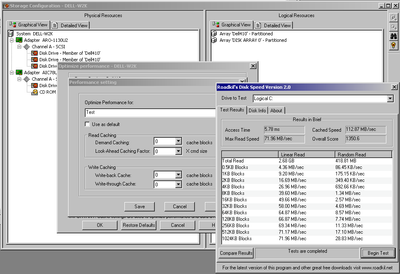

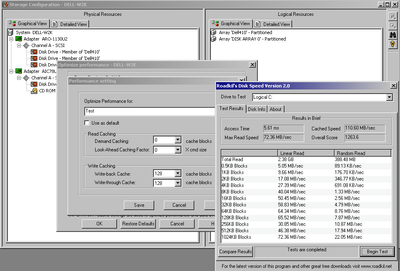

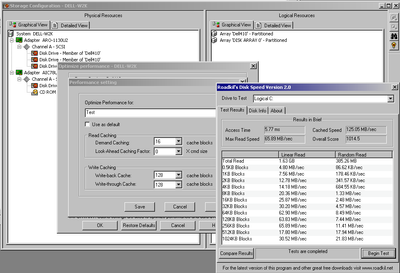

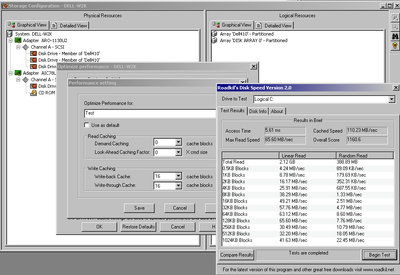

I have run various benchmarks with different values, and any non-zero value for the "Look-Ahead Caching Factor" seems to decrease performance. In fact, the best performance is with all caching disabled (every value set to 0). With the cache disabled, the benchmark performance of the RAID array matches that of a basic disk on the 2940U2W controller. My guess is that the cache on the HDD itself is fast enough that the cache on the RAID card makes no difference, and in this case it actually hurts performance.

Here are the benchmarks using Roadkil with the cache disabled:
