First post, by xplus93

- Rank

- Oldbie

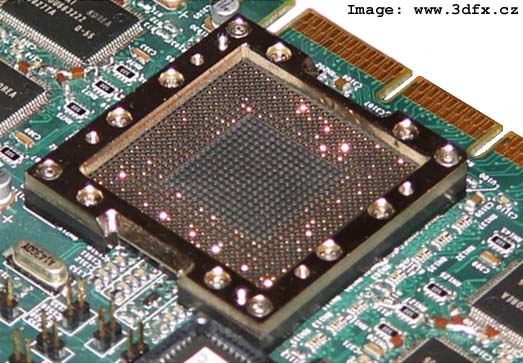

Seriously, why not? Having GPUs socketed and handled more like processors or the FPUs of old would be a genius concept. It would be easier to mount larger, quieter heatsinks and custom cooling solutions, and easy to have cheaply upgradeable RAM. If Intel can have the GPU on-chip, then why can't we have socketed GPUs?

The issues of bandwidth have been resolved, since that's the only thing Intel has focused on for the past 3-4 years. Even they know CPUs have reached the practical limits of usable speed (not counting cryptography/security). So we should easily be able to develop a modern local bus (yeah, I know it's called QPI) that can be dedicated to "visual processing". The only downside I see is a limit on the number of sockets on a board, but that isn't too dissimilar to the number of x16 slots a motherboard provides.

Also imagine the new form factors that could be developed. A liquid-cooled 6-socket board (2x CPU, 4x GPU) could be a really thin, flat system. Not to mention, if you mount the board upside-down you remove the biggest issue in liquid cooling (leakage).

Imagine a 4-5 inch thick slab sitting on your desk with the board mounted in the middle, radiators on either side and an ultra-wide screen sitting or mounted above it. Then imagine that slab having the power of a full ATX system, the kind that sounds like a jet engine.

(Goddammit, I sound like Steve Jobs, don't I? Well, the man had at least a few good ideas.)

XPS 466V|486-DX2|64MB|#9 GXE 1MB|SB32 PnP

Presario 4814|PMMX-233|128MB|Trio64

XPS R450|PII-450|384MB|TNT2 Pro| TB Montego

XPS B1000r|PIII-1GHz|512MB|GF2 PRO 64MB|SB Live!

XPS Gen2|P4 EE 3.4|2GB|GF 6800 GT OC|Audigy 2