Logistics wrote on 2020-05-21, 04:58:

The review I saw had a 5800U running over AGP 8x. Is it your motherboard or the 1000 that wants to run at 4x?

Hi, thanks. I have it running at AGP 8x and SBA is enabled. I must stress that I'm trying to mod this into a 5800, non-ultra.

According to an old online article, K4N26323AE-GC1K is 1100MHz capable. The only extant datasheet I can find for this chip states the following:

K4N26323AE-GC20 - 500MHz

K4N26323AE-GC22 - 450MHz

K4N26323AE-GC25 - 400MHz

...which works out to 1000, 900, and 800MHz effective (DDR), compared to the 1100MHz claimed for the GC1K part on the Quadro FX1000. I'm almost certain my card isn't faulty, because I have a few of them and they all exhibit the exact same behavior: when I raise the memory frequency above a certain point, the display crashes with artifacts.
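For anyone checking the arithmetic, here is a quick Python sketch of the conversion. The datasheet clocks are the ones quoted above; the function names are just mine, and treating the article's 1100MHz figure for the GC1K as an effective (double data rate) number is my assumption, not something the datasheet confirms.

```python
# Datasheet clock ratings quoted above (MHz), keyed by speed-grade suffix.
DATASHEET_CLOCK_MHZ = {"GC20": 500, "GC22": 450, "GC25": 400}


def effective_ddr_rate(clock_mhz):
    """DDR memory transfers on both clock edges, so effective rate = 2 x clock."""
    return 2 * clock_mhz


def clock_from_effective(effective_mhz):
    """Inverse of the above: real clock for a given effective rate."""
    return effective_mhz / 2


effective = {part: effective_ddr_rate(mhz) for part, mhz in DATASHEET_CLOCK_MHZ.items()}
for part, rate in effective.items():
    print(f"K4N26323AE-{part}: {DATASHEET_CLOCK_MHZ[part]}MHz clock, {rate}MHz effective")

# ASSUMPTION: the article's 1100MHz for the GC1K part is an effective rate.
gc1k_clock = clock_from_effective(1100)
print(f"K4N26323AE-GC1K (assumed): {gc1k_clock:.0f}MHz clock, 1100MHz effective")
```

If that assumption holds, the GC1K would be a 550MHz part, one step above the fastest grade the datasheet lists.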

The OP mentioned tinkering with voltages and frequencies in Nibitor, but I find the program very confusing. I'm attaching both my modded and strapped Quadro FX1000 BIOS and the 5800 Abit Siluro BIOS, and I'd appreciate it if someone could make heads or tails of the differences between them for me. Maybe the issue is that a hardware voltage mod is necessary. Again, if someone could shed some light on the matter, it would be appreciated.

EDIT: Here's another oddity. I mentioned that the clock wasn't ramping up when a 3D program was running, but I now know that it's not driver related. For one, there are 5800 benchmarks posted on this forum with driver 45.23. Second, when I set the 2D clock to 500/800 (I'm keeping the RAM at 800 because I don't yet know how far past 800 it can go without crashing), GPU-Z shows that the new values are applied, but as soon as I launch a windowed 3D app, the clock drops to 300/300, no matter what the 3D clock setting is.

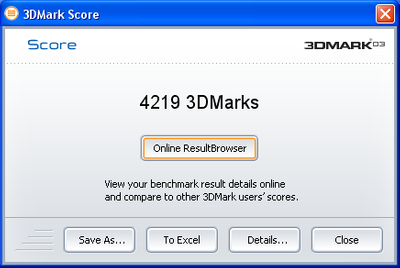

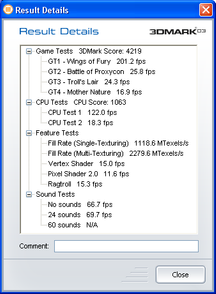

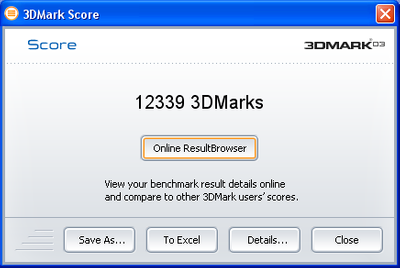

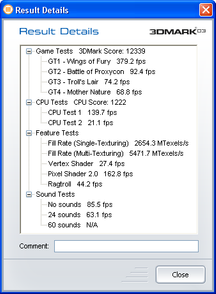

EDIT 2: Never mind, this had to do with bypassing nVidia's test check before allowing those clocks, even though I had it disabled in the registry. Time to run 3DMark 2003 again to see the new score.

EDIT 3: Something's not right. GPU-Z is logging shifts to 300/300 while 3DMark is running. I will try to modify the BIOS to 500/800 manually with Nibitor. My only concern is the lack of a 1.4V option in the Quadro BIOS.
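To spot those shifts without scrolling through the whole log, something like the Python sketch below could help. It assumes the sensor log is comma-separated text with a header row; the column names `core` and `mem` and the sample data are made up for illustration, so substitute whatever headers your GPU-Z version actually writes.

```python
import csv
import io


def find_clock_drops(log_text, core_col, mem_col, expected_core, expected_mem):
    """Return (sample_number, core, mem) for every sample below the expected clocks."""
    drops = []
    reader = csv.DictReader(io.StringIO(log_text))
    for sample_number, row in enumerate(reader, start=1):
        # Strip padding around headers and values; skip malformed extra fields.
        cleaned = {k.strip(): v.strip() for k, v in row.items()
                   if k is not None and v is not None}
        core = float(cleaned[core_col])
        mem = float(cleaned[mem_col])
        if core < expected_core or mem < expected_mem:
            drops.append((sample_number, core, mem))
    return drops


# Hypothetical log excerpt, NOT real GPU-Z output.
sample_log = """core,mem
500,800
300,300
500,800
"""

drops = find_clock_drops(sample_log, "core", "mem", 500, 800)
for number, core, mem in drops:
    print(f"sample {number}: dropped to {core:.0f}/{mem:.0f}")
```

For a real log, read the file into a string and pass the actual column headers in place of `core` and `mem`.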

EDIT 4: So modding the BIOS to 500/800 _and_ overclocking with Coolbits kept it at 500/800 during 3DMark 2003, but I experienced instability: the system crashed during the test. My PSU is adequate and has fresh Japanese caps, but maybe it's a lousy design. So I'll try a different one to rule it out, and then I'll start dropping the core speed to see if that's the problem (which would confirm that the 1.4V setting, which is absent from the FX1000 BIOS, is necessary for 500MHz).