First post, by keenmaster486

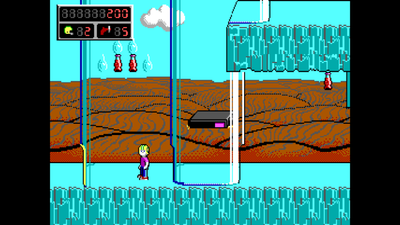

Please view the attached image to get an idea of what I am talking about. I captured that screenshot just now from DOSBox on my 1440p LCD monitor. It looks crisp and pleasant to the eye, no blur. The aspect ratio is displayed correctly rather than being stretched to the screen size. View it on a 1440p monitor at fullscreen, or zoom in to see what I mean.

I wish I could display this type of image from a DOS computer VGA source to the same monitor rather than having to use emulation.

Everyone knows the problem with displaying low resolutions on LCDs: the blur introduced by the monitor's scaling algorithm makes them basically unusable.

But on higher-resolution displays such as 1440p or 4K, integer scaling actually looks good, since each "pixel" ends up extremely close in size to the next, compared to the odd ratios you would get on a 1024x768 panel, for example.
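To put rough numbers on that, here is a small Python sketch of the arithmetic. It assumes a 2560x1440 panel for "1440p", and the function names are just made up for illustration. It is not how any particular monitor or scaler does it.

```python
def integer_scale(src_w, src_h, panel_w, panel_h):
    """Largest whole-number factor that fits the source inside the panel."""
    factor = min(panel_w // src_w, panel_h // src_h)
    if factor < 1:
        raise ValueError("source is larger than the panel")
    out_w, out_h = src_w * factor, src_h * factor
    # Leftover panel pixels become black borders, split evenly per side.
    border_x = (panel_w - out_w) // 2
    border_y = (panel_h - out_h) // 2
    return factor, out_w, out_h, border_x, border_y


def fill_ratio(src_w, src_h, panel_w, panel_h):
    """Factor a fill-the-screen scaler would need, keeping the aspect ratio."""
    return min(panel_w / src_w, panel_h / src_h)


if __name__ == "__main__":
    # 640x480 on an assumed 2560x1440 panel: whole factor, side borders only.
    print(integer_scale(640, 480, 2560, 1440))
    # 640x480 on a 1024x768 panel: filling the screen needs a fractional factor.
    print(fill_ratio(640, 480, 1024, 768))
```

On the 1440p panel, 640x480 fits at a clean 3x (1920x1440 with borders on the sides), while filling a 1024x768 panel would need a factor of 1.6, so source pixels cannot all come out the same size.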

Is there a solution for this? Everywhere I look it seems like basically the same problems that were introduced by shortsighted engineers in the early 2000s have persisted across the entire LCD industry this whole time.

My new 1440p monitor does have an option to preserve the correct aspect ratio rather than stretching, which is nice. But it still displays the picture as blurry as possible. That could only have been programmed in on purpose, presumably for no other reason than to torture people who want to display resolutions lower than native on this monitor, given that even 640x480 looks very nice with integer scaling, with no glitchy pixels, as demonstrated in DOSBox.
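For clarity, what I mean by integer scaling is plain pixel replication with no interpolation. A minimal Python sketch of the idea, on a tiny grid of grey values (the function name is made up, and this is only an illustration, not a claim about what DOSBox or any monitor firmware runs internally):

```python
def upscale_nearest(pixels, factor):
    """Replicate every source pixel into a factor x factor block."""
    out = []
    for row in pixels:
        # Repeat each pixel horizontally...
        wide = [p for p in row for _ in range(factor)]
        # ...then repeat the widened row vertically.
        out.extend(list(wide) for _ in range(factor))
    return out


if __name__ == "__main__":
    tiny = [[0, 255],
            [255, 0]]
    for line in upscale_nearest(tiny, 3):
        print(line)
```

Every output value is a copy of exactly one source pixel, so no in-between shades appear at the edges, which is where the blur comes from when a scaler interpolates instead.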

Are there any monitors that don't do this? Or scalers I can place between the source and the monitor that will accomplish what I am looking for? I know scalers exist for retro consoles, but I haven't seen any for VGA that claim to do this.

World's foremost 486 enjoyer.