First post, by Babasha

A functional test of Matrox video cards, to remind myself (and others) of the specifics and quality of their work. The goal was not to squeeze out maximum frame rates or to try non-standard solutions (such as OpenGL-to-D3D wrappers), but simply to run standard drivers and settings under Win98.

1) Compaq's Matrox Mystique PCI 4MB was one of the company's first attempts at the growing 3D graphics market. It was a breakthrough in speed, though not always in capabilities, relative to low-end competitors such as the S3 ViRGE. Speed in 3D functions and games is 2-3 times higher, and the same goes for the implementation of many acceleration functions. A huge flaw is the lack of bilinear filtering (although if you like good frame rates with PlayStation 1-style pixels, it has its charm).

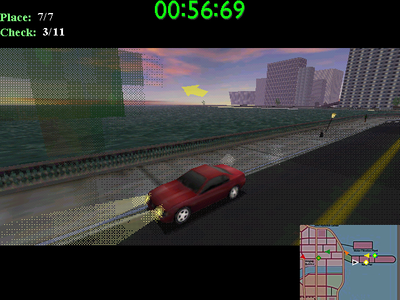

2) Matrox G100 AGP 4MB: essentially the "Mystique as it should have been." Bilinear filtering is now present, but alpha blending (texture transparency) is handled very strangely: it is implemented as a grid of blank pixels. Then again, in places this gives it a very old-school look. In Tomb Raider 2 and Turok it is already quite comparable to the ATI Rage Pro in frame rate and quality, and the picture is interesting. The card does NOT support OpenGL (at least not without third-party wrappers).

3) Matrox G200 AGP 8MB: the first flawless 3D accelerator from Matrox. Visually and in speed it can compete with the Riva TNT, and it is much more interesting than the Riva 128 and Voodoo 1 (to my taste, at least). OpenGL is supported, though without multitexturing, and the card squeezes out its 40 frames per second even on my modest Cel300A.

4) Matrox G450 AGP 32MB: one of the company's best cards in terms of quality, and even an attempt to become a "trendsetter" with its proprietary EMBM (environment-mapped bump mapping) technology. A competitor to the Riva TNT2. It offers a high-quality implementation of 3D functions and more than decent OpenGL, now with multitexturing.

PS. A separate remark to those who say “your Matrox is bullsh@t”: no, it's not. The company had its own working concept for these products, which allowed it to outlive many competitors and survive to this day. The cards were aimed primarily at the multimedia VIDEO segment: working with daughter cards or integrated TV tuners and video capture, and output to a TV. Beyond that, excellent 2D quality, support for legacy and alternative operating systems (from DOS with AutoCAD etc. and Win 3.1 up to Windows XP), workstations, and medical applications are the areas where Matrox has been, and remains, traditionally strong.

PPS. Anyone who wishes to discuss or participate is invited to this thread of the forum. On request, I can also post pictures there and compare the cards in more detail.
