Scali wrote:archsan wrote:

I have no idea what Octane even is, or what it's supposed to do... but if it doesn't work, they're doing something wrong. Pascal does not require specific modifications before a CUDA application can run.

The mumbo jumbo is that it's an "unbiased, physically-based renderer"... it only takes a sec to google/bing image search it to see what it's like, I guess. It's similar to Iray for 3ds Max.

And is it so strange that adding full support for new gen hardware to a piece of production-grade software (I don't know how large/small their team is) would take some time? They've just released a major new version, and it seems that the CUDA 8 toolkit is not even a final release yet.

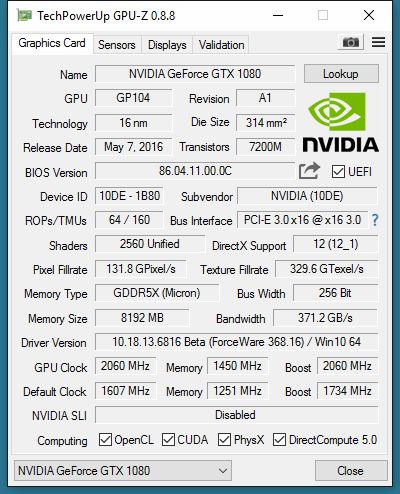

Here are some CUDA benchmarks, and the 1080 does great in them: http://www.phoronix.com/scan.php?page=article … 1080-cuda&num=2

Thanks for that link, the clock boost does help greatly with the 1080 I see. Now, 1070 vs 980 Ti on CUDA: http://www.phoronix.com/scan.php?page=article … -gtx-1070&num=4

Between them, it's not yet clear-cut which card does better on which application, and it's not like I want the Pascal specimen to perform worse, even if it's a scaled-down version. If the 1070 beats the 980 Ti on OR3 straight away, all the better... since 8GB is always nicer than 6GB, 150W is always better than 250W, etc.

spiroyster wrote:

What the dev is saying there is that they tried to implement a CUDA 8 driver, which hasn't gone too well. They seem to think the problem is with the cards' implementation (NVIDIA's problem), hence bouncing the problem back to them ("conversations with nvidia").

I suspect this means you can get the new 1070/1080 and still use Octane (it may be faster than the previous iteration anyway, being the next generation of card); it just won't be using CUDA 8. Whether or not this API/SDK is better than the previous one is anyone's guess (abstrax seems to think it's not).

A couple of members there reported that they can't use their 1080s yet. It will just take a little bit more time until it's officially supported, I'm sure, and then it's happily ever after. It's happened before with new gen cards, no biggie.

Alright, this is getting into minute off-topic details already. 😜 Let's get back to talking about AMD's demis--um, comeback.

"Any sufficiently advanced technology is indistinguishable from magic."—Arthur C. Clarke

"No way. Installing the drivers on these things always gives me a headache."—Guybrush Threepwood (on cutting-edge voodoo technology)