Reply 340 of 434, by kant explain

Don't forget the 80186 accelerator card/s.

kant explain wrote on 2023-12-01, 10:35:Don't forget the 80186 accelerator card/s.

Okay, let's not forget them then. 🙂👍

What I find interesting about the 6510, the 6502 variant used in the C64, is that it's tristate/three-state capable.

So it can be wired in parallel to another CPU, in theory.

"The primary change from the 6502 is the addition of an 8-bit general purpose I/O port, although 6 I/O pins are available in the most common version of the 6510.

In addition, the address bus can be made tristate and the CPU can be halted cleanly. "

Source: https://en.wikipedia.org/wiki/MOS_Technology_6510

If the 65C802 had the same tristate feature, it would simplify the design of a 6510+65802 daughter card that mounts on a 6510 socket. ^^

Edit: What also makes me wonder is why the existing SuperCPU was made the way it was.

It would have been much more elegant to design the SuperCPU as a pedestal w/ ribbon cable.

That way, a large motherboard and a built-in PSU could have fit inside.

Just like it was done with the SatellaView for the Super Famicom or the hard disk (Megafile) for the Atari Mega ST.

Pictures:

Source: https://commons.m.wikimedia.org/wiki/File:Sat … per_Famicom.jpg

Source: https://commons.m.wikimedia.org/wiki/File:Ata … i_Mega_ST_1.jpg

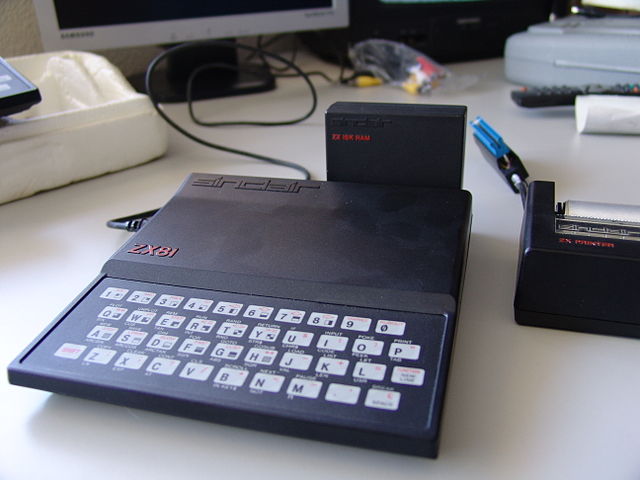

Anyway, it just came to mind because of the ZX81 memory pack.

It installed in the module port, just like the SuperCPU.

That caused a lot of reliability problems due to shaky connections.

Edit: SuperCPU vs ZX81 RAM pack

Source: https://www.c64-wiki.de/wiki/SuperCPU

"Time, it seems, doesn't flow. For some it's fast, for some it's slow.

In what to one race is no time at all, another race can rise and fall..." - The Minstrel

//My video channel//

I guess most things have been said already, but I've just found an interesting YouTube video from the early 80s.

https://www.youtube.com/watch?v=ZMVedYif3wM

It shows the different categories of computers, C64, IBM PC, Apple, ZX80 etc.

Interestingly, it essentially says that the IBM PC is not for the hobbyist, because it's meant to use commercial, ready-made software.

Which isn't completely true, given that the IBM PC 5150 had characteristics of a home computer as well: cassette port, ROM BASIC...

Public domain (PD) software was strong on the platform early on, too, as far as I know.

Anyway, these were the 80s, when professor- and teacher-like individuals were the computer experts on TV. 😉

PS: The video has the robot arms, too.

You could make the argument that those home-ish computer features were there for use in the lab/scientific endeavor. Did you mention built-in BASIC? The IBM was too expensive for most hobbyists. Who knows what they were thinking.

kant explain wrote on 2023-12-22, 02:22:You could make the argument that those home-ish computer features were there for use in the lab/scientific endeavor. Did you mention built-in BASIC? The IBM was too expensive for most hobbyists. Who knows what they were thinking.

Do you think there'd be many uses for an 80s pc for scientific computing? I'm not old enough to know what was the norm back then, but my impression is that most scientific computing was done on mainframes. I'm just trying to think of use cases.

Many Apple IIs were found in lab environments. TRS-80s also. Mainframes were primarily for business. Sure, they taught me FORTRAN on an IBM mini. But that was all they had.

kant explain wrote on 2023-12-22, 14:40:Many Apple IIs were found in lab environments. TRS-80s also. Mainframes were primarily for business. Sure, they taught me FORTRAN on an IBM mini. But that was all they had.

Cool! Do you know what exactly they were used for in the lab? Were they just used for the same things they would be used for in a business, such as general management tasks, spreadsheets, writing reports, etc.? I'm more interested in the actual science. Like, would an IBM PC be hooked up to any of the lab hardware, or could any of the actual science be done on them?

I'm not trying to argue or anything like that. I'm a computational biologist with an interest in retro computing, so I'm naturally curious about this stuff. I couldn't imagine doing any computational biology on an 80s PC due to the memory and speed limitations. I guess a PC could probably have done simple statistics and the like, but most tasks would require far more computing power. I'd assume that they would need a mainframe for most of the actual scientific computing. That seems to be one reason why Fortran is still used today. A lot of this work goes back decades to when they were using Fortran. It still gets the job done today.

It would be best to consult old books and articles, using appropriate search terms.

Btw, just seen another love letter at golem.de, a German news site.

It somewhat praises the C64 and its designers (dated 23 Dec 2023).

It can be read here: https://www.golem.de/news/commodore-64-1982-d … 312-180425.html

In the comments, someone wrote about "the mistakes of the C64".

Interestingly, it addresses similar things I mentioned before.

Here's the translation:

"The more interesting question is why Commodore was never able to build on the success of the C64.

Clearly the floppy catastrophe - which was not caused by missing conductor tracks but by a broken hardware shift register - was one reason why important software was simply not developed for the 64.

Then there was the lousy housing that was completely unsuitable for the important US education market and made expansions massively difficult.

This also meant that C64 programs could only assume what was installed in the series C64. In comparison to the Apple 2, where you were almost forced to replace the 6502 with a 65C02, which worked without any problems.

The graphics were missing the important 80-character mode that was soon included as standard with the competition.

Which was trivial thanks to the Apple II's superior open architecture.

As a result, the C64 was ultimately not suitable for professional use.

Of course it could have been something in terms of sound, but the hardware wasn't so good that it could have been dangerous for special music devices.

Furthermore, compared to the competition, the documentation is terrible and the developer support is significantly worse.

In the end, the C64 was just a games console with benefits that disappeared from the market without much fanfare when technically better systems became available.

This was ultimately catastrophic for the manufacturer because the majority of customers equated Commodore with being unsuitable for professional use."

Source: https://forum.golem.de/kommentare/sonstiges/c … 03100,read.html

So I wasn't the only one feeling that way.

That's kind of reassuring.

That being said, maybe it's not a bad thing.

It's kind of a miracle that the C64 was successful despite all its oddities.

Again, it's the community that matters. A platform rises and falls with its community.

And the C64 (and subsequently, Commodore) was lucky to have so many fans.

Edit: Another user/commenter has hinted why Z-80 systems were less popular than 6502 systems (among home users).

"Maybe you should see the whole thing more globally. CP/M and the Z80 were actually very successful.

Microsoft, for example, owes its existence to the CP/M card for the Apple II.

This was only irrelevant in the children's room market - Commodore and Atari. In return, these companies were pretty irrelevant outside of the children's room.

Yes, even MOS screwed up and had to watch as the Western Design Center dictated the line. "

Source: https://forum.golem.de/kommentare/sonstiges/c … 03133,read.html

That sounds plausible to me. The Apple II was indeed a platform often used in computer labs.

It also had numerous clones.

The C64, by contrast, was a proprietary game computer that was popular in the bedroom.

So the emotional bond was greater here, too.

These types of users also had less of a need for CP/M and productivity software.

Aside from running Turbo Pascal for school work (the Z80 SoftCard for Apple II could do that).

Edit: The C64 had a CP/M cartridge, too, but it was very slow and unstable.

It is said to work only in early C64 revisions, because there's a flaw in the power distribution.

Maybe the C64 PSU or the C64 provide insufficient power to the module.

Anyway, no big deal. It's just another little bug.

https://virtuallyfun.com/2018/07/27/running-c … e-commodore-64/

https://www.reddit.com/r/vintagecomputing/com … e_of_the_worst/

That being said, the "Soft 80" utility is very interesting.

It emulates an 80 char mode in software. ^^

Edited.

I guess there are two separate worlds here...

If you go by the 'official' story of scientific computing, then there were big budgets, and large, expensive, capable machines were marketed towards this market.

At the same time, the world of scientific computing didn't operate in a vacuum. So there were people who had a home/personal computer at home, and were developing hardware and software on that.

And they may also take that into the lab, to rig up some solution with hardware that was not specifically marketed to that task, but could be made to do it regardless. Of course they wouldn't be capable of number crunching at a large scale, but there were other tasks they could do, like collecting and plotting data, or driving robot arms or other kinds of manufacturing machines, or other kinds of controllers.

Jo22 wrote on 2023-12-23, 14:50:Clearly the floppy catastrophe - which was not caused by missing conductor tracks but by a broken hardware shift register - was one reason why important software was simply not developed for the 64.

Which is false, because that problem was adequately handled in software.

Jo22 wrote on 2023-12-23, 14:50:Then there was the lousy housing that was completely unsuitable for the important US education market and made expansions massively difficult.

Commodore knew this, and developed the "Educator 64", which was essentially a C64 in the more robust casing of a PET.

https://en.wikipedia.org/wiki/Commodore_Educator_64

So again, that argument is false.

The 80-column argument is one that is already dealt with in the German video you posted:

If you want readable 80-column text, you need a custom screen with higher resolution than what the regular TV standard of the time was capable of.

It would also require a more expensive video circuit with more memory to drive it.

It would put the machine into an entirely different price range.

So the argument is flawed to begin with. The C64 was supposed to be an affordable home computer. Like all home computers of the time, that meant it had to work with regular TV standards, so it could be used either with a TV set that people already had in the house, or with a commodity monitor based on the TV standard, as commonly used in studio setups or closed-circuit setups for security cameras and whatnot. Even the original IBM PC with CGA and the PCjr specifically have a 40-column mode for composite displays; the 80-column mode is only usable with an RGBI TTL monitor, which IBM didn't even have on the market until years later.
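To put rough numbers on the bandwidth and memory side of this, here is a back-of-the-envelope sketch. The figures are assumptions for illustration, not from the posts above: 8 pixels per character cell, about 52.6 µs of visible time per NTSC scanline (optimistic, since real machines use less of the line), and roughly 4.2 MHz of luma bandwidth for a good TV set.

```python
# Rough estimate of the video bandwidth needed for 40 vs 80 column text.
# All three constants are assumptions chosen for illustration.
ACTIVE_LINE_US = 52.6   # assumed visible portion of one NTSC scanline, in microseconds
NTSC_LUMA_MHZ = 4.2     # assumed luma bandwidth of a broadcast-grade TV signal
CHAR_WIDTH_PX = 8       # assumed character cell width in pixels


def required_bandwidth_mhz(columns: int) -> float:
    """Highest frequency needed to resolve alternating on/off pixels."""
    pixels = columns * CHAR_WIDTH_PX
    dot_clock_mhz = pixels / ACTIVE_LINE_US  # pixels per microsecond equals MHz
    return dot_clock_mhz / 2                 # one full cycle covers two pixels


def text_buffer_bytes(columns: int, rows: int = 25) -> int:
    """Bytes of character codes for one screen (one byte per cell, no colour)."""
    return columns * rows


for cols in (40, 80):
    bw = required_bandwidth_mhz(cols)
    verdict = "fits within" if bw <= NTSC_LUMA_MHZ else "exceeds"
    print(f"{cols} columns: ~{bw:.1f} MHz, {verdict} ~{NTSC_LUMA_MHZ} MHz; "
          f"{text_buffer_bytes(cols)} bytes of screen memory")
```

Under these assumptions, 40 columns stays inside what a TV can resolve, while 80 columns needs about twice the bandwidth and twice the character memory, which is the cost argument in a nutshell.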

It's always funny how these people do not mention any of the downsides of these early 80-column displays: they were usually text-only and monochrome, and with the worst ghosting ever, making them completely unusable for any kind of animation.

Having 40 column colour and graphics on a regular TV, which had no problems with animation, is really a much better compromise than IBMs with MDA (or even Hercules) at the time.

And what to think of the laughable solution that the Atari ST offered: you could have regular PAL/NTSC-capable medium resolution colour graphics... Or you could have high resolution monochrome, for which you required another monitor.

And the monitor was not compatible with the medium resolution colour at all, so you had to plug your screens around all the time.

That makes sense. It's just..

The Apple II platform was based on a 6502, as well, despite being used in a professional environment.

The Apple II, despite being seen as a home computer, is very much like the professional IBM PC in several ways.

It was expandable, had internal slots and an open design based on off-the-shelf parts.

The IBM PC bus was inspired by the Apple II edge connectors/slots, as well.

And both Apple II and IBM PC have very good documentation, way down to the schematics.

(That was "normal" 1970s practice strictly speaking. Lots of 70s products had complete documentation.)

So it's kind of interesting that the Z80 SoftCard by Microsoft was almost a standard component of an Apple II at the time.

Even Apple II emulators care about the SoftCard, not least because a lot of CP/M-80 programs are stored on Apple II disks.

Thinking about this is very fascinating, I think.

The Apple II was like the C64 of schools, so to say.

Until the Apple II GS and/or Macintosh hit the scene, maybe.

Here in Germany, the Apple II was kind of well known before the C64, too.

Especially the open design was welcome, I think.

It allowed designing custom interface cards.

The IBM PC (and its clones) later took this role, I'm sure.

After the C64 was released, however, the Apple II largely vanished into obscurity.

That's how it looks, at least, if I read through old computer magazines.

In the late 70s, however, it was still being widely advertised in ham/computer mags.

Edit: The Educator 64/PET 64 was a response by Commodore to fix the issue, but failed to catch on.

The floppy drive is broken in multiple ways, the shift register is just one problem.

Edit: Funny. The Atari ST's hi-res mode was among the most sane things from that era, I think.

That 640x400 mode was similar to AT&T/Olivetti "Super CGA" in terms of fidelity.

It was fine for real work. It should have been part of IBM's CGA from the very start.

And it's possible to use low/medium resolution on a hi-res monitor, too.

There was a hack published; it involved a switch, too (necessary because the video cable has a sense pin for the hi-res monitor).

Edit: Small edits, I'm on a poor smartphone and typing is difficult here.

Edit: 80 char mode is only a problem for lo-fi colour displays.

Most b/w monitors could do it just fine. No need for a special "data display".

A humble b/w studio monitor from the 1960s would do, as well.

In the 70s, electronic hobbyists hacked their b/w portable TVs to be video monitors.

That's the same folks who were around in the Apple II era.

Jo22 wrote on 2023-12-23, 15:30:That makes sense. It's just..

The Apple II platform was based on a 6502, as well, despite being used in a professional environment.

The Apple II, despite being seen as a home computer, is very much like the professional IBM PC in several ways.

The Apple II was just one of the first of its kind. It inspired many followers, including the C64 and the IBM PC.

It's just that Commodore took it into one direction (take the 6502 and shared memory video circuit, and improve the design and cut costs), where IBM took it into another (more expandability, use a better CPU than the limited 6502 to break the 64k barrier).

I don't think the Apple II was specifically designed to be used in a professional environment, it just happened to be one of only three choices on the market, the other two being the PET and the TRS-80 (and shortly thereafter the Atari 8-bit line).

And of those limited choices, it apparently was the best one.

Jo22 wrote on 2023-12-23, 15:30:The Apple II was like the C64 of schools, so to say.

Not here.

I've never actually seen an Apple II back in the day.

We had C64s at school (real C64s, not Educator 64s, so that argument that they were 'unsuitable' doesn't really hold either), and later moved to XT clones.

Jo22 wrote on 2023-12-23, 15:30:Here in Germany, the Apple II was kind of well known before the C64, too.

Especially the open design was welcome, I think.

It allowed designing custom interface cards.

As did the C64. My dad actually did that. Our first cartridge was a tape turbo cartridge that he and some colleagues at work had made.

The C64 had both the cartridge port and the user port, which could both be used for all kinds of things.

The cartridge port is very similar to the Apple II and PC expansion buses. Although there is only one physical connector at the back of the machine, you can break-out the bus into multiple slots.

There are adapters available for that.

Jo22 wrote on 2023-12-23, 15:30:After the C64 was released, however, the Apple II largely vanished into obscurity.

That's how it looks, at least, if I read through old computer magazines.

In the late 70s, however, it was still being widely advertised in ham/computer mags.

As I said earlier: the C64 completely rewrote the rules on what a computer should cost, and what it is capable of.

Apple II seems to have gotten a small following in the early years, but its prices never dropped when C64 and other cheap alternatives arrived in the early 80s, making the Apple II a niche product, probably only interesting to people who already had an Apple II and were looking for an updated machine.

It was more in the price range of the IBM PC than the C64, and the PC would be more powerful and capable than the Apple II, with its more capable 8088 CPU, its larger case, more powerful PSUs and overall more 'industrial' design. And then there was the booming software market for MS-DOS.

So while technically the Apple II may have been closer to the C64 than to the PC (although the C64 easily outperforms the Apple II in terms of graphics and sound), price was an entirely different matter.

The Apple II could neither compete on price with the C64 nor on technology with the IBM PC.

Scali wrote on 2023-12-23, 15:19:And what to think of the laughable solution that the Atari ST offered: you could have regular PAL/NTSC-capable medium resolution colour graphics... Or you could have high resolution monochrome, for which you required another monitor.

And the monitor was not compatible with the medium resolution colour at all, so you had to plug your screens around all the time.

Ok ... taking deep breaths, counting backwards. The Atari ST was an absolute GEM of a computer (no pun intended). It offered everybody something. Gorgeous high res text for those that needed it. Pretty nice color graphics and medium res text. One could wonder why 1 monitor that did both wasn't offered. Probably because of the expense. A monitor like that in 1985 could itself cost a grand in USD.

The Atari ST was everything a Mac 128/512/Plus could only dream of being. For 40% the cost. The ST still sorta kinda had that console feel. The keyboard was pretty crappy admittedly. If Atari had only created something more professional looking ... so plastic-y. But it really offered so much for the price.

The IBM 5151 monitor is much revered by people today (being that CGA is so limiting). The ghosting was on account of the P39 phosphors, chosen to compensate for the 50 Hz refresh. Everyone loved that schnitzel. There were no perfect solutions anywhere in those days. What is this thread about? OHHHHH the C64. Was that perfect? I'm afraid to ask!

Scali wrote on 2023-12-23, 15:48:As I said earlier: the C64 completely rewrote the rules on what a computer should cost, and what it is capable of.

[..]

I absolutely agree, the evidence is everywhere.

And that kind of depresses me. 😔

Because, it's like with cheap airlines these days, I think.

Or with the discounters (P*nny, Ald* etc).

The hard, never ending price competition results in a drop in quality.

But that's another story, I suppose.

Edit: What did set the Apple II apart was its design, also, I think.

Because, it's not only a random console chassis.

No, it has the form factor of a typewriter.

That's a very important detail, I think.

If you look at an Apple II, you see a piece of equipment.

kant explain wrote on 2023-12-23, 15:54:Ok ... taking deep breaths, counting backwards. The Atari ST was an absolute GEM of a computer (no pun intended).

The Atari ST was a last-minute pre-emptive strike by Atari to try and release a 16-bit machine before Commodore got their Amiga on the market.

And it shows.

The strategy worked however, at least initially.

The Atari ST already had some software on the market by the time the Amiga was launched. As a result, a lot of early software for Amiga were poor ports from the Atari ST, not making use of the Amiga's unique capabilities. To add insult to injury, the Atari ST ran its 68000 at 8 MHz, where the Amiga ran it at 7.xx MHz (frequency dependent on the video output, as the system was still synchronized to the video output), so the Atari ST was slightly faster with the same software in many cases.
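For a sense of how big that clock gap is, a quick calculation follows. The 8 MHz figure is from the post; the Amiga values used here (about 7.15909 MHz for NTSC, about 7.09379 MHz for PAL) are the commonly cited clocks and are an assumption on my part, as is the idea that raw clock ratio is a fair proxy for speed.

```python
# Compare CPU clocks only; this ignores bus contention, custom chips, etc.
ATARI_ST_MHZ = 8.0                                  # from the post above
AMIGA_MHZ = {"NTSC": 7.15909, "PAL": 7.09379}       # assumed, commonly cited values


def st_advantage_percent(amiga_mhz: float, st_mhz: float = ATARI_ST_MHZ) -> float:
    """How much faster the ST clock is, as a percentage of the Amiga clock."""
    return (st_mhz / amiga_mhz - 1.0) * 100.0


for standard, mhz in AMIGA_MHZ.items():
    print(f"{standard}: Amiga at {mhz:.5f} MHz, "
          f"ST ahead by {st_advantage_percent(mhz):.1f}%")
```

With these numbers the ST comes out roughly 12% ahead on clock speed alone, which fits the "slightly faster with the same software" description.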

kant explain wrote on 2023-12-23, 15:54:One could wonder why 1 monitor that did both wasn't offered. Probably because of the expense. A monitor like that in 1985 could itself cost a grand in USD.

I doubt it. It's not that difficult to get a monitor to display multiple resolutions. IBM did that for both their EGA and VGA standards.

I think the limitation in the Atari ST is in the hardware. Probably a result of rushing it to market.

EGA selects between medres and hires by inverting the sync signal polarity. That makes it trivial to detect the desired resolution at the monitor side, without requiring the fancy multiscan/multisync monitors that we would later get in the SVGA era.

Not sure why Atari couldn't have done that as well.
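The polarity trick can be sketched in a few lines. This is only an illustration of the idea, not a description of any real monitor's circuitry: the names are hypothetical, and the line counts and scan rates (200 lines at about 15.7 kHz for positive vertical sync, 350 lines at about 21.85 kHz for negative) are my recollection of the EGA display's two modes, so treat them as assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScanMode:
    name: str
    lines: int
    hsync_khz: float


# Assumed values for an EGA-style dual-frequency monitor (illustrative only).
MODES_BY_VSYNC_POLARITY = {
    "positive": ScanMode("200-line (CGA-compatible)", 200, 15.7),
    "negative": ScanMode("350-line (high resolution)", 350, 21.85),
}


def select_scan_mode(vsync_polarity: str) -> ScanMode:
    """Pick the scan mode from the sync polarity alone, as the monitor would."""
    try:
        return MODES_BY_VSYNC_POLARITY[vsync_polarity]
    except KeyError:
        raise ValueError(f"unknown polarity: {vsync_polarity!r}") from None


mode = select_scan_mode("negative")
print(f"{mode.name}: {mode.lines} lines at {mode.hsync_khz} kHz")
```

The point is that the monitor only has to look at one bit of information to choose between two fixed timings, so no frequency-measuring multisync logic is needed.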

Jo22 wrote on 2023-12-23, 15:57:Edit: What did set the Apple II apart was its design, also, I think.

Because, it's not only a random console chassis.

No, it has the form factor of a typewriter.

That's a very important detail, I think.

If you look at an Apple II, you see a piece of equipment.

I see an ergonomic nightmare.

Scali wrote on 2023-12-23, 15:19:It's always funny how these people do not mention any of the downsides of these early 80-column displays: they were usually text-only and monochrome, and with the worst ghosting ever, making them completely unusable for any kind of animation.

Having 40 column colour and graphics on a regular TV, which had no problems with animation, is really a much better compromise than IBMs with MDA (or even Hercules) at the time.

It's simple, really. 80x24 was the terminal standard on mainframes, on terminals at the airport etc.

The 25th line was the status line (time, connected, baud rate settings).

CP/M programs or *nix programs assumed 80 characters per line, too.

80 characters also allowed typing a letter.

So any word processor could make good use of it.

But that was the minimum, really. Ideal would be 120 or so.

Programming is more fun at 80 chars, too.

You don't constantly have line breaks.

But again, 80 chars aren't the end of the road here, either.

DOS programs from the 80s supported 132 chars (WordStar, Lotus 123 etc) if an appropriate video adapter was installed.

Many Super EGA/VGA cards had hi-res text modes that could be selected via mode utility.

But even before that, special boards existed.

Edit: Again, the C64 has the ancient "Soft 80" utility.

It can simulate an 80 character mode surprisingly well.

I think it kind of saved the day multiple times back then.

It can also be used in Basic, I believe, to make programming easier. Cool stuff.

Scali wrote on 2023-12-23, 15:48:The Apple II could neither compete on price with the C64 nor on technology with the IBM PC.

The original perhaps not, but there were countless clones and kits. Like with the ZX Spectrum, maybe.

Bondwell comes to mind, for example.

Scali wrote on 2023-12-23, 16:03:

kant explain wrote on 2023-12-23, 15:54:Ok ... taking deep breaths, counting backwards. The Atari ST was an absolute GEM of a computer (no pun intended).

The Atari ST was a last-minute pre-emptive strike by Atari to try and release a 16-bit machine before Commodore got their Amiga on the market.

And it shows.

The strategy worked however, at least initially.

The Atari ST already had some software on the market by the time the Amiga was launched. As a result, a lot of early software for Amiga were poor ports from the Atari ST, not making use of the Amiga's unique capabilities. To add insult to injury, the Atari ST ran its 68000 at 8 MHz, where the Amiga ran it at 7.xx MHz (frequency dependent on the video output, as the system was still synchronized to the video output), so the Atari ST was slightly faster with the same software in many cases.

kant explain wrote on 2023-12-23, 15:54:One could wonder why 1 monitor that did both wasn't offered. Probably because of the expense. A monitor like that in 1985 could itself cost a grand in USD.

I doubt it. It's not that difficult to get a monitor to display multiple resolutions. IBM did that for both their EGA and VGA standards.

I think the limitation in the Atari ST is in the hardware. Probably a result of rushing it to market.

The Amiga had the larger following, no argument. But it could never be found in a business processing environment due to the ridiculous interlaced graphics. For all the design put into it, very professional packaging, very professional s/w environment, they opted to saddle it with crappy graphics modes. It's a shame really.

The Atari was not exactly what you'd call a professional/business type computer either, needless to say. But it had more features that were appropriate. The Atari had a huge following among music professionals. Were the audio capabilities superior to the Amiga's? Don't know really. There must be some reasons though.

Sometimes it just comes down to practicalities. If you have to stare at dookie text all day and wear coke bottle bifocals after 6 months, all the rest of the features mean nothing.

EGA and VGA??? As far as I know those weren't available in 1981. EGA monitors in 1985 were very expensive. I bought an NEC Multisync II in early 1988 and it was 600 USD mail order (heavily discounted) before shipping. The Atari was after all a sort of home computer. Spending who knows what for an all-in-one monitor was not very cost effective. Go look at ads and see how much high-res color monitors cost in the mid 80s.

Scali wrote on 2023-12-23, 16:05:Jo22 wrote on 2023-12-23, 15:57:Edit: What did set the Apple II apart was its design, also, I think.

Because, it's not only a random console chassis.

No, it has the form factor of a typewriter.

That's a very important detail, I think.

If you look at an Apple II, you see a piece of equipment.

I see an ergonomic nightmare.

Comparable to the PETs in appearance. Whatever.

The Apple IIs had expandability the C64 didn't. It was overpriced, but for situations where it was warranted, it was a much superior solution.