First post, by akira77

Could argb16161616 be used instead of argb8888/argb2101010 for the Default3DRenderFormat option?


It could, but there is one thing to consider: the display output format always follows Default3DRenderFormat, and for argb16161616 only the float variant is supported as an output format (see the DXGI docs). On the other hand, things are rendered internally into unorm render targets (except render targets that are float in the first place), so when argb16161616_unorm is converted to argb16161616_float at image presentation, the precision is effectively lowered from 16 to 10 bits. Of course, that could still be better than rendering at 10-bit precision all the time.
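To make the constraint concrete, here is a minimal Python sketch of the format pairing described above. The list of presentable formats is the set of back-buffer formats DXGI accepts for flip-model swap chains; the mapping from each Default3DRenderFormat value to an internal render target (`INTERNAL_TARGETS`) and the helper `pick_output_format` are hypothetical illustrations, not dgVoodoo's code.

```python
# Hypothetical sketch, not dgVoodoo code: which back-buffer format each
# Default3DRenderFormat value would need, and whether a conversion is involved.

# Flip-model swap chains accept only these back-buffer formats (per DXGI docs).
FLIP_MODEL_FORMATS = {
    "DXGI_FORMAT_B8G8R8A8_UNORM",
    "DXGI_FORMAT_R8G8B8A8_UNORM",
    "DXGI_FORMAT_R10G10B10A2_UNORM",
    "DXGI_FORMAT_R16G16B16A16_FLOAT",
}

# Assumed internal (unorm) render target for each option discussed in the thread.
INTERNAL_TARGETS = {
    "argb8888": "DXGI_FORMAT_B8G8R8A8_UNORM",
    "argb2101010": "DXGI_FORMAT_R10G10B10A2_UNORM",
    "argb16161616": "DXGI_FORMAT_R16G16B16A16_UNORM",
}


def pick_output_format(option):
    """Return (back-buffer format, needs_conversion) for a render format option."""
    internal = INTERNAL_TARGETS[option]
    if internal in FLIP_MODEL_FORMATS:
        return internal, False  # the render target can be presented as-is
    # 16-bit unorm is not presentable, so it has to be converted to 16-bit float
    return "DXGI_FORMAT_R16G16B16A16_FLOAT", True


for opt in INTERNAL_TARGETS:
    print(opt, pick_output_format(opt))
```

Only the 64-bit option ends up with `needs_conversion == True`, which is exactly where the precision loss mentioned above happens.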

All in all, I think it could only be implemented with this constraint in mind. Would it improve HDR appearance?

Dege wrote on 2023-06-25, 12:48:
> It could, but […] Would it improve HDR appearance?

Thanks a lot for the info.

I think it does, since something changes when argb16161616 is used. With some games, if I Alt+Tab out with this set, the game soft-locks and I have to force-quit it from Task Manager.

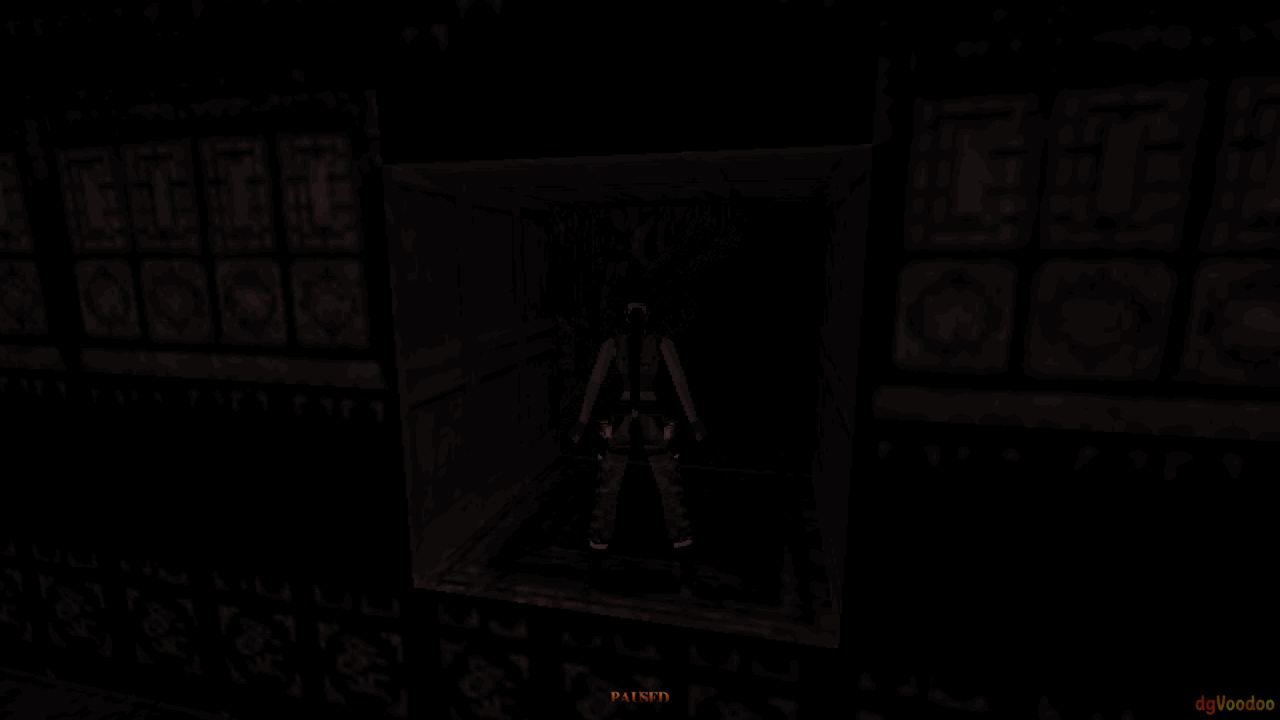

In this case, Tomb Raider 3 works OK. I use dgVoodoo 2.8.2 with its .conf and only the two DLLs: D3DImm.dll and DDraw.dll.

Does dgVoodoo only support argb16161616_float, and not argb16161616_unorm?

argb8888 is 32-bit, so does that mean argb16161616 is 64-bit?

So it would improve HDR, yes? I use HDR with older Tomb Raider games such as Tomb Raider 3.

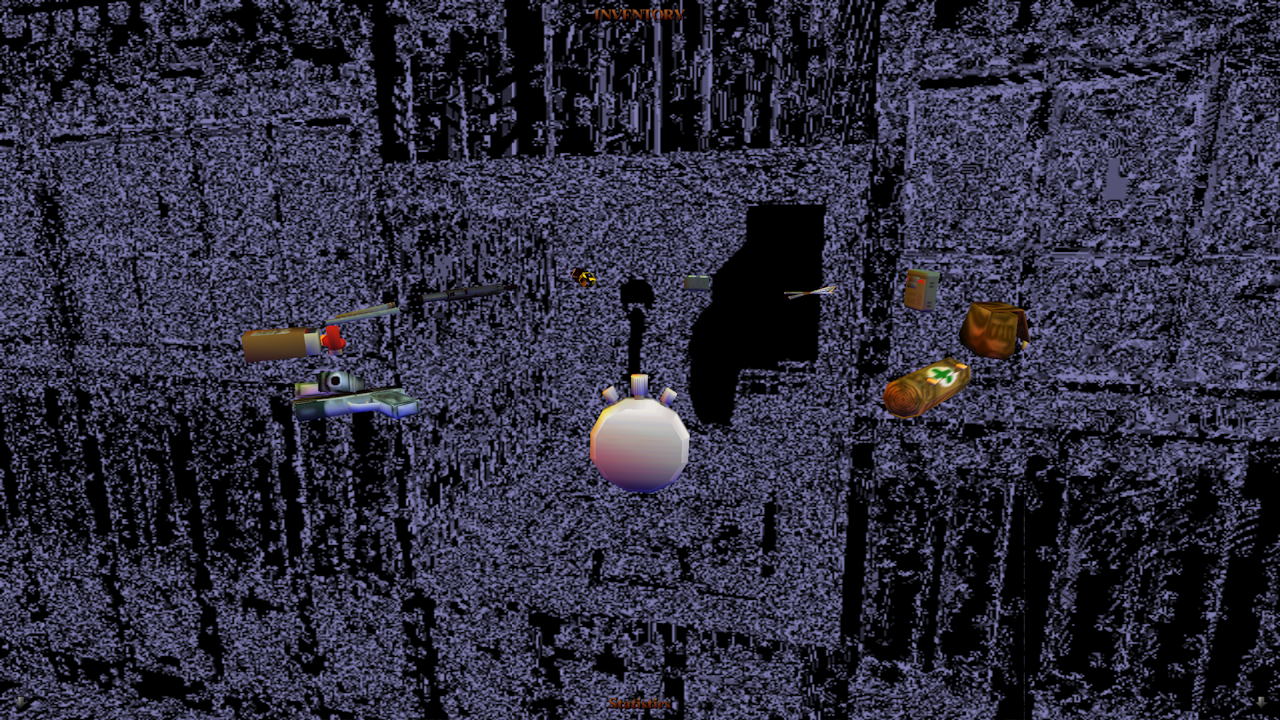

Now, of course, using argb2101010 breaks the rendering of the monochrome inventory background screen because of the reduced alpha bits (argb2101010 has only 2).

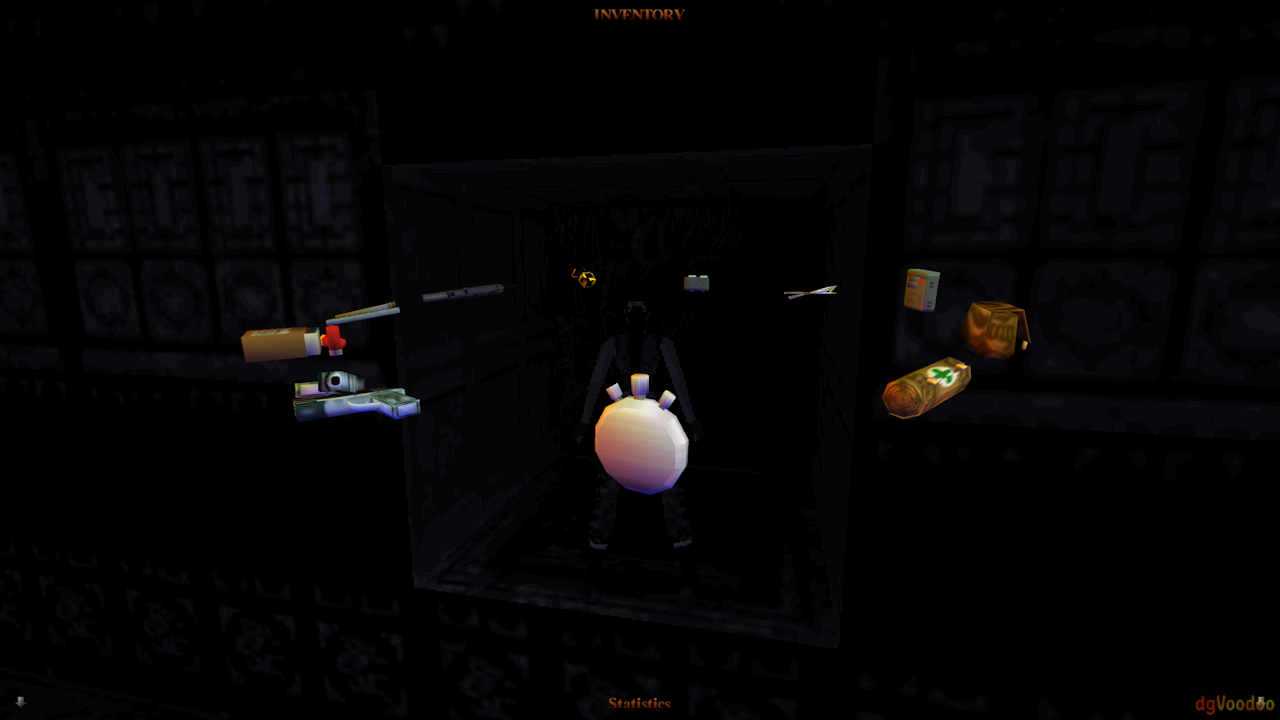
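Why 2 alpha bits hurt can be shown with a small model of storing alpha as an unsigned normalized value: with 2 bits there are only four possible levels (0, 1/3, 2/3, 1), so any semi-transparent overlay snaps to one of them. This is a simplified sketch assuming plain round-to-nearest; real GPUs follow the D3D conversion rules, which may differ on exact ties.

```python
import math

# Alpha bits in each Default3DRenderFormat value discussed in the thread.
ALPHA_BITS = {"argb8888": 8, "argb2101010": 2, "argb16161616": 16}


def quantize_alpha(alpha, bits):
    """Store alpha in [0, 1] as an unorm value of `bits` bits and read it back.

    Simplified model: scale to 0..2^bits-1, round half up, divide back.
    """
    max_code = (1 << bits) - 1
    clamped = min(max(alpha, 0.0), 1.0)
    code = math.floor(clamped * max_code + 0.5)
    return code / max_code


def distinct_levels(bits, steps=256):
    """Count how many different alpha values survive a smooth 0..1 ramp."""
    return len({quantize_alpha(i / (steps - 1), bits) for i in range(steps)})


for name, bits in ALPHA_BITS.items():
    print(name, quantize_alpha(0.4, bits), distinct_levels(bits))
```

An alpha of 0.4 survives unchanged with 8 or 16 bits but becomes 1/3 with 2 bits, and a 256-step ramp collapses to 4 levels.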

So I thought I'd use argb16161616 instead, and then the monochrome background rendering is not broken.

The only thing is, the moment I use argb16161616, the dgVoodoo watermark appears even though it is set to off; if I use argb2101010 or the default, argb8888, the watermark is not present.

.conf attached

dgVoodoo currently does not support argb16161616 at all. So if you write it into the config file manually, dgVoodoo cannot read it (see the debug layer output) and uses the default config instead. That's why the logo is there.
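The fallback described above can be modelled roughly like this. This is a hypothetical sketch, not dgVoodoo's parser: it only handles a minimal INI-style `[DirectX]` snippet, and the accepted value set and the default values are assumptions. It shows why one unreadable value also brings back the watermark.

```python
import configparser

# Hypothetical model of the described fallback; NOT dgVoodoo's actual parser.
# Assumed: the values 2.8.2 accepts, and defaults that keep the watermark on.
SUPPORTED_RENDER_FORMATS = {"auto", "argb8888", "argb2101010"}
DEFAULT_DIRECTX = {"Default3DRenderFormat": "auto", "dgVoodooWatermark": "true"}


def load_directx_settings(conf_text):
    """Return the effective [DirectX] settings for a config file's text."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep key case as written in the file
    parser.read_string(conf_text)
    user = dict(parser["DirectX"]) if parser.has_section("DirectX") else {}
    fmt = user.get("Default3DRenderFormat", DEFAULT_DIRECTX["Default3DRenderFormat"])
    if fmt not in SUPPORTED_RENDER_FORMATS:
        # An unreadable value invalidates the whole file: every setting,
        # including dgVoodooWatermark = false, reverts to the defaults.
        return dict(DEFAULT_DIRECTX)
    return {**DEFAULT_DIRECTX, **user}


conf = "[DirectX]\nDefault3DRenderFormat = argb16161616\ndgVoodooWatermark = false\n"
print(load_directx_settings(conf)["dgVoodooWatermark"])
```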

argb16161616 is 64bit in total, and the component bits can be interpreted either as a 16 bit float (_float), 16 bit integer (_uint or _sint) or 16 bit integer normalized into the [0..1] or [-1, 1] float interval (_unorm, _snorm). Traditionally (pre-DX10) the unorm (or snorm) interpretation is used all the time, except for explicit float D3D9 resources.
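A quick way to see the different interpretations is to read the same 16-bit component pattern under each suffix. The sketch below uses Python's `struct` (format `e` is IEEE half precision) and follows the DX10+ SNORM rule that both -32768 and -32767 map to -1.0; the function name is just for illustration.

```python
import struct


def interpret_component(bits):
    """Read one 16-bit component pattern under each format suffix."""
    if not 0 <= bits <= 0xFFFF:
        raise ValueError("need a 16-bit pattern")
    raw = struct.pack("<H", bits)
    as_float = struct.unpack("<e", raw)[0]  # IEEE 754 half precision
    as_sint = struct.unpack("<h", raw)[0]   # two's complement
    return {
        "_float": as_float,
        "_uint": bits,
        "_sint": as_sint,
        "_unorm": bits / 0xFFFF,                # 0..65535 -> [0, 1]
        "_snorm": max(as_sint / 0x7FFF, -1.0),  # -32768 and -32767 -> -1
    }


# Four components per pixel: 4 x 16 = 64 bits, versus 32 bits for the others.
BITS_PER_PIXEL = {
    "argb8888": 4 * 8,
    "argb2101010": 2 + 3 * 10,
    "argb16161616": 4 * 16,
}

print(interpret_component(0x3C00))
print(BITS_PER_PIXEL)
```

The pattern 0x3C00 is exactly 1.0 as `_float`, but only about 0.234 as `_unorm`, so the bits themselves mean nothing until the format suffix is fixed.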

So, the point is, dgVoodoo could render into argb16161616_unorm targets but at the end it must be converted to argb16161616_float for the display output. That's where some precision is lost, because a 16-bit float in the [0..1] interval has only 10 bits of precision. But it would definitely be good to avoid the reduced alpha bits.
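The precision loss at presentation can be measured directly: convert every 16-bit unorm code to the nearest half float, which is a minimal model of the unorm-to-float conversion, and count what survives. The "10 bits" comes from the 10 stored mantissa bits of a half float; just below 1.0 its step is 2^-11, versus 1/65535 for unorm16.

```python
import struct


def to_half(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def unorm16_to_float16_loss():
    """Convert every unorm16 code to float16; return (distinct results, max error)."""
    halves = set()
    max_err = 0.0
    for code in range(65536):
        value = code / 65535  # what the unorm code means in [0, 1]
        h = to_half(value)
        halves.add(h)
        max_err = max(max_err, abs(h - value))
    return len(halves), max_err


distinct, worst = unorm16_to_float16_loss()
# In [0.5, 1) a half float steps by 2**-11, while unorm16 steps by 1/65535,
# so many neighbouring unorm codes collapse onto the same half value there.
print(distinct, worst, 2 ** -11, 1 / 65535)
```

The worst-case error stays within half a half-float step near 1.0 (2^-12), which is still much finer than 8-bit output, but far coarser than the 16-bit unorm render target.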

Dege wrote on 2023-06-26, 18:57:
> dgVoodoo currently does not support argb16161616 at all […] That's why the logo is there.

Thank you for confirming this. So it only supports argb2101010.

Knowing this, it is actually best to use argb8888 even with HDR (at least with the flip presentation model), since the lack of alpha bits breaks rendering.

Dege wrote on 2023-06-26, 18:57:
> So, the point is, dgVoodoo could render into argb16161616_unorm targets but at the end it must be converted to argb16161616_float for the display output. […]

So dgVoodoo could, but currently does not, right?

Dege wrote on 2023-06-26, 18:57:
> argb16161616 is 64bit in total, and the component bits can be interpreted […]

Last question: is argb16161616 used in D3D11 and D3D12?

So, yes, argb16161616 is not supported but I'll implement it. If nothing else, it'd be nice for the alpha bits.

Also, argb16161616_float exists in D3D9, so if the hardware supports it, it can be used there as well. But argb16161616_unorm is DX10+ only.

Dege wrote on 2023-06-27, 09:15:
> So, yes, argb16161616 is not supported but I'll implement it. If nothing else, it'd be nice for the alpha bits.

Yes! That'll be great! I eagerly await it!

This mode is also supported by UE4's r.SceneColorFormat cvar, value 4 (PF_FloatRGBA).

Dege wrote on 2023-06-27, 09:15:
> Also, argb16161616_float exists in D3D9, so if the hw supports it then it can be used with that as well. But argb16161616_unorm is DX10+ only.

Got it. Thanks a lot for the info.

akira77 wrote on 2023-06-27, 22:48:
> yes! that'll be good! I eagerly await! this mode is also supported by ue4's 'scenecolorformat' cvar value 4; […]


I've added various color spaces to the recent WIPs, for HDR rendering, Wide Color Gamut and such.

Is this working fine? (I couldn't reproduce that bad scenario with the old ARGB2101010 option enabled.)

Re: dgVodooo 2.7.x and related WIP versions (2)
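For context on the color space additions, this is how back-buffer formats are commonly paired with DXGI color spaces for SDR, HDR10 and scRGB output. It is a sketch of the usual pairings using real `DXGI_COLOR_SPACE_TYPE` names, not a list of dgVoodoo's options; the helper `typical_color_space` is hypothetical.

```python
# Common format/color-space pairings; NOT dgVoodoo's actual option list.
COLOR_SPACE_FOR_FORMAT = {
    # SDR: gamma 2.2 (sRGB-like) transfer, Rec.709 primaries
    "DXGI_FORMAT_B8G8R8A8_UNORM": "DXGI_COLOR_SPACE_RGB_FULL_G22_NONE_P709",
    # HDR10: SMPTE ST 2084 (PQ) transfer, Rec.2020 primaries (wide color gamut)
    "DXGI_FORMAT_R10G10B10A2_UNORM": "DXGI_COLOR_SPACE_RGB_FULL_G2084_NONE_P2020",
    # scRGB: linear transfer, Rec.709 primaries, values may go outside [0, 1]
    "DXGI_FORMAT_R16G16B16A16_FLOAT": "DXGI_COLOR_SPACE_RGB_FULL_G10_NONE_P709",
}


def typical_color_space(back_buffer_format):
    """Return the usual color space for a back-buffer format (hypothetical helper)."""
    try:
        return COLOR_SPACE_FOR_FORMAT[back_buffer_format]
    except KeyError:
        raise ValueError(f"no typical pairing for {back_buffer_format}") from None


print(typical_color_space("DXGI_FORMAT_R16G16B16A16_FLOAT"))
```

The float format is the one that pairs with linear scRGB, which is another reason a 64-bit output path ends up as argb16161616_float rather than unorm.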