kithylin wrote:

EIST is thermal protection and is totally different from speedstep. EIST should never be touched and left on at all times.

EIST = Enhanced Intel Speedstep Technology. It's the same thing as speedstep - speedstep is power conservation and throttling first introduced with Pentium III, while EIST was first introduced with Pentium M (and technically modern processors have "EEIST" - Enhanced EIST, but that's a mouthful 😵). EIST not only adjusts frequency in a more granular manner than previous hardware, but can also adjust cache availability and other features to further improve thermals and power draw in response to load.

All speedstep does is change the multiplier up/down based on load.

Not entirely. Depending on the chip it can adjust cache availability, FSB, vCore, etc. to reduce thermals and power consumption when the CPU is not heavily loaded (which is determined based on performance state). The original implementation was designed to improve battery life in mobile computers, but it's made its way into desktop systems to reduce heat and power draw when the machine isn't doing particularly much (and there's nothing wrong with that EXCEPT when you're benchmarking). If your GPU has adaptive clocking, that should be defeated if possible for benchmarking as well, but for normal usage these features can (and should) be left in their default-on positions (on many GPUs, defeating the adaptive clocking will require the fan to run noticeably louder all the time).

To give an example from my modern system that has EIST and nVidia GPU Boost: full clocks are 2.8GHz for the CPU and 1.1GHz for the GPU, however even in relatively modern games (like Skyrim) it isn't uncommon to see the CPU at 2GHz and the GPU at 700-900MHz; on loading screens and during cut-scenes it's not uncommon to see the GPU drop all the way down to 324MHz (where it idles on the Aero desktop). None of this matters for the game (gameplay is perfectly smooth), but for benchmarking this will usually drop scores because "light load" areas will see the power management kick in.
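For anyone who wants the "clocks follow load" idea spelled out, here is a toy sketch in Python. It is not how any real chip or driver decides things: the P-state table and the `pick_frequency` helper are made up for illustration, and real hardware also changes voltage and other settings alongside the clock. The only point is that the clock goes to the lowest step that still covers what the workload is asking for, and to the top step when it asks for everything.

```python
# Toy model of load-based clock scaling.
# ASSUMPTION: this P-state table is invented for illustration only;
# real processors expose their own set of states (and voltages).
P_STATES_MHZ = [800, 1200, 1600, 2000, 2400, 2800]


def pick_frequency(demand_mhz, p_states=P_STATES_MHZ):
    """Return the lowest available clock that still covers the demand."""
    for freq in sorted(p_states):
        if freq >= demand_mhz:
            return freq
    # Demand exceeds the top state: run at the maximum clock.
    return max(p_states)


if __name__ == "__main__":
    # Idle desktop, moderate game load, full load, and an over-the-top demand.
    for demand in (100, 1900, 2800, 5000):
        print(f"demand {demand} MHz -> clock {pick_frequency(demand)} MHz")
```

With this made-up table, a moderate load lands on an intermediate step rather than the maximum, which is the same kind of behaviour as seeing a 2.8GHz part sit at 2GHz in a game.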

All intel chips are designed to run at max speedstep all the time on the stock cooler designed for them with no ill effects.

In theory, in ideal conditions, yadda yadda. There's still nothing bad about running cooler and using less power when the machine doesn't need to be at 100%. 😀

I'm not trying to argue the whole "my CPU needs to be at absolute zero" point here - as long as you're under the envelope maximum it isn't generally a problem, but nothing is HARMED by running cooler where possible, and the power savings, while not dramatic on a per-user basis, become meaningful when spread out across the millions of machines deployed.

Imagine if someone was doing an animation render job and left the computer on 24-7 for a few months, it would be at max speedstep (and max load) that entire time.

This is a minority usage scenario, and render farms are generally designed and built to handle 24x7 loading in terms of heat and power handling. They're usually fairly loud setups as a result. At-home systems are another story altogether, and standard parts aren't generally designed with a 100% 24x7 duty cycle in mind (and you can find plenty of stories about F@H, SETI, coin-mining, etc. killing hardware prematurely due to heat).

They may run -slightly- hotter and use a smidge more power but if they're not overclocked, the difference is negligible and the performance gains are huge. In general anyone that owns an Intel CPU not running it at max speed is missing out on what their chip is capable of, this goes for older core2duo chips and even the modern ones.

Not true. The difference in power draw and temperatures can be fairly dramatic on the newest hardware, and the performance gains are not noticed outside of benchmarks - if the system is being loaded enough to need "full power" it will get "full power" (and chips with Turbo will even go beyond that as long as they're within their thermal envelope). What power management will do as a benefit is let the thing run cooler, quieter, and at lower power draw when it's just sitting on the desktop or checking email or doing any of a number of other tasks where a modern CPU only sees a few % load. There is no good reason to waste power, run hotter, etc. in those situations, even if the cooling solution is capable of dealing with it.

To give you a modern (and somewhat extreme) example, look at nVidia Kepler GPUs with GPU Boost:

http://www.techpowerup.com/reviews/NVIDIA/GeF … X_Titan/25.html

http://www.techpowerup.com/reviews/NVIDIA/GeF … X_Titan/34.html

CPUs generally aren't quite so dramatic, but 20-40% power savings aren't unreasonable with EIST (or AMD's equivalent), along with the associated reduction in heat output and (potentially) noise. 😀
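To put the 20-40% figure in per-user versus "millions of machines" terms, here is a back-of-the-envelope sketch in Python. Everything except the 20-40% range is a placeholder I picked to make the arithmetic concrete: the 50 W baseline draw, the 8 hours a day, and the one-million-machine fleet are assumptions, not measurements, so swap in your own numbers.

```python
def saved_kwh_per_year(baseline_watts, saving_fraction, hours_per_day, days=365):
    """Energy saved per machine per year, in kWh, for a given fractional saving."""
    return baseline_watts * saving_fraction * hours_per_day * days / 1000


if __name__ == "__main__":
    # ASSUMPTIONS (placeholders, not measured values):
    baseline_watts = 50      # average draw with power management disabled
    hours_per_day = 8        # hours the machine is on per day
    fleet_size = 1_000_000   # number of machines deployed

    for saving in (0.20, 0.40):  # the 20-40% range mentioned above
        per_machine = saved_kwh_per_year(baseline_watts, saving, hours_per_day)
        fleet_gwh = per_machine * fleet_size / 1_000_000  # kWh -> GWh
        print(f"{saving:.0%} saving: {per_machine:.1f} kWh/year per machine, "
              f"{fleet_gwh:.1f} GWh/year across the fleet")
```

Per machine the number is small, which is why nobody notices it on their own power bill; multiplied across a large fleet it stops being small.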

And besides that, the maximum temperature these chips can handle is 95c before shutting off. It's almost impossible to hit that on an air cooler with a stock (not-overclocked) chip, even with the stock cooler.

You've obviously never had to clean 3-4 years of cat hair out of grandma's old Dell Inspiron... 🤣

Also, for the processor originally mentioned (Core 2 Duo E4300), TCase is 61.4°C per Intel specifications (http://ark.intel.com/products/28024/Intel-Cor … 2%20Duo%20E4300).

Some other random processors I looked up on ARK:

Core i7 920: 67.9°C

Core i7 4770k: 72.7°C

Core 2 Extreme QX9770: 55.5°C

Pentium G3258: 72°C

Can you point out a specific example of a chip that has TCase at 95°C or higher? Or that is documented running continuously at 95°C 24x7 in a 100% loading scenario and not failing?

Again - I'm not disagreeing with you on defeating power management features on a desktop for benchmarking; it can have an impact on the resulting scores*, however for normal use (including for gaming) those features harm nothing by being left on, and will generally reduce heat, noise, and power draw by doing their job.

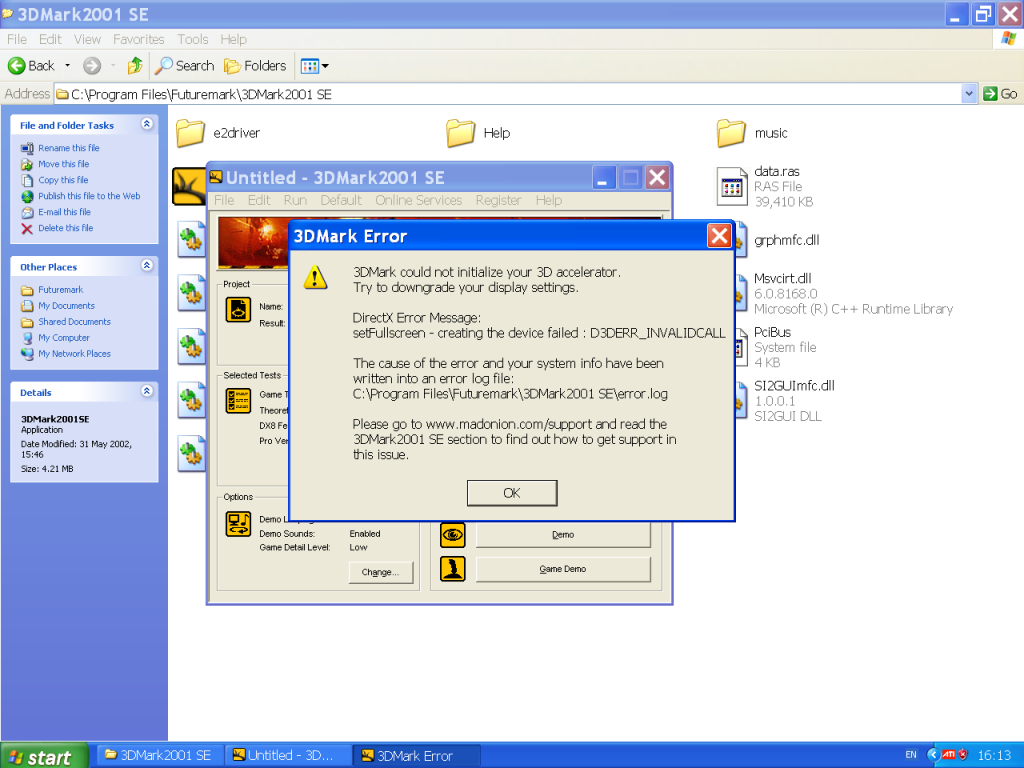

* As far as the "why" for those interested: generally benchmarks like 3DMark, AquaMark, Unigine, etc. attempt to emulate real-world gaming loads, so they will have variable complexity throughout the run of the suite, and the load on the CPU/GPU will accordingly go up and down in response. With power management enabled, the low-load sequences will see the CPU/GPU drop into lower power states. This will still produce playable frame-rates, but you won't get the absolute highest frame-rates possible during those sequences, and in many cases it's those "high climbs" that can beef up a score (they run up the overall FPS average for a test, the entire run, etc.). In gaming the same effect happens, however in gaming we generally don't care about achieving the highest possible FPS, only a playable frame-rate (whatever that's defined as for your specific application), so anything extra is irrelevant, whereas in a benchmark that "extra" can mean a higher score.
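A quick numeric sketch of that averaging effect, in Python. The segment lengths and frame-rates are invented purely to show the arithmetic (they are not taken from any real benchmark run), and `average_fps` is just a time-weighted mean, not how any particular benchmark computes its score.

```python
def average_fps(segments):
    """Time-weighted average FPS over a list of (seconds, fps) segments."""
    total_time = sum(seconds for seconds, _ in segments)
    total_frames = sum(seconds * fps for seconds, fps in segments)
    return total_frames / total_time


if __name__ == "__main__":
    # ASSUMPTION: hypothetical run with one heavy and one light 30-second scene.
    # The heavy scene runs at the same 60 FPS either way (full clocks are used).
    full_clocks = [(30, 60), (30, 300)]    # light scene allowed to "climb"
    power_managed = [(30, 60), (30, 150)]  # light scene at reduced clocks

    print("full clocks:  ", average_fps(full_clocks))
    print("power managed:", average_fps(power_managed))
```

In both hypothetical runs the frame-rate never dips below 60, so they would feel the same to play, but the average (and any score built on it) is noticeably lower when the light scene is held back.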

For those that are curious, open up CPU-Z and/or GPU-Z and watch their clock, vcore, etc. readouts while you run a benchmark or a game to see this effect in action (of course you have to have hardware/software that supports power management, and has it enabled).