First post, by swaaye

This thread is about comparing GeForce and Radeon texture filtering, mostly on DirectX 9 and later cards.

---

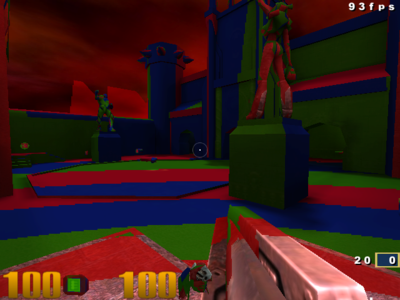

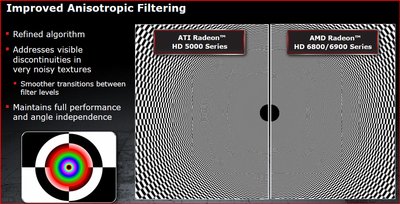

Radeon HD cards have a texture LOD bug with the Source engine that causes extreme texture aliasing. Textures are basically rendered far too sharp. This occurs in at least HL2 and Dark Messiah of Might & Magic. The workaround is to drop the mipmap quality setting to Performance in the control panel. From what I understand, this makes these cards behave more like NVIDIA cards. Some web searching suggests this issue was somewhat controversial back then. It is not limited to Source, but something extra seems to be going on there. I am not sure how the HD 5000 series behaves.

You will also almost certainly run into OpenGL issues with older games on a Radeon, especially with drivers after Catalyst 7.11 (the last release for older cards, so the newest you can use on an HD 3870 or earlier). Catalyst 7.12 has an overhauled OpenGL driver that removed some old extensions. It is also a breaking point for bump mapping in the D3D8 game Star Wars Republic Commando.

The GTX 580 is an excellent card: very fast and quiet. I did find that I needed older drivers for MSAA to work in HL2, though. It can run quite a wide range of driver releases, and it conveniently works well with the last driver released for the 8800 series, which I also played with a bit. I saw no visual issues with these cards.

![4890 Cat 13.1.mp4_snapshot_00.00_[2019.09.21_13.38.24].jpg](./thumbs/42_d25a9d973ca864136c2ad90034d47e89/4890%20Cat%2013.1.mp4_snapshot_00.00_%5B2019.09.21_13.38.24%5D.jpg)