First post, by 386SX

Hi,

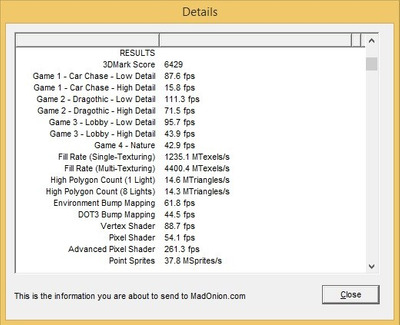

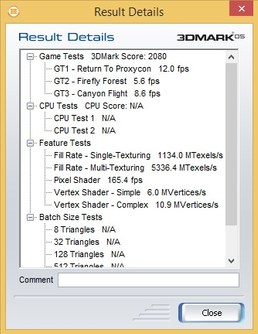

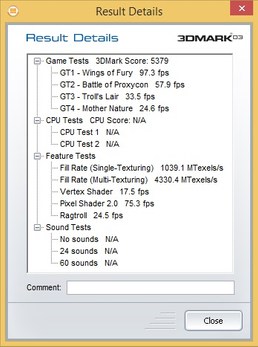

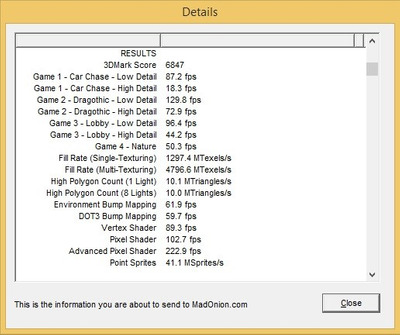

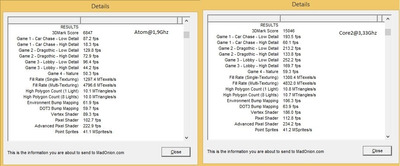

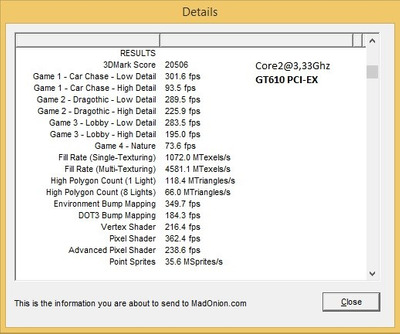

Over the years I've found some of the last, rather unusual PCI video cards, like the Radeon 7000 PCI, the GeForce FX 5200 PCI, the GeForce 210 PCI and, most recently, the GeForce GT 610 PCI, and some of them give strange results.

The earlier ones, like the Radeon 7x00 and the FX 5200, both seem to use dual AGP/PCI interface chips (or at least that's what it looks like) with GPUs that are natively AGP, intended for older PCI mainboards. The modern GeForce 210 (GT218) instead has a PLX 8112 bridge and the GT 610 a PI7C9X bridge chip. I tested the 210 card for quite some time: sometimes it behaves strangely, with latency problems, other times it gives interesting results, but overall the results are mixed and depend on the scenario.

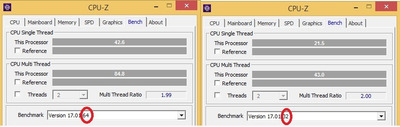

I think that in my tests the combination with the mainboard's own PCI-E to PCI bridge inside the NM10/ICH7 chipset (82801 Mobile PCI Bridge, on an Atom Mini-ITX board) builds a "castle" of translations that causes these problems. In a synthetic benchmark, increasing the PCI latency timer raises the scores, but it doesn't improve the general experience, not even for web pages or the GUI itself. At the same time, a 1080p H.264 60 fps test video is decoded by the GPU without problems and with minimal CPU load.
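For anyone not familiar with the latency timer: it's an 8-bit register in each PCI device's configuration space (offset 0x0D), counted in PCI bus clocks, and it roughly limits how long a bus master may keep the bus once another device requests it. Below is a minimal Python sketch of what raising the value means in time and in ideal burst size. It assumes a nominal 33 MHz, 32-bit bus and ignores wait states and bridge overhead; the function names are just made up for illustration.

```python
# Minimal sketch: translate a PCI Latency Timer value (config space offset 0x0D,
# an 8-bit count of PCI bus clocks) into time and an idealized burst size.
# Assumptions: nominal 33 MHz clock, 32-bit bus, one data transfer per clock,
# no wait states, no bridge overhead. Helper names are hypothetical.

PCI_CLOCK_HZ = 33_000_000   # assumed nominal conventional PCI clock
BUS_WIDTH_BYTES = 4         # assumed 32-bit PCI bus


def _check_latency_timer(clocks: int) -> None:
    # The register is 8 bits wide, so valid values are 0..255.
    if not 0 <= clocks <= 0xFF:
        raise ValueError("PCI Latency Timer is an 8-bit value (0-255)")


def latency_timer_ns(clocks: int, clock_hz: int = PCI_CLOCK_HZ) -> float:
    """Length of the bus-tenure window, in nanoseconds, for a timer value."""
    _check_latency_timer(clocks)
    return clocks * 1e9 / clock_hz


def ideal_burst_bytes(clocks: int, bus_width_bytes: int = BUS_WIDTH_BYTES) -> int:
    """Upper bound on bytes moved in that window (one transfer per clock)."""
    _check_latency_timer(clocks)
    return clocks * bus_width_bytes


if __name__ == "__main__":
    # A few common timer values, from conservative to aggressive.
    for value in (0x20, 0x40, 0x80, 0xF8):
        print(f"latency timer {value:#04x}: "
              f"{latency_timer_ns(value):7.1f} ns, "
              f"<= {ideal_burst_bytes(value)} bytes per burst")
```

If this model is roughly right, going from 0x20 to 0x80 lets the card hold the bus about four times longer per tenure, which could help a throughput benchmark without making the desktop feel any snappier, since longer bursts don't shorten the round trips through the bridges.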

I suppose the PCI bus itself isn't the real problem, just as it wasn't back in the AGP days; more likely it's these combinations of non-native hardware bridges that increase the latency. I'll soon receive the GT 610 PCI card to test.

Does anyone have experience with bridged PCI video cards? I watched the PixelPipes review on YouTube about these last PCI solutions, and I'm beginning to think it's not all about the PCI bus itself: IMHO the PCI bridges are the point to focus on, especially combined with the non-native PCI of the late mainboards these cards were built for and tested on. Maybe the same video cards would perform better on a mainboard with native PCI.

Thanks