r00tb33r wrote on 2022-11-03, 23:54:

I've been wondering why most EGA cards weren't made for 16-bit ISA, as they debuted in AT systems with 16-bit ISA bus. Considering the amount of memory (128KB or more for 16 colors at 640x350 resolution) an EGA card can have it's odd that they were given only an 8-bit bus.

The EGA was intended to be an upgrade path for PC/XT users as well as a display card for the AT, and the PC/XT only has 8-bit slots. Where the EGA card really shines is in the stepwise upgrades it made possible:

- You have an MDA + IBM monochrome monitor? Just buy an EGA, and you keep the excellent 80x25 monochrome text mode at 720x350, and get an extra 640x350 graphics mode. You can buy the Enhanced Color Display later when you can afford it.

- You have a CGA + IBM color display? Just buy an EGA, and you keep all CGA modes, as well as getting 320x200 and 640x200 at 16 colors. You can buy the Enhanced Color Display later when you can afford it.

- Your IBM color display broke? Just buy an Enhanced Color Display as a replacement. It will still work with your CGA card, and you can upgrade to EGA as soon as you can afford it.

As soon as you were the proud owner of both the EGA card and the Enhanced Color Display, you could enjoy 16-color text modes and high-resolution graphics at 640x350, but every step towards getting there made perfect sense and got the best out of the hardware you already had. In a CGA/MDA dual-display setup, you can replace either one of those cards with an EGA card to get the benefits on that card, as mentioned above. You can't use two EGA cards to replace both the MDA and the CGA card. (Well, technically you can likely put two EGA cards into a single computer by jumpering one of the cards to its "alternate base address" 2B0..2DF, but you won't have any BIOS support for it, and you need to make sure that you don't get bus conflicts when you try to read the BIOS.)

r00tb33r wrote on 2022-11-03, 23:54:

There are lots of dual port VGA/EGA cards that are 16-bit. Or is the EGA circuit always 8-bit?

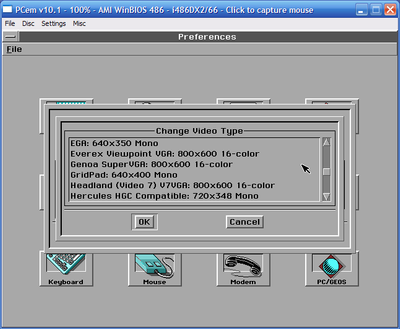

Both the EGA and the VGA circuits are designed around an 8-bit bus interface. The original IBM "video graphics array" chip (IBM's first graphics adapter implemented in a single custom chip), which was introduced with the IBM PS/2 Model 50 and was also available on the "PS/2 display adapter" ISA card, had an 8-bit bus interface as well. The programming model for most advanced capabilities of the EGA/VGA in 16-color modes requires cycles to be performed as 8-bit accesses. More modern 16-bit VGA cards either reject 16-bit cycles in configurations that don't provide straightforward video memory access, or they buffer the cycle on the card and execute it as two 8-bit operations (at a speed independent of the ISA clock, as fast as the card can go). CGA modes and 256-color mode (without special programming tricks) are 16-bit friendly, so the first generation of 16-bit EGA/VGA cards likely supported 16-bit access only in these modes.
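To illustrate why the 16-color programming model is tied to 8-bit cycles, here is a toy Python model. It is only a sketch under simplifying assumptions: the class `PlanarMemory`, its methods and the helper `split_word_write` are made up for this post, only the latches and the bit mask are modelled (no map mask, set/reset or write modes), and it does not describe the circuitry of any particular card.

```python
class PlanarMemory:
    """Toy model: four bit planes sharing one address space, one 8-bit latch per plane."""

    def __init__(self, size):
        self.planes = [bytearray(size) for _ in range(4)]
        self.latches = [0, 0, 0, 0]
        self.bit_mask = 0xFF  # 1 = bit comes from the CPU, 0 = bit comes from the latch

    def read8(self, addr):
        # A CPU read loads all four latches from the same address.
        self.latches = [plane[addr] for plane in self.planes]
        return self.latches[0]  # simplification: always return plane 0

    def write8(self, addr, value):
        # Masked bits are taken from the CPU byte, the rest from the latches.
        keep = ~self.bit_mask & 0xFF
        for i, plane in enumerate(self.planes):
            plane[addr] = (value & self.bit_mask) | (self.latches[i] & keep)


def split_word_write(addr, word):
    """Break one 16-bit write into the two 8-bit cycles a card could run internally."""
    return [(addr, word & 0xFF), (addr + 1, (word >> 8) & 0xFF)]


mem = PlanarMemory(16)
mem.planes[1][0] = 0x0F          # existing pixels in plane 1
mem.bit_mask = 0x80              # only touch the leftmost pixel
mem.read8(0)                     # load the latches with the old contents
mem.write8(0, 0xFF)              # set the leftmost pixel in every plane
print([hex(p[0]) for p in mem.planes])
print(split_word_write(0x1000, 0xBEEF))
```

The point of the model: there is just one set of 8-bit latches, so a read-modify-write like the one above works on one byte address at a time. A card that accepts a 16-bit cycle in such a mode has to run it internally as two byte cycles, which is what `split_word_write` hints at.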

Anyway, we got 16-bit graphics cards as soon as chips got complex enough to emulate an 8-bit graphics card without losing all the benefits of the 16-bit slots. By the time we got there, VGA cards were considered standard. As VGA is just an incremental upgrade from EGA (the actual design is very similar), building a third-party VGA card that can "emulate" EGA was not that much more expensive than building a third-party (super) EGA without VGA features. Supporting VGA made the card appealing to a lot more customers, so building a 16-bit EGA-only board made no sense at that time.

r00tb33r wrote on 2022-11-03, 23:54:

I also wondered why they had chosen 350 lines, and those exact timings, as that wastes a good bit of the memory page. 400 lines utilizes the memory page better, 3rd party "super EGA" cards did just that. Also forced into the weird 14-pixel ROM fonts.

The main point of EGA seemed to be getting "the best of both worlds" (MDA and CGA). MDA had the selling point of high-quality text at 720x350 pixels, using a 9x14 font, whereas CGA had the selling point of 16 colors. So the design target of EGA was to obtain 16 colors at 350 lines. And that's what they did. The main design results were:

- The MDA display was flicker-free at 50Hz, but only because its monitor had quite long persistence (the technical term is "medium-persistence phosphor"). This kind of phosphor is inappropriate for animated (color) graphics, so we need a higher refresh rate for EGA. CGA already had 60Hz refresh, which proved high enough to be accepted for use with monitors with short-persistence phosphor.

- MDA uses a 16.257MHz dot clock. To save cost, we try to use the same clock for the high-resolution EGA modes, but unlike MDA, the goal is 60Hz instead of 50Hz. With the same dot clock and a higher refresh rate, fewer dots are available per frame. That's why EGA went for 640x350 instead of 720x350 in text mode (and downgraded from 9x14 to 8x14).

- For CGA compatibility, we can't get rid of the 8x8 font. For MDA compatibility, we can't get rid of the 9x14 font. As the EGA doesn't use a dedicated character generator ROM, the fonts have to be stored in the BIOS ROM. In fact, EGA stores an 8x8 font, an 8x14 font and a list of characters to replace in the 8x14 font if it is running in MDA-compatible mode. A very notable replacement character is the "m" character. IBM uses two-pixel-wide vertical lines in their default fonts, so the m would need 6 white pixels for the three stems, separated by one black pixel each, making 8 pixels in total. This means the m would touch both ends of the character box, and subsequent m characters would run into each other. To avoid that effect, the 8-pixel-wide m character uses only a single-pixel-wide central stem and an empty space in the 8th pixel. The 9-pixel-wide m doesn't have this issue and can be displayed with even stem width. Re-using the 14-pixel ROM font instead of adding a 16-pixel ROM font saved 4KB of ROM space. That's 25% of the 16KB BIOS ROM on an EGA card.
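The arithmetic behind the timing and font points can be sketched in a few lines of Python. The dot clock, the 2-pixel stems and the ROM sizes come from the text above; the total frame sizes including blanking (882x370 dots for MDA, 744x366 dots for the 640x350 EGA mode) are my assumption based on the usual CRTC programming, so treat the results as approximate. The function names are made up for this example.

```python
DOT_CLOCK_HZ = 16_257_000  # shared by MDA and the 350-line EGA modes


def refresh_hz(total_dots_per_line, total_lines, dot_clock_hz=DOT_CLOCK_HZ):
    """Frame rate = dot clock / (dots per line * lines per frame), blanking included."""
    return dot_clock_hz / (total_dots_per_line * total_lines)


def font_bytes(rows, glyphs=256):
    """ROM bytes for a font stored with one byte per character row."""
    return glyphs * rows


# Assumed totals including blanking: 98 chars * 9 dots, and 93 chars * 8 dots.
mda_hz = refresh_hz(98 * 9, 370)
ega_hz = refresh_hz(93 * 8, 366)
# Hypothetical: the same EGA frame with 9-dot characters misses the 60Hz target.
ega_9dot_hz = refresh_hz(93 * 9, 366)

print(f"MDA 720x350: {mda_hz:.1f} Hz")
print(f"EGA 640x350: {ega_hz:.1f} Hz")
print(f"EGA with 9-dot characters (hypothetical): {ega_9dot_hz:.1f} Hz")

# Width of an "m" with three 2-pixel stems and two 1-pixel gaps.
m_width = 3 * 2 + 2 * 1
print("m needs", m_width, "pixels")

# What a 16-row font would have added to the 16KB BIOS ROM.
extra = font_bytes(16)
print(extra, "bytes =", 100 * extra // (16 * 1024), "% of the ROM")
```

With these assumed totals, MDA lands close to 50Hz and the 640x350 EGA mode close to 60Hz, while 9-dot characters at the same clock would fall well short of 60Hz.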

r00tb33r wrote on 2022-11-03, 23:54:

Has anyone built a discrete hobby clone of an EGA card? Is using a 6845 CRTC possible with additional chips or is EGA too different?

The MDA and CGA cards show the limit of what you can achieve with an integrated CRTC while performing all other functions with discrete chips. Both of those cards took up the maximum available space on an ISA card. Granted, they were through-hole, and we can save space today using SMD chips, but I estimate that would buy just a factor of two or so in complexity. The logic of the EGA card is way more complex than that of the CGA or MDA. If you want your head to blow up, just try to understand what the graphics controller can do and how you would implement that with discrete logic. Note how IBM's EGA also was a full-sized board with just 64KB of RAM, and needed an upgrade board for the full 256KB of RAM. The RAM problem would be solved today, as we don't need to build the video RAM from 16K x 4 chips. Today, we can just use eight 64K x 4 chips, so the 256KB of RAM fit where IBM just managed to place 64KB. But EGA was only possible because the remaining logic was consolidated into ASICs:

- The CRTC, while 6845-inspired, is different enough from the 6845 that you can't emulate it using a 6845.

- The Timing Sequencer, generating the clock signals and memory access signals at the correct timing.

- The Attribute Controller, managing the 64-color palette in high-res modes, the border color and the blinking.

- The Graphics Controller, interfacing the video memory at a width of 32 bits and mapping it to the 8-bit ISA bus, as well as generating the sequences of 4-bit colors to be sent to the Attribute Controller. As IBM was limited to 40-pin DIP chips, the Graphics Controller was implemented 16 bits wide, so they placed two Graphics Controller chips onto the EGA card. And that is why we have these strange "graphics position 0" and "graphics position 1" registers on the EGA: these registers are used to initialize the graphics controllers and tell each one whether it is meant to handle the low or the high 16 bits. All other graphics controller registers always access both controllers at the same time, so both need to be set up correctly to work as a virtual 32-bit graphics controller.
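As a rough illustration of what the Graphics Controller does on the display side, here is a Python sketch that turns one 32-bit fetch into eight 4-bit colors. The layout is an assumption for the example (plane 0 in the lowest byte, leftmost pixel in bit 7 of each plane), and the function names are invented; the split into two 16-bit halves merely mirrors the two-chip arrangement described above.

```python
def split_halves(word32):
    """Split a 32-bit fetch into the low and high 16 bits, one half per controller chip."""
    return word32 & 0xFFFF, (word32 >> 16) & 0xFFFF


def planes_from_word(word32):
    """Assumed layout: byte 0 = plane 0 ... byte 3 = plane 3."""
    return [(word32 >> (8 * plane)) & 0xFF for plane in range(4)]


def pixels_from_word(word32):
    """Combine one bit from each plane into a 4-bit color, leftmost pixel first."""
    planes = planes_from_word(word32)
    pixels = []
    for bit in range(7, -1, -1):
        color = 0
        for plane, byte in enumerate(planes):
            color |= ((byte >> bit) & 1) << plane
        pixels.append(color)
    return pixels


word = 0x80_C0_00_81  # plane 3 = 0x80, plane 2 = 0xC0, plane 1 = 0x00, plane 0 = 0x81
print(split_halves(word))
print(pixels_from_word(word))
```

Even this trivial bit shuffling has to happen for every character clock on the real card, on top of the CPU-side logic (latches, masks, rotate and so on), which gives an idea of the amount of 74-series logic a discrete version would need.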

I don't see how you could manage to put all the stuff IBM integrated into these chips on a single ISA board using just 74-series logic. Even if you could re-use the 6845, you won't fit the graphics controllers onto the board.
