It's not just Intel iGPU. All modern GPUs start breaking VGA/VBE functionalities bit by bit. The later the generation, the worse the VGA BIOS.

Well, first of all, please correct me if I am wrong, but to my knowledge VBE is not part of the graphics card but part of the BIOS. The BIOS contains a graphics driver that provides very basic support for a few graphics standards. This means that if you use a graphics circuit from a lesser-known manufacturer, VBE probably won't let you do more than what can be done with VGA. The support is limited not only to those few graphics standards; it is usually also limited to mode setting. So software that uses the BIOS extension defined by VESA usually gets no acceleration: not for 2D drawing such as area filling or line drawing, not for 3D, and not for video playback. VBE 3.0 introduced 2D acceleration in a limited form, but not every BIOS implemented it. The BIOS also has a standardized interface for accessing the functions of its graphics driver. This interface is called VBE. So the question is not whether the graphics circuit supports VBE (it doesn't care who sends the instructions), but whether the BIOS supports VBE and the graphics card, and how good the BIOS's implementation of the extension is.
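To make the "standardized interface" a bit more concrete: a VBE call puts 4Fh in AH and the function number in AL, and the BIOS reports the result back in AX. The following is only a small sketch of how that status word is read, based on my reading of the VBE 3.0 specification; the helper names `vbe_call_ax` and `decode_vbe_status` are made up for illustration and are not part of any real API.

```python
# Sketch of the VBE calling convention (status codes as listed in the VBE 3.0 spec).
# The function names here are hypothetical helpers, not a real library.

VBE_STATUS = {
    0x00: "successful",
    0x01: "failed",
    0x02: "not supported in current hardware configuration",
    0x03: "invalid in current video mode",
}


def vbe_call_ax(function):
    """Build the AX value for a VBE call: AH = 4Fh, AL = function number."""
    return 0x4F00 | (function & 0xFF)


def decode_vbe_status(ax):
    """Interpret the AX value the BIOS returns after a VBE call."""
    al = ax & 0xFF
    ah = (ax >> 8) & 0xFF
    if al != 0x4F:
        # AL != 4Fh means this BIOS does not implement the function at all.
        return "function not supported"
    return VBE_STATUS.get(ah, "unknown status 0x%02X" % ah)
```

For example, `decode_vbe_status(0x014F)` tells you the function exists but the call failed, which is the kind of answer a half-working implementation tends to give.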

Another thing is that modern hardware often no longer has a BIOS. Some motherboard manufacturers replaced the BIOS with EFI. That was around 2010. Not long afterwards EFI was replaced by UEFI. So, yes, support for VBE is dropping because UEFI has a different graphics extension. Those new graphics extensions have a decreasing lifespan before they get replaced by something new.

... in some cases, turning on CSM could lead to totally black screen

When you referenced CSM, did you refer to cascaded shadow mapping? If this technique isn't implemented in software then you probably can't use it with VBE. First it would need to be part of the VBE definitions (my knowledge about the accelerations VBE 3.0 offers is limited) and second the BIOS programmer would need to implement it.

I wonder if it's really possible to somehow patch/replace the broken VGA/VBE functionalities universally...

Well, if you want to hack into VBE to fix it, then yes, it is possible. To call a VBE function, software triggers a software interrupt. The jump address (interrupt vector) can be read and overwritten. So a program that performs the hack can read the jump address and then overwrite it, so that the next time the interrupt is triggered, a function of the hooking program is called instead. The hooking program can then decide whether to satisfy the request itself or pass it on to the original VBE. So you would be creating a VBE emulator, similar to the VGA emulator some graphics drivers provide with their VDM routines. But you would need a universal graphics driver as a backbone to execute the requested functions. And that's where the driver from the fan project might come in in the future.
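The hooking idea can be shown with a toy model. This is a simulation in Python, not real-mode code: the interrupt vector table is just a dictionary, the registers are a plain dict, and `firmware_int10`, `make_vbe_shim` and the returned mode number are all invented for illustration. It only shows the pattern of saving the old vector, installing your own handler, and chaining to the original for everything you don't handle yourself.

```python
# Toy simulation of hooking the video interrupt; all names are hypothetical.

VIDEO_INT = 0x10  # BIOS video services interrupt, which also carries VBE calls


def firmware_int10(regs):
    """Stand-in for a broken firmware handler: every VBE call reports failure."""
    if regs["ax"] >> 8 == 0x4F:
        regs["ax"] = 0x014F  # AL = 4Fh (supported), AH = 01h (call failed)
    return regs


def make_vbe_shim(old_handler):
    """Build a replacement handler that keeps the old vector for chaining."""
    def shim(regs):
        if regs["ax"] == 0x4F03:      # VBE function 03h: return current mode
            regs["bx"] = 0x0118       # arbitrary example mode number
            regs["ax"] = 0x004F       # report success ourselves
            return regs
        return old_handler(regs)      # everything else goes to the original
    return shim


def install_hook(ivt, vector, make_handler):
    """Read the old vector, overwrite it, and return the old one."""
    old = ivt[vector]
    ivt[vector] = make_handler(old)
    return old


def software_interrupt(ivt, vector, regs):
    """Dispatch through the table the way an INT instruction would."""
    return ivt[vector](dict(regs))
```

After `install_hook(ivt, VIDEO_INT, make_vbe_shim)` on a table that starts as `{VIDEO_INT: firmware_int10}`, a call with AX = 4F03h is answered by the shim, while any other call still ends up in the original handler. A real implementation would do the same with the actual vector for the video interrupt and would need a working driver behind the shim.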

Can you tell me more details about the fan project in question?

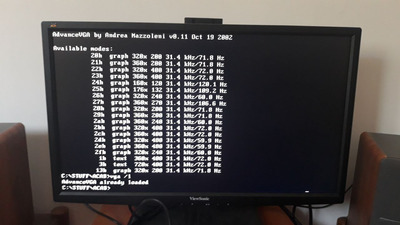

The graphics driver of this project supports multiple graphics standards (at the moment just VGA, but GenX is next in line). With this approach it can handle a lot of graphics circuits, because many support at least VGA plus one additional manufacturer-specific standard such as Intel's GenX. The project releases a video presentation about its progress from time to time.

Presentation 001 was about how to get Windows 2000 up and running on modern devices.

Presentation 002 was about version 1 of the universal graphics driver.

The current version of the driver is version 12, and it is already capable of detecting Gen7LC-capable devices (but it only detects support for the graphics standard; it can't use this graphics standard yet).

If you like, you can have a look at my Odysee channel, where I mirror low-quality versions of the presentations. But the driver is not a ready-to-use solution for gamers. Development started not long ago, and features and support arrive bit by bit over time. Since the initial requester asked about a recent project in this area, it is the closest I could think of.

https://odysee.com/@startmeup:2?view=playlists