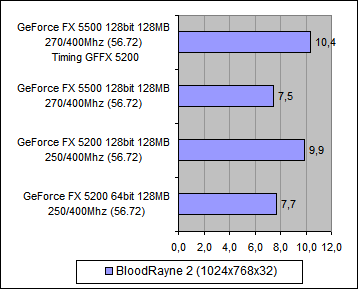

Takedasun wrote on 2023-11-29, 12:00:

Putas wrote on 2023-11-29, 09:25:

Takedasun wrote on 2023-11-29, 08:49:

In some games, the difference can be as much as 30%.

Holy Moly, I did not expect anything that big with these mature cards. Do you know other such cases?

BloodRayne 2 has the most neglected case, other games have an average difference of 5-20%.

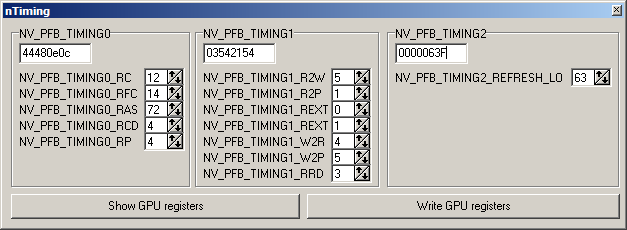

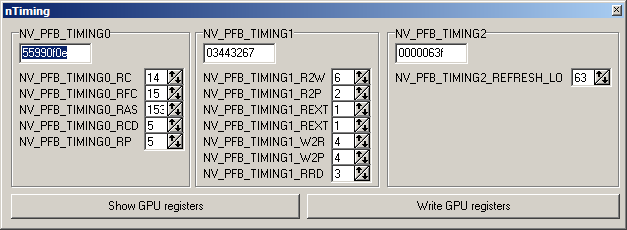

I only did research on these two video cards. Because I noticed an anomaly in the test results.

There are advantages of bad timings, video memory can be easily overclocked up to 550MHz.

I have also never seen such huge differences, neither from memory timings nor even from frequencies, and that includes big frequency gaps (like 400MHz vs 600MHz).

A 30% difference in performance usually occurs between the 128-bit and 64-bit (memory bus width) versions of the same card. In that case memory bandwidth is doubled on the 128-bit version, and you usually get only up to 30% more performance from that huge change.
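For reference, here is the theoretical bandwidth math behind that doubling, as a rough sketch. The function name and the 200MHz memory clock are just my example values, not taken from any specific card, and I am assuming DDR memory (two transfers per clock).

```python
def memory_bandwidth_gb_s(bus_width_bits, memory_clock_mhz, transfers_per_clock=2):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    transfers_per_clock=2 assumes DDR-type memory.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# Hypothetical 200MHz memory clock, used only for illustration.
narrow = memory_bandwidth_gb_s(64, 200)
wide = memory_bandwidth_gb_s(128, 200)

print(f"64-bit:  {narrow:.1f} GB/s")
print(f"128-bit: {wide:.1f} GB/s")
print(f"ratio:   {wide / narrow:.1f}x")
```

So the bus width alone doubles the peak bandwidth on paper, while the real-world gain is usually much smaller than that.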

When you change timings, that is usually equivalent to only a few megahertz of memory clock difference. For example, I recently tested DDR3 vs DDR2 memory on the same graphics card to see what difference the timings make (DDR3 usually has much worse timings than DDR2). The difference was only about 3% in 3DMark 2005.

Graphic card underclocking questions, memory bandwidth DDR2 vs DDR3 (solved)

In other words, the DDR3 version had to be clocked at 510MHz with worse timings, instead of 480MHz (DDR2 with better timings).
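Just the arithmetic on those two clocks, as a quick sketch (the helper name is made up for illustration):

```python
def relative_increase_pct(base, new):
    """Percentage by which `new` exceeds `base`."""
    return (new / base - 1) * 100


ddr2_clock_mhz = 480  # DDR2, better timings
ddr3_clock_mhz = 510  # DDR3, worse timings

extra_clock_pct = relative_increase_pct(ddr2_clock_mhz, ddr3_clock_mhz)
print(f"DDR3 clock is {extra_clock_pct:.2f}% higher than DDR2")
```

That is a single-digit percentage of extra clock, nowhere near a 30% gap.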

I am surprised that there are games/benchmarks that show such a big impact, and I will retest it. Maybe when memory is the pure bottleneck the difference can grow much more, but still... I think something other than just worse timings is going on in this case. Maybe the original FX5500 BIOS is corrupted? And the timings are unrealistically bad? I have never seen such a huge difference; it would show up in official benchmarks and reviews, which usually test 10-12 different cards with the same core and the same (or similar) frequencies. I have never seen one card be more than about 10% faster than another card of the same type and frequencies.

And particularly in the GeForce 2 - 6 generations, I remember how all cards were very even and the differences in graphs were almost non-existent.

That's why I am suspicious that the original FX5500 card is somehow screwed... or its BIOS.