386SX wrote on 2021-05-10, 09:57:

It wasn't easy to attach to the "22bit train" if people actually could only "see" 16bit or 32bit in games;

People buying a new card couldn't see squat; all they had at home was some S3 Trio or, worse, an ISA card in the old system. Most didn't even know what >20 fps felt like in a game. All they had was marketing in game/hardware magazines, salesmen pushing their own sales quotas, and friends arguing for whatever they got sold on. The Nvidia scam worked by pushing the "32bit is important" narrative while their own cards were unable to run games in 32bit at playable framerates 😀. 32bit mode tanked fps by more than half on TNT and by ~1/3 on TNT2 (less on TNT2 Ultra). You had a choice of 16bit at higher resolution, or playable 32bit at 640x480. https://www.anandtech.com/show/378/5

The other fact was that Nvidia's 16bit rendering looked really bad, and people propagating this "32bit is important" lie often equated 16bit rendering in general with this peculiarly bad Nvidia look.

3dfx failed to send a strong enough message to separate itself from this '16bit looks like poop' stigma. They also failed to anticipate this marketing angle and implement a pointless, box-ticking 32bit framebuffer in hardware as a showcase option, just to demonstrate that it made no difference to the rendered output on their hardware. It would have cost only a little bit of performance because, unlike Nvidia's TNT/TNT2, Voodoo3 did all the math at higher bit depths internally. 16bit was mainly a RAM size optimization, saving ~1MB of framebuffer (important at the time of 16MB cards); it merely saved ~120MB/s of its >2000MB/s RAM bandwidth (stupid and unimportant).
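For anyone who wants to play with those numbers, here is a back-of-envelope sketch. The resolutions and frame rates are my own assumptions (the figures above are not tied to a specific mode), and it only counts one color buffer touched once per frame, so treat it as an order-of-magnitude check rather than a model of the actual hardware.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Size of a single color buffer in bytes."""
    return width * height * bits_per_pixel // 8


def extra_cost_of_32bit(width, height, frames_per_second):
    """Extra memory and bandwidth of a 32bit buffer compared to a 16bit one.

    Assumption: the buffer is written (or scanned out) exactly once per
    frame. Overdraw, Z-buffer and texture traffic are ignored.
    """
    size_16 = framebuffer_bytes(width, height, 16)
    size_32 = framebuffer_bytes(width, height, 32)
    extra_bytes = size_32 - size_16
    extra_bytes_per_second = extra_bytes * frames_per_second
    return extra_bytes, extra_bytes_per_second


if __name__ == "__main__":
    # Hypothetical modes, picked only for illustration.
    for width, height, fps in [(800, 600, 60), (1024, 768, 75)]:
        extra, bandwidth = extra_cost_of_32bit(width, height, fps)
        print(f"{width}x{height}@{fps}: "
              f"+{extra / 1e6:.2f} MB per buffer, +{bandwidth / 1e6:.1f} MB/s")
```

Under these assumptions 800x600 costs just under 1MB extra per buffer, and 1024x768 at 75 frames per second costs roughly 118MB/s extra, which is small change next to >2000MB/s.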

Another option would be implementing a dither filter in the driver's framebuffer read-back routine in 32bit mode. Call it some catchy "HyperColorCompression" marketing wank name and there you go: checkbox ticked, screenshots look good out of the box.
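A minimal sketch of what such a read-back filter could look like, purely to illustrate the idea. Everything here is hypothetical: the function names are made up, the framebuffer is just a list of rows of RGB565 integers, and the filter is a plain 2x2 box average, not the filter 3dfx actually used on the video output.

```python
def unpack_rgb565(pixel):
    """Expand one 16bit RGB565 pixel to an (r, g, b) tuple of 8bit values."""
    r5 = (pixel >> 11) & 0x1F
    g6 = (pixel >> 5) & 0x3F
    b5 = pixel & 0x1F
    # Replicate the top bits into the low bits so full scale maps to 255.
    r8 = (r5 << 3) | (r5 >> 2)
    g8 = (g6 << 2) | (g6 >> 4)
    b8 = (b5 << 3) | (b5 >> 2)
    return r8, g8, b8


def read_back_filtered(framebuffer):
    """Return a 24bit image from a dithered RGB565 framebuffer.

    Each output pixel is the average of a 2x2 block (clamped at the right
    and bottom edges), which smooths the dither pattern in read-back data
    such as screenshots.
    """
    height = len(framebuffer)
    width = len(framebuffer[0])
    image = []
    for y in range(height):
        y_next = min(y + 1, height - 1)
        row = []
        for x in range(width):
            x_next = min(x + 1, width - 1)
            samples = [
                unpack_rgb565(framebuffer[yy][xx])
                for yy in (y, y_next)
                for xx in (x, x_next)
            ]
            row.append(tuple(sum(s[c] for s in samples) // 4 for c in range(3)))
        image.append(row)
    return image
```

The point of doing it at read-back is that the stored framebuffer stays 16bit, so nothing changes for memory use or rendering speed; only data pulled back out (screenshots, reviewer captures) gets the smoothed look.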

3dfx was all about those clever "where it counts" optimizations, but failed to properly advertise them.

386SX wrote on 2021-05-10, 09:57:

also I remember testing the "22bit option" and trying to run Quake3, and the differences were really far from visible. The 32bit internal tech of the Kyro2 compared to that was like another planet.

You could even say it was like something from the future, exactly 2 years in the future 😁

Kind of not applicable to a card released in April of 1999. In 1999 Voodoo3 "16bit" output looked like TNT2 32bit while running at TNT2 16bit speeds.

386SX wrote on 2021-05-10, 09:57:

About the Motion Compensation, it is true that a Pentium II high

I don't know what the lowest bar is for software rendering, but an overclocked Celeron was definitely enough.

A Celeron 300A@450 on a 440BX will play all DVDs without breaking a sweat in pure software mode. That's a $90 CPU from 1998.

http://edition.cnn.com/TECH/computing/9812/31 … .idg/index.html

>Celeron 333-MHz and "300a" 300-MHz models for $107 and $90

>Slot 1 Celeron-333 was listed at $115, down from $159, and the 300a version was listed at $94, down from $138

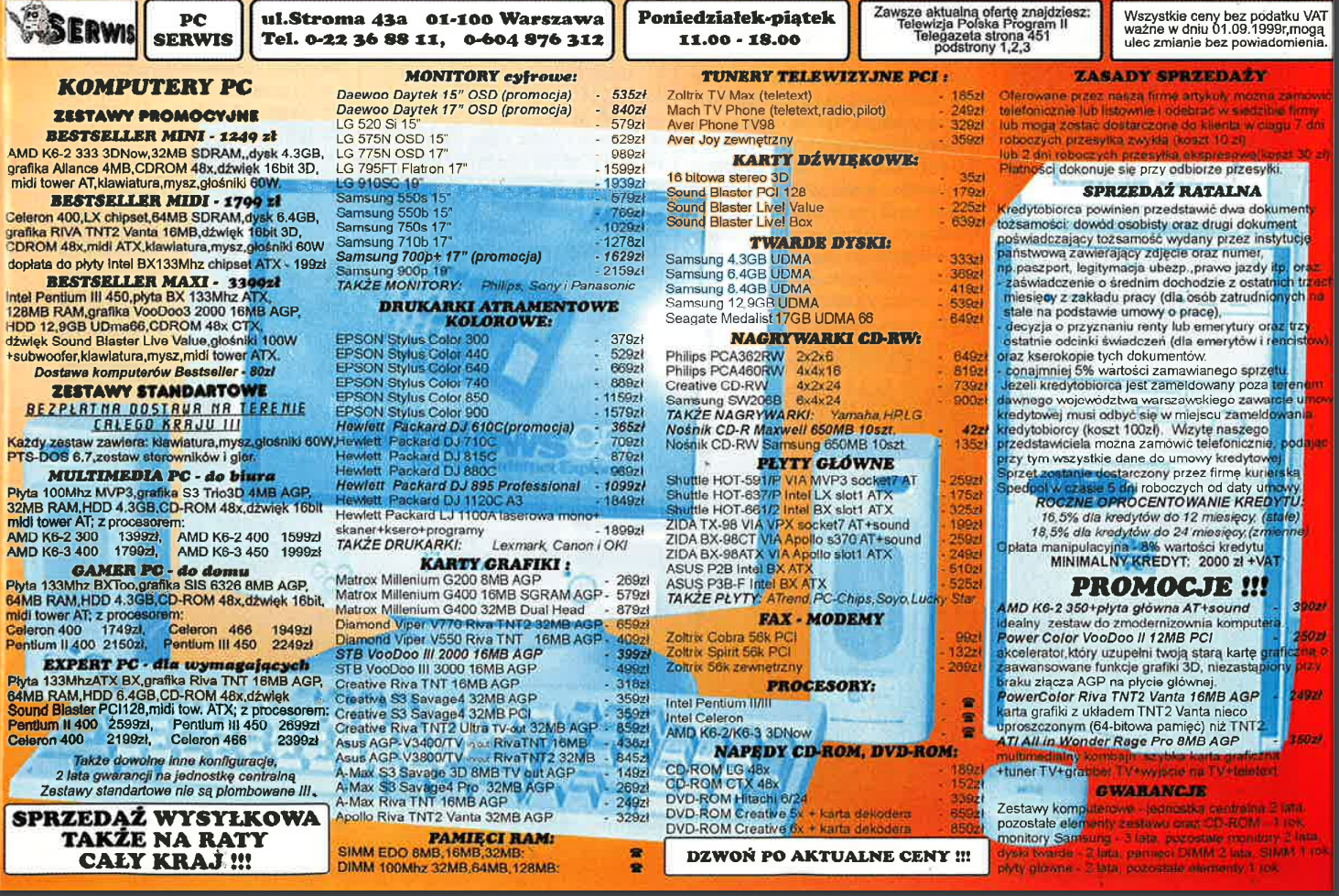

https://www.theregister.com/1999/02/08/amd_to_call_k63/

>K6-2/300 will cost $65, the K6-2/333 $75, the K6-2/350 $90, the K6-2/366 $96, the K6-2/380 $123, the K6-2/400 $138

>K6-2/450, now officially launched, $210

August 1998:

K6-2 350 MHz announced at $300 http://www.cpushack.com/CIC/announce/1998/k6-2-350.html

Celeron 300A announced at $149 http://www.cpushack.com/CIC/announce/1998/pentII-450.html

February 1999:

K6-2 450 MHz announced at $200 http://www.cpushack.com/CIC/announce/1999/k6-2-450.html

You had to be locked into the Socket 7 ecosystem, uninformed, positively insane, or an extreme cheapskate (I can "save" $30 on the motherboard!!1) to buy AMD at the time.

386SX wrote on 2021-05-10, 09:57:

But you already confessed to owning a low-end "K6-2 350". You absolutely needed >$100 in hardware to play DVDs because you decided not to spend that $100 on a better CPU 😀

386SX wrote on 2021-05-10, 09:57:

even hardware decoders PCI ones I still own, at the end the best tech on gpu was the one on ATi chipset that since the Rage 128 Pro and Rage Mobility introduced both the Motion Compensation and IDCT

Again, I would love to see the impact of different video acceleration solutions on CPU usage. I know that a Rage Pro with MC is useless when combined with a Pentium II 300MHz.