First post, by Kreshna Aryaguna Nurzaman


Well, I'm talking about the 16x Fragment Anti-Aliasing (FAA) originally used by the Matrox Parhelia. This AA method only processes "fragment pixels" (pixels on the edge of an object), collecting 16 sub-pixel samples for each of them.
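To make the idea concrete, here is a toy sketch in Python. It is only my own illustration of the principle, not how the Parhelia hardware actually does it: the shape (a half-plane), the corner test used to spot edge pixels, and the regular 4x4 sample grid are all assumptions made up for the example.

```python
def inside(x, y):
    # Hypothetical object: everything below the line y = 0.6 * x + 1.3.
    return y < 0.6 * x + 1.3


def shade_pixel(px, py, grid=4):
    """Return (coverage, samples_taken) for the pixel whose corner is (px, py)."""
    corners = [inside(px + dx, py + dy) for dx in (0, 1) for dy in (0, 1)]
    if all(corners) or not any(corners):
        # Interior or empty pixel: one sample is enough.
        # (The corner test is exact here only because the shape is a half-plane.)
        return (1.0 if corners[0] else 0.0), 1
    # "Fragment" pixel: the edge crosses it, so take grid * grid sub-samples.
    hits = 0
    for i in range(grid):
        for j in range(grid):
            sx = px + (i + 0.5) / grid
            sy = py + (j + 0.5) / grid
            hits += inside(sx, sy)
    return hits / (grid * grid), grid * grid


def render(width, height):
    """Render the toy scene and count how many samples were taken in total."""
    image, samples = [], 0
    for py in range(height):
        row = []
        for px in range(width):
            coverage, taken = shade_pixel(px, py)
            row.append(coverage)
            samples += taken
        image.append(row)
    return image, samples


image, samples = render(8, 8)
print("samples taken:", samples, "vs", 8 * 8 * 16, "for 16x on every pixel")
```

Only the pixels that the edge passes through pay for 16 samples; everything else costs one sample, which is where the claimed saving comes from.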

Theoretically, this method allows a much larger sample count with a smaller fill-rate penalty, especially since fragment pixels typically account for less than ten percent of the pixels displayed in a scene.

So theoretically, the Fragment AA method allows a bigger sample size with less of a performance drawback.
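A quick back-of-the-envelope check of that claim, as a rough Python sketch. The 1024x768 resolution is just an assumed example, the 10% edge fraction is the upper bound mentioned above, and the function name is made up. It only counts samples, so it ignores memory bandwidth, the cost of tracking fragments, and any difference between supersampling and multisampling.

```python
def samples_per_frame(width, height, samples_per_pixel, fraction_of_pixels=1.0):
    """Rough sample count: AA'd pixels take N samples, all the others take one."""
    total = width * height
    aa_pixels = round(total * fraction_of_pixels)
    return aa_pixels * samples_per_pixel + (total - aa_pixels)


W, H = 1024, 768  # assumed resolution, for illustration only

no_aa = samples_per_frame(W, H, 1)
fsaa4 = samples_per_frame(W, H, 4)                            # 4x on every pixel
faa16 = samples_per_frame(W, H, 16, fraction_of_pixels=0.10)  # 16x on edges only

print(f"4x FSAA : {fsaa4 / no_aa:.2f}x the samples of no AA")
print(f"16x FAA : {faa16 / no_aa:.2f}x the samples of no AA")
```

Under these assumptions 16x FAA needs roughly 2.5 times the samples of an unfiltered frame, against 4 times for 4x FSAA, and the gap widens as the share of edge pixels drops.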

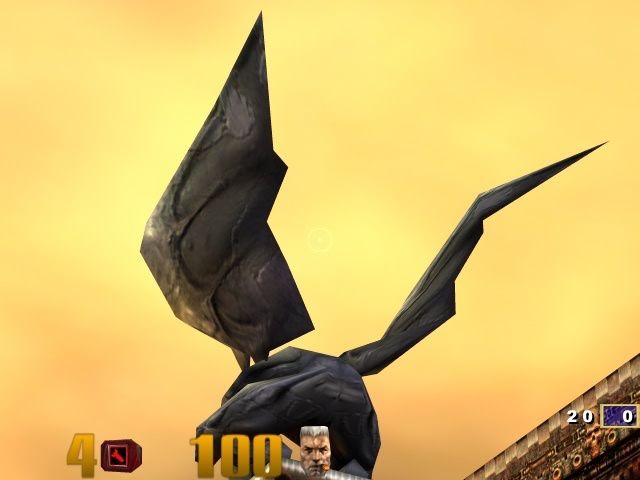

Judging from the picture, this method produces razor-sharp, very clean edges. I think this is because sample size is always more important than sampling pattern when it comes to AA quality, and edge AA allows a much bigger sample size (16x, compared to the typical 4x or 8x used in conventional FSAA methods).

(image copied from First Look: Matrox's Parhelia-512 graphic processor article on Tech Report ([url=tttp://www.techreport.com/reviews/2002q2/parhelia/index.x?pg=9]Page 9[/url])).

The question is: why does neither ATI nor nVidia use this AA method? Today's video cards have much more processing power than those of the Parhelia's era, so imagine the beauty of 64x Fragment AA or the like. Or how about enabling AA with very little performance penalty?

What are the problems with this 16x Fragment AA method that have kept both ATI and nVidia from adopting it? Okay, granted, Fragment AA does not eliminate texture shimmering (unlike conventional FSAA, which eliminates both edge aliasing and texture crawling), but there is Anisotropic Filtering for that purpose.

I have to admit that I missed the Parhelia the first time it came around, but did anyone ever have that card? Based on your experience, did you find so many problems with Fragment AA that it's actually not worth it?