Hello, I would like to respond here to the original thread "Worst video card ever", because it is locked. I didn't read the whole thread, but I have to stand up in defense of the FX5200. It is not a bad card, and it definitely doesn't deserve the "worst card ever" title. At worst, it is "below average".

I've figured out why there are so many mixed opinions. It's not the fault of the FX5200 itself, but of the card vendors. They hid the fact that they had crippled it, and Nvidia should never have allowed that, or should at least have required it to be stated clearly that a card is 64-bit and not 128-bit. Vendors could exploit this and sell crippled cards that cost them less to make. The worst company of that era for this was definitely Gainward. Their products were dressed up with pro-looking design, boxes, and names like PowerPack! Pro/660 TV-DVI etc., but most of them were crippled, and you needed to know what to pick.

I wasn't caught by this marketing with the FX5200, but it happened to me with the MX440. I just chose one of the GeForce 4 MX440 cards, not the cheapest (MX420) but nothing too expensive, as I didn't have much money in 2002. All those names looked so pro, so I just picked one of the lower MX440 models, and of course it was the very thin 64-bit version. I only found that out 15 years later, when I started to care about retro components, building old systems, and remembering what cards I had. It was a nice, thin red card. I was surprised that I was buying a GeForce 4 and such a small, lightweight card came out of the box. Well... I was scammed. But I didn't know much about hardware at that time, and I fell into this trap.

And that's exactly what happened with the FX5200!!! Some cards are not only 64-bit instead of 128-bit, but even have 333 MHz or 266 MHz DDR memory instead of the stock 400 MHz!!! Nvidia definitely should have allowed them only under a separate name, like FX5200 LE or something. This is the reason why many people, mostly kids and teenagers back in 2003 who didn't know much about hardware, just bought an FX5200, were disappointed, bashed it hard, and said it's slower and worse than a GeForce MX440 or GeForce 2 Ti. Which is not true.
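To put numbers on how much the crippling costs, here is a minimal sketch of the usual peak-bandwidth arithmetic (bus width in bytes times effective memory clock). The function name is my own, and the result is a theoretical maximum, not a benchmark:

```python
def memory_bandwidth_gb_s(bus_width_bits: int, effective_mhz: float) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 10**9 bytes).

    effective_mhz is the DDR "marketing" clock (e.g. 400), i.e. transfers
    per second in millions, not the real clock of the memory chips.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_mhz * 1e6 / 1e9


# The two extremes described in this post.
full = memory_bandwidth_gb_s(128, 400)      # 128-bit bus, stock 400 MHz memory
crippled = memory_bandwidth_gb_s(64, 266)   # 64-bit bus, 266 MHz memory

print(f"128-bit / 400 MHz: {full:.2f} GB/s")
print(f" 64-bit / 266 MHz: {crippled:.2f} GB/s")
print(f"crippled card has {crippled / full:.0%} of the full bandwidth")
```

So on paper the slowest variant has only about a third of the memory bandwidth of a proper 128-bit / 400 MHz card, while being sold under the same FX5200 name.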

Now look at the table of FX5200 cards from GPUzoo and find the Gainward ones. You can definitely see that they made most of the crippled versions, with a 64-bit bus and memory slower than 400 MHz.

https://www.gpuzoo.com/GPU-NVIDIA/GeForce_FX_5200.html

What is worse, those cards are often the "thick" variants, so they look as large and as complete as the 128-bit versions (usually the 128-bit versions were thick and the 64-bit versions were thin, PCB-size wise). But Gainward disguised them so that they looked very good: often solid-state capacitors, a thick PCB, nice design, a pro name.

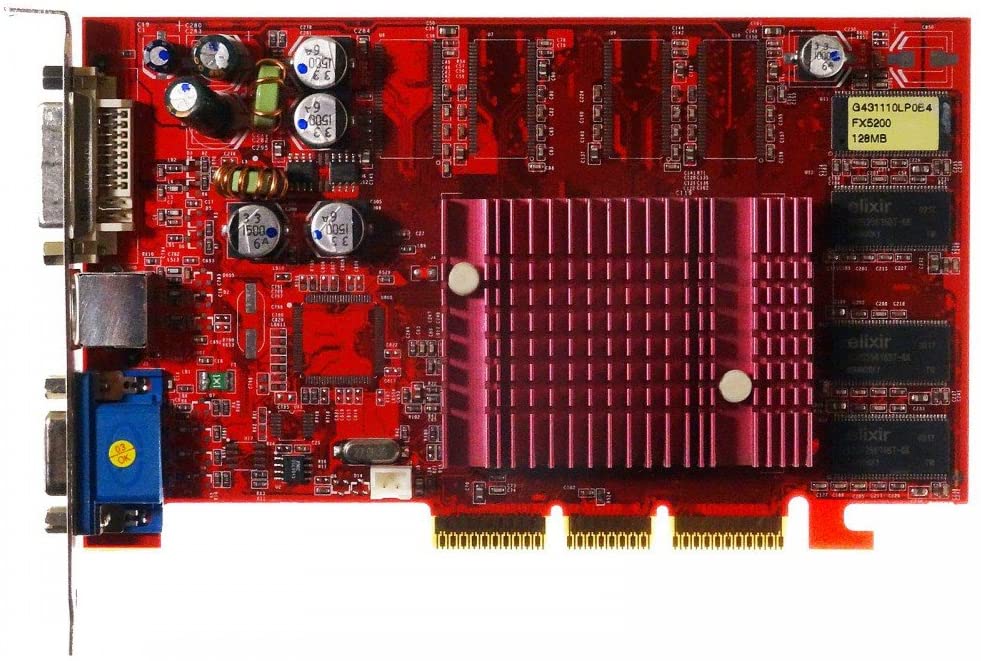

I mean, this card doesn't look like the slowest, crappiest FX5200 ever made, right?

https://www.cnet.com/products/gainward-fx-pow … fx-5200-128-mb/

But it is.

When we look at the specification, no matter that the card is thick and looks so pro, name included, you can see that according to GPUzoo it has 266 MHz memory.

And yes, I have one. I bought it as my first FX5200, just to have one. I even paid 2x more for it than I later paid for other FX5200s, and it is the worst FX5200 ever made.

I didn't understand how it could have -75B on the memory chips when the memory should be 400 MHz on an FX5200... but now I understand. -75B on the memory means 7.5 ns, which is rated for 266 MHz (DDR)... 🤣 So I got caught again, and again by Gainward. It is so dangerous to buy cards from this company blindly. There were so many companies, and I chose blindly when buying a GeForce 4 MX440 in 2003... Of course I had the feeling that it was too slow for its price. And it shouldn't have been. The non-crippled version of the MX440 was decent.
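The conversion from the speed-grade marking to a clock rating is simple arithmetic. This is only a sketch, assuming the suffix really is the cycle time in nanoseconds (as with -75B meaning 7.5 ns above); the function name is made up for illustration:

```python
def rated_ddr_mhz(cycle_time_ns: float) -> float:
    """Rated effective DDR clock in MHz for a given chip cycle time.

    The real clock is 1000 / cycle_time_ns MHz; DDR transfers data on
    both clock edges, so the effective ("marketing") clock is double that.
    """
    real_clock_mhz = 1000.0 / cycle_time_ns
    return 2 * real_clock_mhz


for ns in (7.5, 5.0, 4.0):
    print(f"{ns} ns -> {rated_ddr_mhz(ns):.0f} MHz DDR")
```

By the same formula, 7.5 ns chips are rated for about 266 MHz, 5 ns chips for the stock 400 MHz, and 4 ns chips for 500 MHz.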

And it is the same story with the FX5200.

Now look at the best version of the FX5200. Some versions had 4 ns memory; although it is clocked at 400 MHz, you can be sure you'll get well over 500 MHz out of it. And that was the way to go: buy the 128-bit version with 4 ns memory, just go for it. These cards were usually only slightly more expensive than the other versions.

The FX5200 also had DX 8.1 support and was a good overclocker, as low-end cards can usually be overclocked a lot. With the best FX5200 variant, we can usually get an overclock in the range of 300-315 MHz core and 550-580 MHz memory, which is just behind the FX5200 Ultra. Btw, the FX5200 Ultra was not such a good overclocker, and often could not be pushed much further. So with an overclocked FX5200 like this, you were about 5-10% behind an FX5200 Ultra at stock speed, which is not bad for a budget card.
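For a feel of how big these overclocks are, here is a small sketch that turns them into percentage gains over stock. The stock clocks (250 MHz core, 400 MHz memory) are the ones mentioned in this post; the helper name is my own, and clock gains do not translate one-to-one into frame rates:

```python
STOCK_CORE_MHZ = 250     # stock FX5200 core clock, as quoted in this post
STOCK_MEMORY_MHZ = 400   # stock FX5200 effective memory clock


def overclock_gain_percent(stock_mhz: float, overclocked_mhz: float) -> float:
    """Clock increase over stock, in percent."""
    return (overclocked_mhz - stock_mhz) * 100 / stock_mhz


# The typical overclock range for a 128-bit card with 4 ns memory.
for core, memory in ((300, 550), (315, 580)):
    core_gain = overclock_gain_percent(STOCK_CORE_MHZ, core)
    memory_gain = overclock_gain_percent(STOCK_MEMORY_MHZ, memory)
    print(f"{core}/{memory} MHz: core +{core_gain:.1f}%, memory +{memory_gain:.1f}%")
```

That is roughly +20% to +26% on the core and +37% to +45% on the memory, which is a lot of free headroom for a bottom-of-the-line card.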

So this is what caused so much controversy about the FX5200, and why some people labeled it the worst card ever. But it's not. It was the cheating vendors and their ignorance... plus a lack of knowledge about overclocking. Someone who knows the stuff and picks a 128-bit FX5200 with 4 ns memory could expect close to FX5200 Ultra performance, which was not bad for a budget, bottom-of-the-line card. I would say it was a good card. Not the best, but not bad either: average. For the price, it was decent.

Now you see, companies like Gainward were definitely hiding the negative stuff, making the card and the box look so pro while hiding that most of their cards were 64-bit (and had 266 MHz memory instead of 400 MHz). I'm really sorry for the people who fell into this trap, buying a pro-looking wide version of the FX5200 that was actually the slowest possible variant, like this one:

"Gainward FX PowerPack! Pro/660 TV-DVI

Barcode: 471846200-8057

core: 250 MHz 128 MB DDR memory 266 MHz 64-bit bus."

So finally, I have solved the mystery of why I couldn't identify the memory on my first FX5200 card. The chips have -75B at the end of the marking, which made no sense to me at first, as I expected them to be 400 MHz... It is a Gainward. Problem solved. It looked so pro that I took it, with my lack of knowledge. Just look at those solid-state capacitors. It looks like high-quality stuff, while you are actually getting the worst possible FX5200 card. I think cards like this are behind the group of people who bashed the FX5200 so badly in its time and earned it the reputation of "worst game card ever" and "slower than MX440".

Now, I have looked up my second FX5200 card, an unimpressive-looking one with a green PCB from Jetway. It is this card:

https://www.svethardware.cz/recenze-srovnani- … eon-9200/8991-2

except mine has -4 on the memory, so I hit the jackpot and have 4 ns memory on that card. Not sure why, probably a later revision.

But even with an overclock of only 275 MHz core / 500 MHz memory, we can already see how close to the FX5200 Ultra this card is (note: the FX5200 Ultra is also overclocked):

https://www.svethardware.cz/recenze-srovnani- … eon-9200/8991-9

With 4 ns memory, if you get it to 550+ MHz and you also get lucky with the core and reach 300 MHz, I would say the FX5200 is a budget beast, about 5% behind the FX5200 Ultra. This card will smoke the MX440, not to mention that it supports DX 8.1. Bad card? For that price? Definitely not. Compare it with what you got just a year earlier with the much pricier MX440: this budget beast FX5200, if picked right, could outperform even the MX460 for half the money, while offering DX 8.1 support. That's not bad for a card at the very bottom of the series (the FX5100 was an OEM-only card).

Now look at both cards. Could someone without proper knowledge tell that we are looking at the slowest and the fastest variants of the FX5200 series? And that the beast is the generic, plain-looking green-PCB one with a common name (Jetway FX5200) and a cheaper-looking box, while the more badass-looking red one, with the badass name and the nicer box, is the slowest one possible? Probably not. Most people would blindly pick the Gainward version and so end up with the slowest FX5200 possible. So this is, in my opinion, the origin of the "FX5200 is among the worst cards ever" badge, which I think was undeservedly earned.